
Building Feature Stores with Redis: Introduction to Feast with Redis

A feature store is a centralized place where data scientists from different teams across your organization can share features for machine learning. The feature store allows them to search, reuse, and serve features in production at scale. As MLOps matures, feature stores are becoming a cornerstone of machine learning platforms for a few reasons:

- Features are at the core of what makes ML systems effective. Engineering these features is complicated and takes a tremendous amount of time. Feature stores save time and improve ML system outcomes by allowing engineers to reuse features across different ML use cases.

- Feature stores let engineers reuse the same features across training and production, ensuring consistency and reproducibility of the engineered feature.

- Low-latency feature serving is key for real-time use cases. Feature stores serve features to ML models from an online store for real-time inference with low latency.

Redis powers online feature stores

When companies need to deliver real-time, ML-based applications to support high volumes of online traffic, Redis is most often selected as the foundation for the online feature store, thanks to its ability to deliver ultra-low latency with high throughput at scale. We’re seeing ML cloud platform providers offer feature stores using Redis, as in the recent examples of H2O AI Feature Store and Microsoft’s Azure Feature Store. And we’re also seeing a host of build-your-own implementations of feature stores using Redis at a variety of companies including Wix, Swiggy, Comcast, Zomato, AT&T, DoorDash, iFood, and Uber.

These companies have huge datasets, with hundreds to thousands of features feeding ML systems of massive scale. Their Redis-backed feature stores support time-sensitive, mission-critical business applications, many for recommendation and fraud detection, using real-time data.

Open source feature store implementations such as Feast are also choosing Redis. Redis was selected as Feast’s online store for real-time use cases at scale, first by the Indonesian ride-hailing company Gojek (which initially created Feast in collaboration with Google), and later by Feast’s early adopters, such as Udaan and Robinhood. In addition, Microsoft selected Redis for the online store of its new Azure Feature Store with Feast (stay tuned for more details on that soon!).

To provide a better understanding of how feature stores work, and why Redis is such a key feature store component, we’re going to use the rest of this article to introduce Feast and show how you can use it to build your own feature store with Redis.

What is Feast?

Feast (Feature store) is an open source feature store that’s part of the Linux Foundation’s AI & Data Foundation. Feast can serve features from a low-latency online store or from an offline store, while also providing a central registry, storage, and serving. This allows ML engineers and data scientists to discover the relevant features for ML use cases and serve them in production.

Feast is built in a modular way so that you can adopt all or some of its components. Because Feast is open source, you can deploy a Feast feature store and customize it for your own needs, without having to build a feature store from scratch. Companies that choose Feast with Redis for their feature store have significantly shortened development time and effort compared to building their own. In the next section, we’ll go over Feast’s key components.

Feast architecture and key components

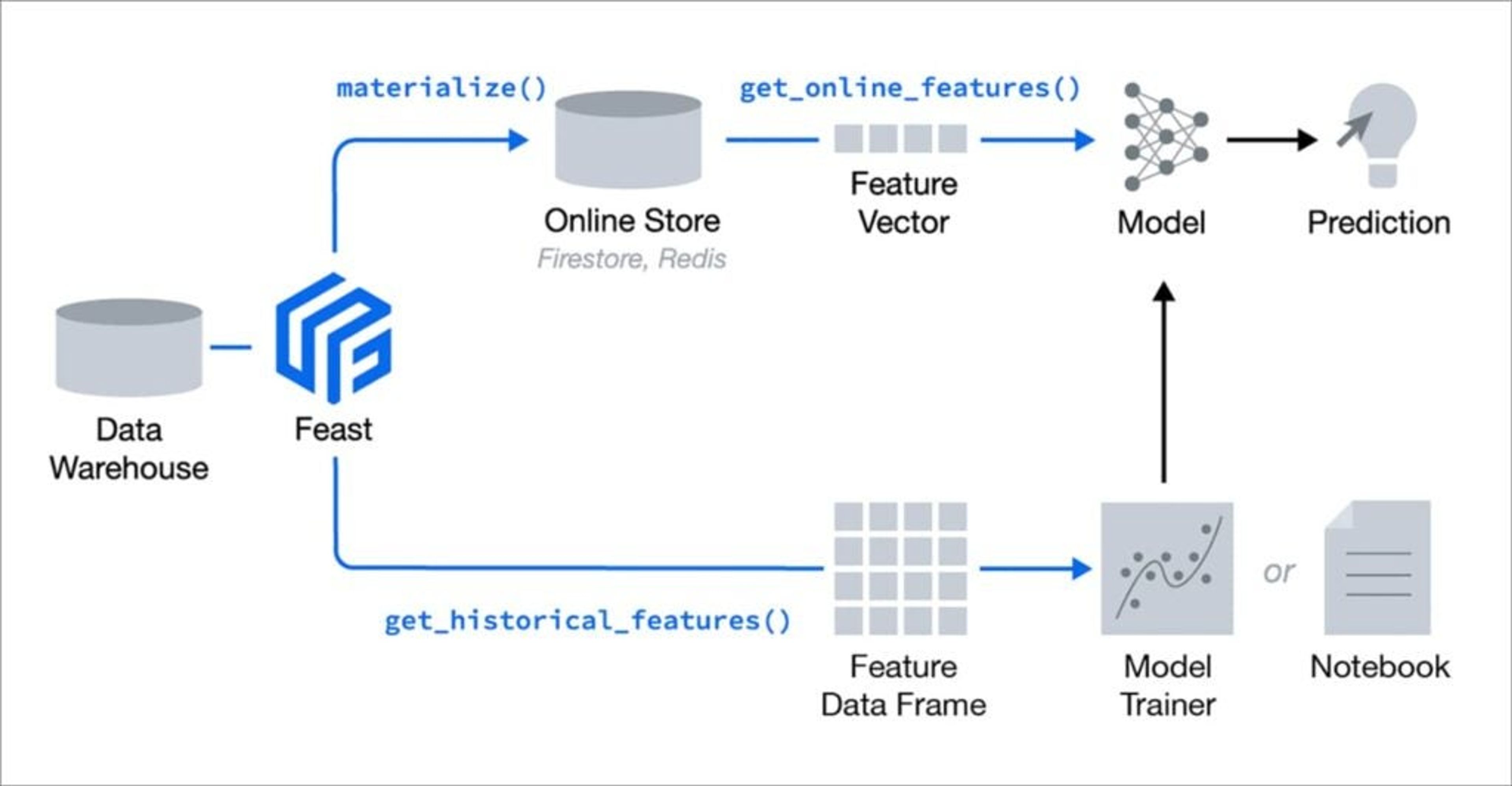

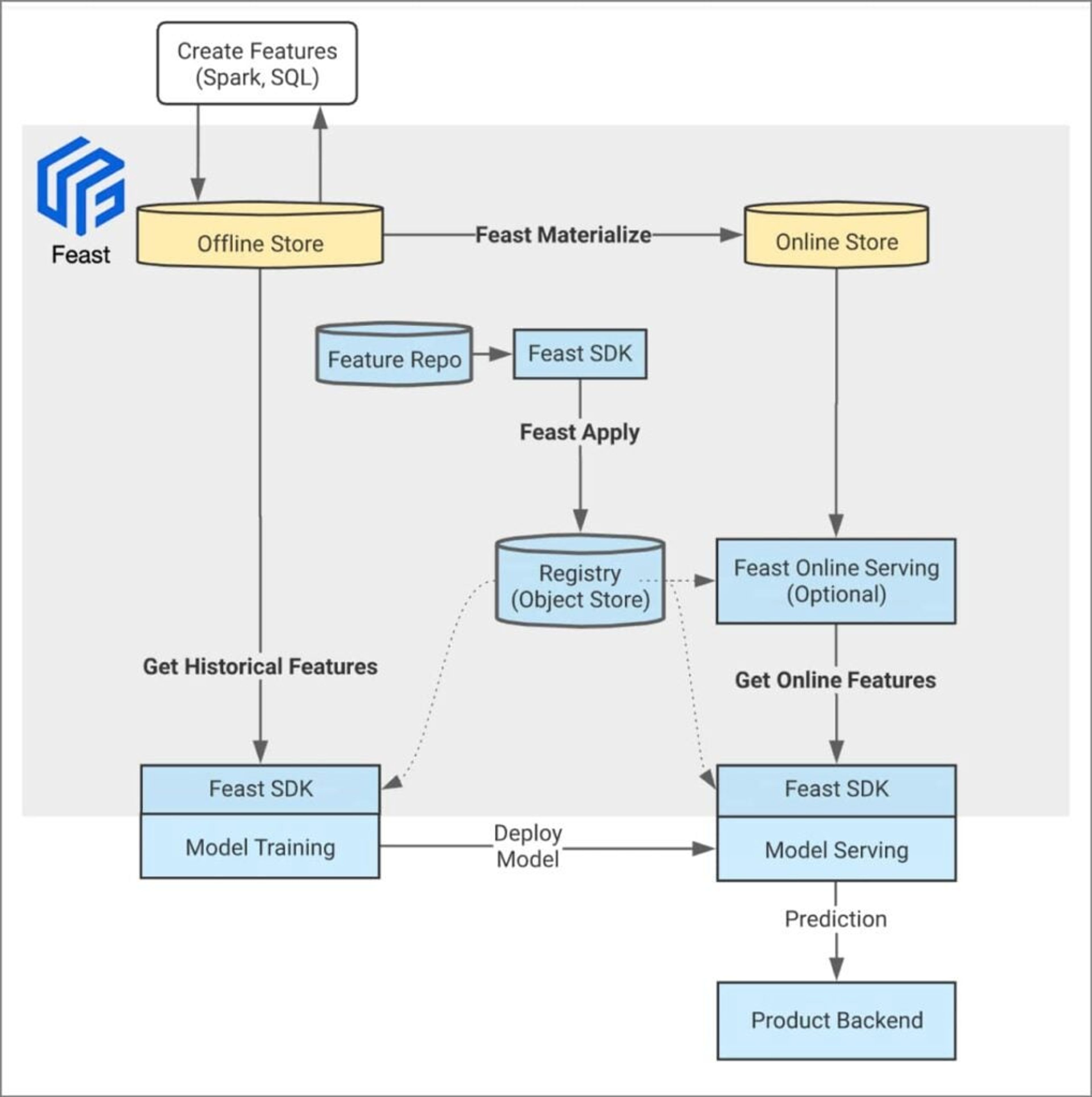

If you look at the Feast architecture diagram above, you’ll notice several key components:

Feast registry: The registry is an object store-based central catalog of all of the feature definitions and their related metadata. It allows data scientists to search, discover, and collaborate on new features. The registry also allows for programmatic access through the Feast SDK.

Feast Python SDK/CLI: The SDK is the primary user-facing tool for managing version-controlled feature definitions, materializing (loading) feature values into the online store, building and retrieving training datasets from the offline store, and serving online features.

Online Store: The online store is a database that stores only the latest feature values for each entity. The online store provides low-latency online feature value lookups. Feast allows users to load or materialize their feature data into an online store in order to serve the latest features to models for online prediction.

Offline Store: Offline stores maintain a record of historic time-series feature values. The offline store persists batch data that has been ingested into Feast. This data is used for producing training datasets. Feast does not manage the offline store directly, but runs queries against it.
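To make the difference between the two stores concrete, here is a minimal sketch in plain Python. It does not use the Feast SDK; the row layout, entity IDs, and feature names are hypothetical, and the two helper functions are only illustrations of the idea: the offline store keeps every timestamped value, while materialization keeps only the latest value per entity.

```python
from datetime import datetime

# Hypothetical offline-store rows: (entity_id, event_timestamp, feature values).
offline_rows = [
    ("driver_1001", datetime(2021, 11, 1, 9), {"conv_rate": 0.41, "trips_today": 3}),
    ("driver_1001", datetime(2021, 11, 1, 17), {"conv_rate": 0.47, "trips_today": 9}),
    ("driver_1002", datetime(2021, 11, 1, 12), {"conv_rate": 0.62, "trips_today": 5}),
]


def materialize(rows):
    """Collapse historical rows into the latest feature values per entity."""
    latest = {}
    for entity_id, ts, values in rows:
        current = latest.get(entity_id)
        if current is None or ts > current[0]:
            latest[entity_id] = (ts, values)
    return {entity_id: values for entity_id, (_, values) in latest.items()}


def features_as_of(rows, entity_id, as_of):
    """Return the values an entity had at a past moment (used for training data)."""
    candidates = [(ts, v) for e, ts, v in rows if e == entity_id and ts <= as_of]
    if not candidates:
        return None
    return max(candidates, key=lambda item: item[0])[1]


# The "online store" here is just a dict holding one row per entity.
online_store = materialize(offline_rows)
print(online_store["driver_1001"])
# {'conv_rate': 0.47, 'trips_today': 9}

# The offline rows can still answer "what did we know at noon?"
print(features_as_of(offline_rows, "driver_1001", datetime(2021, 11, 1, 12)))
# {'conv_rate': 0.41, 'trips_today': 3}
```

In a real deployment, the dict would be replaced by Redis and the list of rows by whatever batch source backs the offline store.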

The high-level architecture diagram above describes the following flow, as an example:

- A data/ML engineer creates the features using their preferred tools. These features are ingested into the offline store.

- The data/ML engineer (or CI/CD process) can persist the feature definitions into a central registry.

- The data/ML engineer (or CI/CD process) can materialize (load) features into Redis (online store).

- The ML engineer or data scientist consumes the offline features to train a model.

- The ML engineer or data scientist deploys the model to production for serving.

- The backend system makes a request to the inference server endpoint, which makes a request to Redis, the online store, to get the online features.
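The last step of this flow, the online lookup at inference time, can be sketched roughly as follows. This is an illustration only: the dict stands in for Redis, and the function names, feature names, and toy linear model are all hypothetical rather than part of Feast or any inference server.

```python
# Stand-in for Redis, the online store: entity key -> latest feature values.
online_store = {
    "user_42": {"avg_order_value": 31.5, "orders_last_7d": 4},
}

# The model expects its inputs in a fixed order (hypothetical feature names).
FEATURE_ORDER = ["avg_order_value", "orders_last_7d"]


def get_online_features(entity_id, feature_names):
    """Fetch the latest values for one entity; missing features come back as None."""
    row = online_store.get(entity_id, {})
    return [row.get(name) for name in feature_names]


def model_predict(feature_vector):
    """Toy model: a fixed linear score standing in for a trained model."""
    weights = [0.01, 0.1]
    return sum(w * x for w, x in zip(weights, feature_vector))


def handle_inference_request(entity_id):
    """What the inference endpoint does: look up features, then score."""
    features = get_online_features(entity_id, FEATURE_ORDER)
    if any(value is None for value in features):
        return {"entity_id": entity_id, "score": None, "reason": "missing features"}
    return {"entity_id": entity_id, "score": model_predict(features)}


print(handle_inference_request("user_42"))
print(handle_inference_request("user_unknown"))
```

Because the lookup sits on the request path, its latency adds directly to every prediction, which is why the online store needs to be fast.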

You can find more details on Feast, including its concepts, architecture, and releases, at feast.dev. Next, let’s see how to quickly get started with building your own feature store using Feast with Redis.

Start using Feast with Redis

Choosing Redis as the online store for Feast (versions v0.11 and later) takes just a couple of lines of configuration: define the online_store in the Feast YAML configuration file, feature_store.yaml, and set its type and connection_string values.

Once these two lines for online_store (type: redis, connection_string: localhost:6379) are in the YAML configuration file, Feast will use Redis as its online store.
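As a rough sketch, the small Python script below writes a feature_store.yaml containing those two online_store lines. The project name, registry path, and provider are assumptions added only to make the file look complete, and the helper function is hypothetical; adjust all of them to match your own repository.

```python
from pathlib import Path
import tempfile

# Only the online_store section comes from the text above; the first three
# keys are placeholder assumptions for a local setup.
FEATURE_STORE_YAML = """\
project: my_feature_repo        # hypothetical project name
registry: data/registry.db      # hypothetical registry path
provider: local                 # assumed provider
online_store:
  type: redis
  connection_string: "localhost:6379"
"""


def write_feature_store_config(repo_dir):
    """Write feature_store.yaml into the given feature repository directory."""
    path = Path(repo_dir) / "feature_store.yaml"
    path.write_text(FEATURE_STORE_YAML)
    return path


# Demo: write the file into a throwaway directory and show its contents.
with tempfile.TemporaryDirectory() as repo_dir:
    config_path = write_feature_store_config(repo_dir)
    print(config_path.read_text())
```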

We just showed how to connect a single Redis instance to Feast. If you’re using an open source Redis cluster with SSL enabled and password authentication, you’ll need to use a different connection_string value.
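The helper below is a hedged sketch of how such a value might be assembled. It assumes the comma-separated format we understand the Feast Redis documentation to describe (host:port pairs followed by key=value options such as ssl and password) and that cluster mode is enabled separately through a redis_type: redis_cluster setting under online_store. The function name, hostnames, and password are hypothetical, so verify the exact format against the Feast documentation for your version.

```python
def build_cluster_connection_string(nodes, ssl=False, password=None):
    """Join host/port pairs and options into one comma-separated string.

    Assumed format: "host1:port1,host2:port2,ssl=true,password=secret".
    """
    parts = [f"{host}:{port}" for host, port in nodes]
    if ssl:
        parts.append("ssl=true")
    if password is not None:
        parts.append(f"password={password}")
    return ",".join(parts)


# Hypothetical two-node cluster with SSL and password authentication.
connection_string = build_cluster_connection_string(
    [("redis1", 6379), ("redis2", 6379)],
    ssl=True,
    password="my_password",
)
print(connection_string)
# redis1:6379,redis2:6379,ssl=true,password=my_password
```

In practice you would load the password from a secret store or environment variable instead of hardcoding it.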

See the Feast documentation for a full listing of the configuration options for Redis. You can also consult the Feast online store format to better understand the data model used to store feature values in Redis.

How to learn more

At Redis, we’re committed to making Feast faster and more reliable for delivering real-time ML use cases at scale. For the recent Feast v0.14 release, we were thrilled to help the core online serving path become 30% faster!

For next steps, we recommend learning how, and for which use cases, companies are using feature stores with Redis as the online store (Wix, Swiggy, Comcast, Zomato, AT&T, DoorDash, iFood, Uber), and specifically how they’re using Feast with Redis (Gojek, Udaan, Robinhood). We also recommend reading about the new Azure Feature Store for Feast and checking out the quick-start tutorials in the Azure GitHub repo.

We hope you’ve enjoyed this introduction to using Feast with Redis. We’ll be posting more resources soon, but if you have any feedback or questions, please don’t hesitate to contact us.
