
Taking In-Memory NoSQL to the Next Level

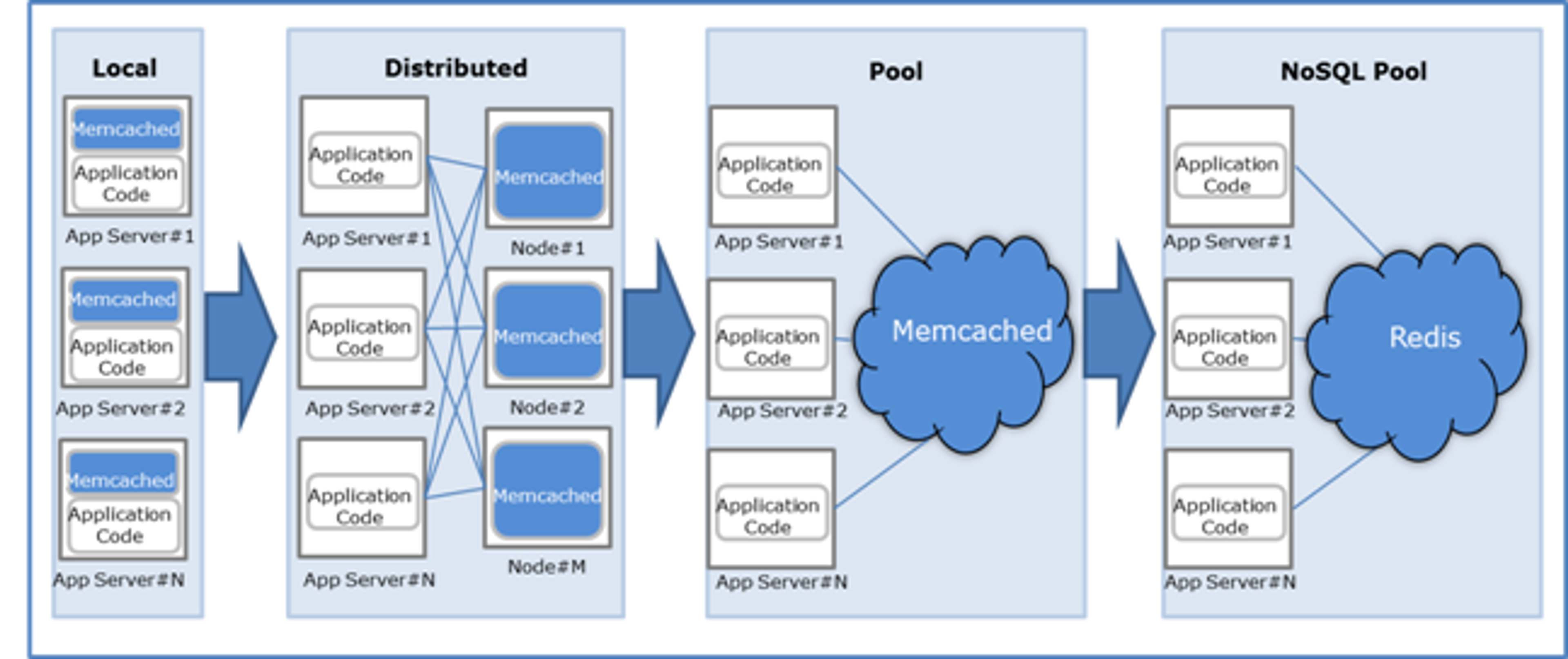

Today we are launching the first in-memory NoSQL cloud, which will change the way people use Memcached and Redis. This is a great opportunity to examine the state of these RAM-based data stores and suggest a new, highly efficient way of operating them in the cloud.

Memcached and Redis are increasingly adopted by today’s web applications to scale out the data tier and significantly improve application performance (in many cases a 10x improvement over a standard RDBMS implementation). However, cloud computing has created new challenges in how scaling and application availability should be handled, and using Memcached and Redis in their simple form may not be enough to cope with these challenges.

Memcached

It’s no secret that Memcached does wonders for websites that need to quickly serve up dynamic content to a rapidly growing number of users. Facebook, Twitter, Amazon and YouTube rely heavily on Memcached to help them scale out; Facebook handles millions of queries per second with Memcached. But Memcached is not just for giants. Any website concerned with response time and user-base growth should consider Memcached for boosting its database performance. That’s why over 70% of all web companies, the majority of which are hosted on public and private clouds, currently use Memcached.

Local caching is the simplest and fastest caching method because you cache the data in the same memory as the application code. Need to render a drop-down list faster? Read the list from the database once, and cache it in an in-memory HashMap. Need to avoid the performance-sapping disk thrashing of an SQL call to repeatedly render a user’s personalized web page? Cache the user profile and the rendered page fragments in the user session.
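The drop-down example above can be sketched in a few lines of Python. This is only an illustration of local (in-process) caching, not code from any particular product: the `LocalCache` class and the `load_countries_from_db` function are hypothetical names, and the "database" is a stand-in function that counts how often it is called.

```python
from typing import Callable, Dict, List


class LocalCache:
    """In-process cache: values live in the same memory as the application."""

    def __init__(self) -> None:
        self._store: Dict[str, object] = {}

    def get_or_load(self, key: str, loader: Callable[[], object]) -> object:
        # On a miss, call the loader once and remember the result.
        # On a hit, return the stored value without touching the database.
        if key not in self._store:
            self._store[key] = loader()
        return self._store[key]


db_calls = 0  # counts how many times the (hypothetical) database is queried


def load_countries_from_db() -> List[str]:
    # Stand-in for a slow SQL query that fills a drop-down list.
    global db_calls
    db_calls += 1
    return ["Canada", "France", "Japan"]


cache = LocalCache()
first = cache.get_or_load("countries", load_countries_from_db)
second = cache.get_or_load("countries", load_countries_from_db)
print(f"database queried {db_calls} time(s) for 2 page renders")
```

The cache lives inside a single process, so a second application server would keep its own separate copy of the same list.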
Although local caching is fine for web applications that run on one or two application servers, it simply isn’t good enough when the data is too big to fit in the application server’s memory space, or when the cached data is updated and shared by users across multiple application servers and user requests. In such cases user sessions are not bound to a particular application server, and local caching may end up providing a low hit ratio and poor application performance.

Distributed Memcached improves on local caching by enabling multiple application servers to share the same cache cluster. Although the Memcached client and server code is rather simple to deploy and use, Distributed Memcached suffers from several inherent deficiencies:

- Lack of high availability – when a Memcached server goes down, the application’s performance suffers because all data queries are now addressed to the RDBMS, which provides a much slower response time. When the problem is fixed, it could take from a few hours to several days until the recovered server becomes “hot” with updated objects and fully effective again. In more severe cases, where session data is stored in Memcached without persistent storage, losing a Memcached server may force users to be logged out or flush their shopping carts (on ecommerce sites).

- Failure hassle – the operator needs to reconfigure all clients for the replacement server and wait for it to “warm up”. Operators sometimes add temporary slave servers to their RDBMS to offload the master server until their Memcached tier recovers.

- Scaling hassle – when the application dataset grows beyond the current Memcached resource capacity, the operator needs to scale out by adding more servers to the Memcached tier. However, it is not always clear when exactly this point has been reached and many operators scale out in a rush only after noticing degradation in their application’s performance.

- Scaling impact on performance – scaling out (or in) Memcached typically causes partial or entire loss of the cached dataset, resulting, again, in degradation of the application’s performance.

- Operating Memcached efficiently requires manpower – to monitor, optimize and scale when required. In many web companies these tasks are carried out by expensive developers or devops.
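To see why scaling out can wipe out much of the cached dataset, consider a client that picks a server by hashing the key modulo the number of servers. This is a simplified sketch under that assumption; real Memcached clients differ, and some use consistent hashing, which reduces the loss but does not remove it. The function names and the `user:<n>` key format are hypothetical.

```python
import hashlib
from typing import List


def server_for(key: str, num_servers: int) -> int:
    # Naive client-side sharding: hash the key, then take it modulo the server count.
    digest = hashlib.md5(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % num_servers


def fraction_remapped(keys: List[str], old_count: int, new_count: int) -> float:
    # A key that maps to a different server after resizing is effectively a cache miss,
    # because the new server has never seen it.
    moved = sum(
        1 for k in keys if server_for(k, old_count) != server_for(k, new_count)
    )
    return moved / len(keys)


keys = [f"user:{i}" for i in range(10_000)]
moved = fraction_remapped(keys, old_count=4, new_count=5)
print(f"{moved:.0%} of keys map to a different server after scaling from 4 to 5")
```

With this scheme a key stays put only when its hash gives the same remainder for both server counts, so adding a fifth server to a four-server tier sends roughly four out of five lookups to a server that does not hold the object yet.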

Amazon has tried to simplify the use of Memcached by offering ElastiCache, a cloud-based value-added service where the user does not have to install Memcached servers but instead rents VMs (instances) pre-loaded with Memcached (at a higher cost than plain instances). However, ElastiCache has not offered a solution for any of the Memcached deficiencies mentioned above. Furthermore, ElastiCache scales out by adding a complete EC2 instance to the user’s cluster, which is a waste of money for users who only require one or two more GBs of Memcached. With this model ElastiCache fails to deliver the true promise of cloud computing: “consume and pay only for what you really need” (the same as for electricity, water and gas).

Redis

Redis, an open source, key-value, in-memory NoSQL database, began ramping up in 2009 and is now used by Instagram, Pinterest, Digg, GitHub, Flickr, Craigslist and many others. It has an active open source community, sponsored by VMware. Redis can be used as an enhanced caching system alongside an RDBMS, or as a standalone database. It provides a completely new set of data types built specifically for serving modern web applications in an ultra-fast and more efficient way. It solves some of the Memcached deficiencies, especially when it comes to high availability, by providing replication capabilities and persistent storage. However, it still suffers from the following drawbacks:

- Failure hassle – There is no auto-fail-over mechanism; when a server goes down, the operator still needs to activate a replica or build a replica from persistent storage.

- Scalability – Redis is still limited to a single master server. Although cluster management capability is being developed, it probably won’t be simple to implement and manage, and it will not support all Redis commands, making it incompatible with existing deployments.

- Operations – Building a robust Redis system requires strong domain expertise in the nuances of Redis replication and data persistence, and building a Redis cluster will be rather complex.

A new cloud service changes the way people use Memcached and Redis

Imagine connecting to an infinite pool of RAM and drawing as much Memcached or Redis memory as you need at any given time, without ever worrying about scalability, high availability, performance, data security and operational issues; and all this with the click of a button (OK, a few buttons). Imagine paying only for the GBs used rather than for full VMs, at a rate similar to what you pay your cloud vendor for plain instances. Welcome to the Garantia Data In-Memory NoSQL Cloud!

By In-Memory NoSQL Cloud I mean an online, cloud-based, in-memory NoSQL data-store service that takes the burden of operating, monitoring, handling failures and scaling Memcached or Redis off the application operator’s shoulders. Here are my top 6 favorite features of such a service, now offered by Garantia Data:

- Simplicity – Operators will no longer need to configure and maintain nodes and clusters. The standard Memcached/Redis clients are pointed at the service’s DNS address, and from that moment on all operational issues are automatically taken care of by the service.

- Infinite scalability – The service provides an infinite pool of memory with true auto-scaling (out or in) to the precise size of the user’s dataset. Operators don’t need to monitor eviction rates or performance degradation in order to trigger scale-out; the system constantly monitors those and adjusts the user’s memory size to meet performance thresholds.

- High availability – Built-in automatic failover keeps data available when a node fails. Local persistent storage of the user’s entire dataset is provided by default, whereas in-memory replication can be configured with a mouse click. In addition, there is no data loss whatsoever when scaling out or in.

- Improved application performance – Response time is optimized through constant monitoring and scaling of the user’s memory. Several techniques that efficiently evict unused and expired objects are employed to significantly improve the hit ratio.

- Data security – For those operators who are concerned with hosting their dataset in a shared service environment, Garantia Data has full encryption of the entire dataset as a key element of its service.

- Cost savings – Garantia Data frees developers from handling data integrity, scaling, high availability and Memcached/Redis version compliance issues. Additional savings are achieved by paying only for GBs consumed rather than for complete VMs (instances). The service follows the true spirit of cloud computing, enabling memory consumption to be paid for much like electricity, water or gas, so you “only pay for what you really consume”.
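The difference between paying for complete instances and paying per GB is simple arithmetic. The sketch below uses made-up placeholder numbers (an 8 GB instance at 80 currency units per month) purely for illustration; they are not Garantia Data or Amazon prices. It assumes, as described above, that the per-GB rate is similar to the per-GB cost of a plain instance.

```python
import math


def per_instance_cost(dataset_gb: float, instance_gb: float, instance_price: float) -> float:
    # Capacity is bought in whole instances, so round the instance count up.
    instances = math.ceil(dataset_gb / instance_gb)
    return instances * instance_price


def per_gb_cost(dataset_gb: float, gb_price: float) -> float:
    # Capacity is metered: pay only for the GBs actually used.
    return dataset_gb * gb_price


# Hypothetical placeholder values, chosen only to make the arithmetic easy to follow.
INSTANCE_GB = 8.0
INSTANCE_PRICE = 80.0
GB_PRICE = INSTANCE_PRICE / INSTANCE_GB  # same per-GB rate as a plain instance

dataset_gb = 9.0  # one GB more than a single instance can hold
whole_instances = per_instance_cost(dataset_gb, INSTANCE_GB, INSTANCE_PRICE)
metered = per_gb_cost(dataset_gb, GB_PRICE)
print(f"per instance: {whole_instances:.2f}, per GB: {metered:.2f}")
```

In this made-up case the ninth GB forces a second full instance under per-instance billing, while metered billing only adds the price of one more GB.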

We recently concluded a closed beta trial with 20 participating companies in which all these features were extensively tested and verified, and it worked fine! So this is not a concept anymore; it’s real, and it’s going to change the way people use Memcached and Redis! Am I excited today? Absolutely!

Fig 1 – DB Caching Evolution
