

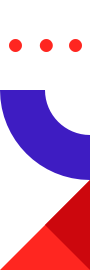

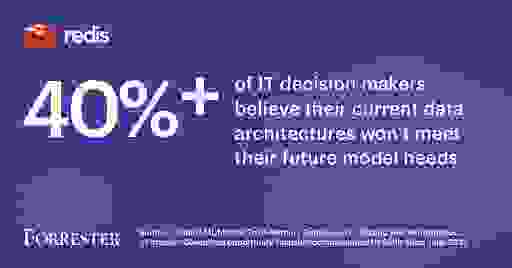

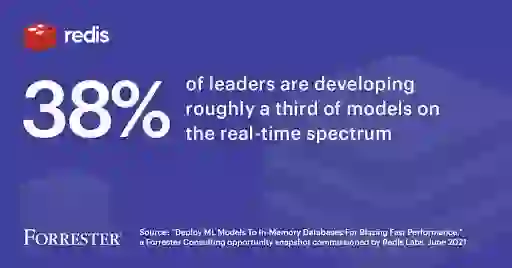

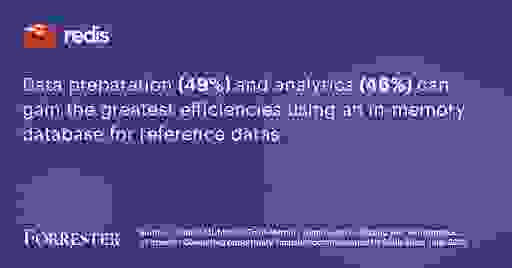

Redis Labs commissioned Forrester Consulting to survey IT decision makers responsible for ML/AI operations strategy. The study reveals that these decision makers believe their current data architectures won’t meet future model inferencing challenges. There is a need for a modern AI infrastructure that can accelerate the ML lifecycle, improve interaction between data scientists and ML engineers, and, above all, improve the accuracy of ML models.

There are several components to this modern AI infrastructure, and Redis plays a key role in the low-latency storage and serving of online features.

Some of the key insights from this study include:

Reuse of images in this report must link to this page as the source


Source: “Deploy ML Models To In-Memory Databases For Blazing Fast Performance,” a Forrester Consulting opportunity snapshot commissioned by Redis Labs, June 2021

The feature store is becoming an important component of any ML/AI architecture. A feature store lets you build and manage features for both the training phase (offline feature store) and the inference phase (online feature store) of machine learning, which helps ensure the highest model quality. In this RedisConf 2021 session, you will learn why the feature store matters in your ML/AI stack and how you can better leverage Redis and Redis Enterprise to improve the quality of your models and accelerate your AI/ML inferencing.
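To make the online feature store idea more concrete, here is a minimal Python sketch of writing features for an entity and reading them back at inference time. It is an illustration under stated assumptions, not the design presented in the session: `InMemoryHashStore`, `OnlineFeatureStore`, the `features:` key prefix, and the feature names are all hypothetical. The stand-in store only imitates the hash-style `hset`/`hgetall` calls that a Redis client exposes, so the example runs without a server.

```python
from typing import Dict, List, Mapping


class InMemoryHashStore:
    """Hypothetical stand-in that imitates Redis-style hash commands in memory."""

    def __init__(self) -> None:
        self._data: Dict[str, Dict[str, str]] = {}

    def hset(self, name: str, mapping: Mapping[str, str]) -> int:
        # Store the field/value pairs under `name`; return how many fields are new.
        bucket = self._data.setdefault(name, {})
        added = sum(1 for field in mapping if field not in bucket)
        bucket.update(mapping)
        return added

    def hgetall(self, name: str) -> Dict[str, str]:
        # Return a copy of all fields for `name`, or an empty dict if absent.
        return dict(self._data.get(name, {}))


class OnlineFeatureStore:
    """Hypothetical online store: one hash per entity, one field per feature."""

    def __init__(self, client, prefix: str = "features") -> None:
        self._client = client
        self._prefix = prefix  # assumed key naming scheme, not a Redis convention

    def _key(self, entity_id: str) -> str:
        return f"{self._prefix}:{entity_id}"

    def write(self, entity_id: str, features: Mapping[str, float]) -> None:
        # Values are stored as strings, as they would be in a hash.
        encoded = {name: str(value) for name, value in features.items()}
        self._client.hset(self._key(entity_id), mapping=encoded)

    def read(self, entity_id: str, names: List[str], default: float = 0.0) -> List[float]:
        # Build the feature vector in the order the model expects,
        # falling back to `default` for features that were never written.
        stored = self._client.hgetall(self._key(entity_id))
        return [float(stored[n]) if n in stored else default for n in names]


store = OnlineFeatureStore(InMemoryHashStore())
store.write("user:42", {"avg_order_value": 31.5, "orders_last_7d": 3})
vector = store.read("user:42", ["orders_last_7d", "avg_order_value"])
print(vector)
```

In principle, a real Redis client could be passed in place of the stand-in. With redis-py, that would mean a client created with `decode_responses=True`, so that `hgetall` returns string keys; this sketch has not been tested against a live server.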

Market demands and technological advances have made artificial intelligence (AI) an attractive and exciting new field to explore, but it’s still not readily accessible for most organizations. There are many data challenges for leaders working to harness AI’s potential, mitigate its downsides, and empower their teams to use these new tools.