
Building a RAG application with Redis and Spring AI

Vector databases often act as memory for AI apps, especially those powered by large language models (LLMs). They enable semantic search, which retrieves relevant context for prompting the LLM.

Until recently, there weren’t many options for building AI apps with Spring and Redis. Now, Redis has gained traction as a high-performance vector database, and the Spring community has introduced Spring AI, a new project that aims to simplify the development of AI-powered apps, including those that use vector databases.

Let’s see how we can build a Spring AI app that uses Redis as its vector database, focusing on implementing a Retrieval Augmented Generation (RAG) workflow.

Retrieval Augmented Generation

Retrieval Augmented Generation (RAG) is a technique for grounding an AI model’s responses in your own data. In a RAG workflow, you first load the data into a vector database such as Redis. When a user query arrives, the vector database retrieves a set of documents that are semantically similar to the query. These documents then serve as context for the user’s question and are combined with the query to generate a response, typically with an LLM.
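To make the retrieve-then-augment idea concrete, here is a minimal, dependency-free Java sketch. It keeps documents and their vectors in memory, ranks them by cosine similarity to a query vector, and builds an augmented prompt from the top matches. The class and method names (RagRetrievalSketch, topK, augment) are illustrative assumptions, not part of Spring AI; in the real app an embedding model produces the vectors and Redis performs the similarity search server-side.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical in-memory illustration of RAG retrieval; not the Spring AI or Redis API.
final class RagRetrievalSketch {
    // A stored document: its text plus a precomputed embedding vector.
    record Doc(String text, double[] vector) {}

    // Cosine similarity: dot(a, b) / (|a| * |b|); returns 0 if either vector is all zeros.
    static double cosine(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Vectors must have the same dimension");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0) {
            return 0;
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Retrieval: return the k documents most similar to the query vector (brute force).
    static List<Doc> topK(List<Doc> docs, double[] query, int k) {
        List<Doc> ranked = new ArrayList<>(docs);
        ranked.sort(Comparator.comparingDouble((Doc d) -> cosine(d.vector(), query)).reversed());
        return List.copyOf(ranked.subList(0, Math.min(k, ranked.size())));
    }

    // Augmentation: combine the retrieved documents and the user question into one prompt.
    static String augment(List<Doc> context, String question) {
        StringBuilder prompt = new StringBuilder("Answer the question using this context:\n");
        for (Doc d : context) {
            prompt.append("- ").append(d.text()).append('\n');
        }
        return prompt.append("Question: ").append(question).toString();
    }
}
```

Ranking every document like this costs O(n) per query. Redis offers a FLAT index for exact search and an HNSW index for approximate search that scales better to large collections.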

In this example, we’ll use a dataset of beers that includes each beer’s name, alcohol by volume (ABV), international bitterness units (IBU), and a description. We’ll load this dataset into Redis to demonstrate the RAG workflow.

Code and Dependencies

You can find all code for the Spring AI and Redis demo on GitHub.

The project uses the usual Spring Boot starter dependencies for web apps, along with Azure OpenAI and Spring AI Redis.

Data Load

The data for our app consists of JSON documents, each describing one beer with attributes such as its name, ABV, IBU, and description.
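Here is one hypothetical way to model a beer document in Java. The record name, field names, and types are assumptions based on the attributes listed earlier, not the dataset’s actual JSON keys. Splitting the data into embedded text and metadata is also a design choice made for illustration: free-text fields form the content that gets embedded, while numeric attributes such as ABV and IBU are kept as metadata.

```java
import java.util.Map;

final class BeerDocSketch {
    // Hypothetical Java view of one beer record; field names and types are assumptions.
    record Beer(String name, double abv, double ibu, String description) {

        // Free text to embed: the name plus description carry most of the semantic signal.
        String content() {
            return name + ": " + description;
        }

        // Structured attributes stored next to the vector, e.g. for filtering by ABV or IBU.
        Map<String, Object> metadata() {
            return Map.of("name", name, "abv", abv, "ibu", ibu);
        }
    }
}
```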

To load the beer dataset into Redis, we use the RagDataLoader class. Its run method executes at app startup: it parses the dataset with a JsonReader and then inserts the resulting documents into Redis through the autowired VectorStore, which computes each document’s embedding before storing it.
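The loading step can be sketched without Spring as follows. Document, VectorStore, and the Supplier-based reader are simplified, hypothetical stand-ins for Spring AI’s Document, VectorStore, and JsonReader; in Spring Boot, a run method like this would typically live in an ApplicationRunner or CommandLineRunner bean. Inserting the documents in batches is our own assumption, meant to keep individual embedding and write requests small for a dataset of this size.

```java
import java.util.List;
import java.util.Map;
import java.util.function.Supplier;

final class DataLoaderSketch {
    // Simplified stand-in for a document: text to embed plus metadata.
    record Document(String content, Map<String, Object> metadata) {}

    // Hypothetical stand-in for a vector store; a real one embeds each document and writes it to Redis.
    interface VectorStore {
        void add(List<Document> documents);
    }

    private final Supplier<List<Document>> reader; // stands in for a JSON reader over the dataset
    private final VectorStore vectorStore;
    private final int batchSize;

    DataLoaderSketch(Supplier<List<Document>> reader, VectorStore vectorStore, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        this.reader = reader;
        this.vectorStore = vectorStore;
        this.batchSize = batchSize;
    }

    // Runs once at startup: parse all documents, then insert them batch by batch.
    int run() {
        List<Document> documents = reader.get();
        for (int start = 0; start < documents.size(); start += batchSize) {
            int end = Math.min(start + batchSize, documents.size());
            vectorStore.add(List.copyOf(documents.subList(start, end)));
        }
        return documents.size();
    }
}
```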

At this point, Redis holds a dataset of about 22,000 beers along with their embeddings.

RAG Service

The RagService class implements the RAG workflow. When a user prompt arrives, its retrieve method performs the following steps:

- Computes the vector of the user prompt

- Queries the Redis database to retrieve the most relevant documents

- Constructs a prompt using the retrieved documents and the user prompt

- Calls a ChatClient with the prompt to generate a response
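The four steps map onto a service method roughly like the sketch below. Embedder, VectorSearch, and Llm are hypothetical single-method interfaces standing in for the embedding model, the Redis-backed vector store, and the ChatClient, and the prompt template wording is an assumption rather than the one used in the example repository.

```java
import java.util.List;

final class RagServiceSketch {
    // Hypothetical single-method stand-ins for the embedding model, vector store, and chat client.
    interface Embedder { float[] embed(String text); }
    interface VectorSearch { List<String> search(float[] queryVector, int topK); }
    interface Llm { String call(String prompt); }

    private final Embedder embedder;
    private final VectorSearch vectorSearch;
    private final Llm llm;
    private final int topK;

    RagServiceSketch(Embedder embedder, VectorSearch vectorSearch, Llm llm, int topK) {
        this.embedder = embedder;
        this.vectorSearch = vectorSearch;
        this.llm = llm;
        this.topK = topK;
    }

    String retrieve(String userPrompt) {
        float[] queryVector = embedder.embed(userPrompt);                // 1. vector of the user prompt
        List<String> documents = vectorSearch.search(queryVector, topK); // 2. most relevant documents
        String prompt = buildPrompt(documents, userPrompt);              // 3. prompt from docs + question
        return llm.call(prompt);                                         // 4. generate the response
    }

    // Hypothetical prompt template; the wording is an assumption for illustration.
    static String buildPrompt(List<String> documents, String userPrompt) {
        return """
            You are a beer expert. Answer using only the DOCUMENTS below.

            DOCUMENTS:
            %s

            QUESTION:
            %s
            """.formatted(String.join("\n", documents), userPrompt);
    }
}
```

Keeping each dependency behind a narrow interface lets you unit test the workflow with lambdas instead of a live model or Redis instance.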

Controller

Now that we have implemented our RAG service, we can wrap it in an HTTP endpoint.

The RagController class exposes the service as a POST endpoint at /chat/{chatId}.
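For illustration, the sketch below exposes a RAG service as a POST endpoint at /chat/{chatId}, the path the front end calls, using the JDK’s built-in com.sun.net.httpserver package so it runs without Spring. This is a swapped-in technique: the idiomatic Spring equivalent would be a @RestController method annotated with @PostMapping("/chat/{chatId}") that reads the chat ID through @PathVariable. Treating the request body as raw prompt text is an assumption; the real endpoint may expect JSON.

```java
import com.sun.net.httpserver.HttpExchange;
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.function.BiFunction;

final class ChatEndpointSketch {
    private static final String PREFIX = "/chat/";

    // Extracts {chatId} from "/chat/{chatId}"; returns null when the path does not match.
    static String chatIdFrom(String path) {
        if (path == null || !path.startsWith(PREFIX)) {
            return null;
        }
        String id = path.substring(PREFIX.length());
        return (id.isEmpty() || id.contains("/")) ? null : id;
    }

    // Starts a server that forwards POST /chat/{chatId} bodies to ragService(chatId, prompt).
    static HttpServer start(int port, BiFunction<String, String, String> ragService) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext(PREFIX, exchange -> handle(exchange, ragService));
        server.start();
        return server;
    }

    private static void handle(HttpExchange exchange, BiFunction<String, String, String> ragService)
            throws IOException {
        try {
            if (!"POST".equals(exchange.getRequestMethod())) {
                exchange.sendResponseHeaders(405, -1); // Method Not Allowed, no body
                return;
            }
            String chatId = chatIdFrom(exchange.getRequestURI().getPath());
            if (chatId == null) {
                exchange.sendResponseHeaders(404, -1); // malformed path, no body
                return;
            }
            String prompt = new String(exchange.getRequestBody().readAllBytes(), StandardCharsets.UTF_8);
            byte[] body = ragService.apply(chatId, prompt).getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().set("Content-Type", "text/plain; charset=utf-8");
            exchange.sendResponseHeaders(200, body.length == 0 ? -1 : body.length);
            if (body.length > 0) {
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(body);
                }
            }
        } finally {
            exchange.close();
        }
    }
}
```

Calling ChatEndpointSketch.start(8080, (chatId, prompt) -> ...) starts the server; call stop(0) on the returned HttpServer to shut it down.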

User Interface

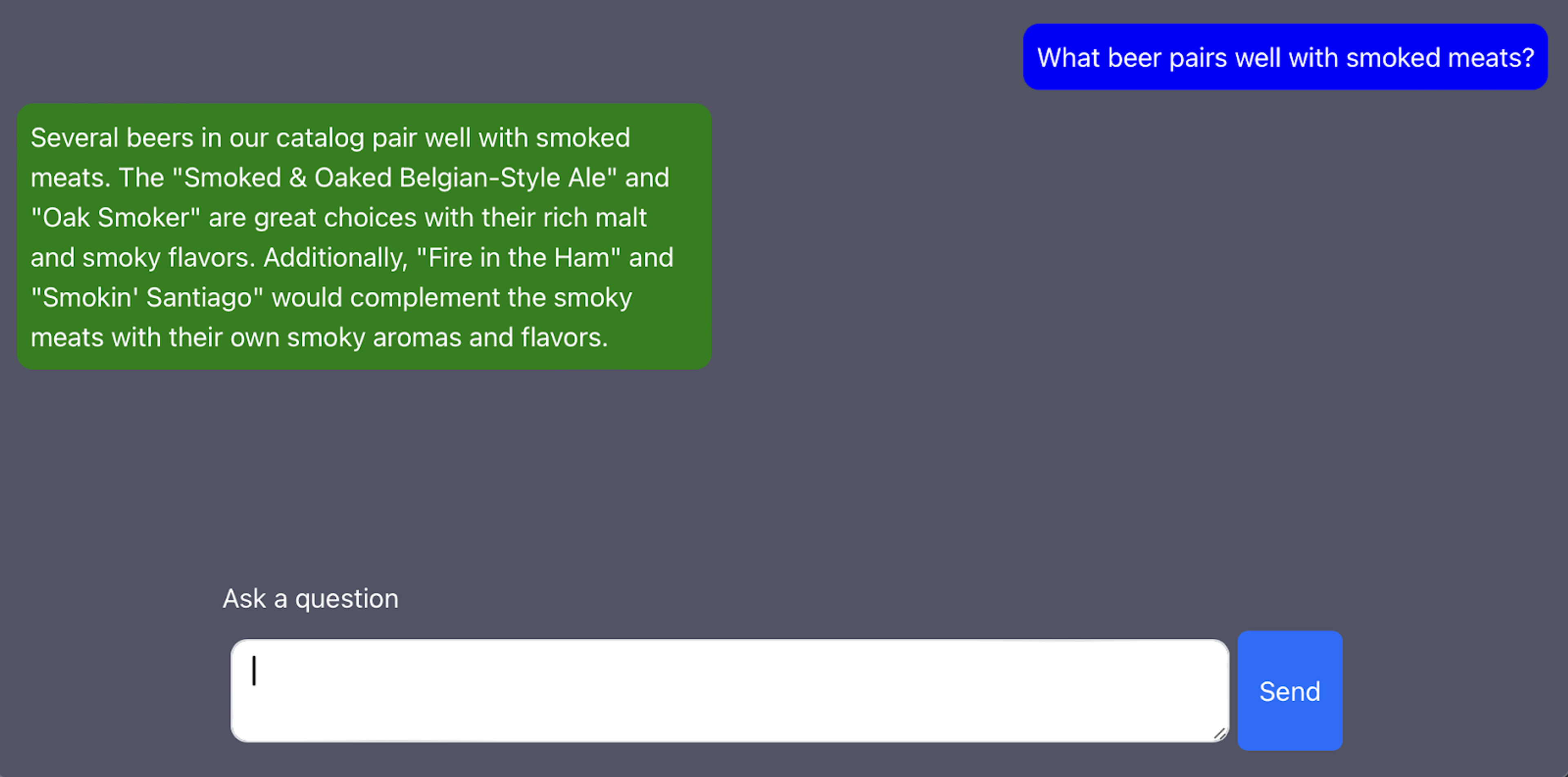

For the user interface, we built a simple React front end that lets users ask questions about beers. It communicates with the Spring back end by sending HTTP requests to the /chat/{chatId} endpoint and displays the responses.
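To exercise the endpoint from Java, for example in an integration test, a request like the one the front end sends can be built with the JDK’s java.net.http client. The plain-text body, the allowed chat ID characters, and the localhost:8080 address in the usage note are assumptions for this sketch; the real front end’s payload may differ.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

final class ChatRequestSketch {
    // Builds POST {baseUrl}/chat/{chatId} with the question as a plain-text body (assumed format).
    static HttpRequest buildRequest(String baseUrl, String chatId, String question) {
        // Restrict chat IDs to URL-safe characters so no path encoding is needed.
        if (!chatId.matches("[A-Za-z0-9-]+")) {
            throw new IllegalArgumentException("Unsupported chatId: " + chatId);
        }
        return HttpRequest.newBuilder(URI.create(baseUrl + "/chat/" + chatId))
                .header("Content-Type", "text/plain; charset=utf-8")
                .POST(HttpRequest.BodyPublishers.ofString(question, StandardCharsets.UTF_8))
                .build();
    }

    // Sends the request and returns the response body, failing on non-200 statuses.
    static String ask(HttpClient client, String baseUrl, String chatId, String question)
            throws IOException, InterruptedException {
        HttpResponse<String> response =
                client.send(buildRequest(baseUrl, chatId, question), HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IOException("Unexpected HTTP status " + response.statusCode());
        }
        return response.body();
    }
}
```

Assuming the back end listens on localhost:8080, a call would look like ChatRequestSketch.ask(HttpClient.newHttpClient(), "http://localhost:8080", "chat-1", "Which stouts have a low IBU?").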

And that’s it: with just a few classes, we’ve implemented a RAG application with Spring AI and Redis.

To take the next step, check out the example code on GitHub. If you have any questions or issues, don’t hesitate to open a ticket. Combining Redis’ speed and ease of use with the handy abstractions provided by Spring AI makes it much easier for Java developers to build responsive AI applications with Spring. We can’t wait to see what you build.

Related Resources

- The code presented in this article is available on GitHub.

- The documentation for Spring AI Redis is available here.

- For more information about Spring AI, visit the project homepage.

- Learn more about the Redis vector search API in the Redis vector documentation.
