Building LLM Applications with Kernel Memory and Redis

Redis now integrates with Kernel Memory, allowing any dev to build high-performance AI apps with Semantic Kernel.

Semantic Kernel is Microsoft’s developer toolkit for integrating LLMs into your apps. You can think of Semantic Kernel as a kind of operating system, where the LLM is the CPU, the LLM’s context window is the L1 cache, and your vector store is RAM. Kernel Memory is a component project of Semantic Kernel that acts as the memory controller, and this is where Redis steps in, acting as the physical memory.

As an AI service, Kernel Memory lets you index and retrieve unstructured multimodal data. You can use KM to easily implement common LLM design patterns such as retrieval-augmented generation (RAG). Redis is a natural choice as the back end for Kernel Memory when your apps require high performance and reliability.

In this post, we’ll see how easily we can build an AI chat app using Semantic Kernel and Redis.

Modes of Operation

There are two ways you can run Kernel Memory:

- Directly within your app

- As a standalone service

If you run Kernel Memory directly within your app, you’re limiting yourself to working within the .NET ecosystem (no great issue for this .NET dev), but the beauty of running Kernel Memory as a standalone service is that it can be addressed by any language that can make HTTP requests.

Running the sample

To illustrate the platform neutrality of Kernel Memory, we’ve provided two samples, one in Python and one in .NET. In practice, any language that can run an HTTP server can easily be substituted to run with this example.

Clone the sample

To clone the .NET sample, run the following:

To clone the Python sample, run:

Running the samples

These example apps rely on OpenAI’s completion and embedding APIs. To start, obtain an OpenAI API key. Then pass the API key to docker-compose. This will start:

- The front-end service (written in React)

- The back-end web service (written in .NET or Python, depending on the repository you are using)

- Redis Stack

- Kernel Memory as its own standalone service

Add data to Kernel Memory

We have a pre-built dataset of beer information that we can add to Kernel Memory (and ask it for recommendations). To add that dataset, just run:

Accessing the front end

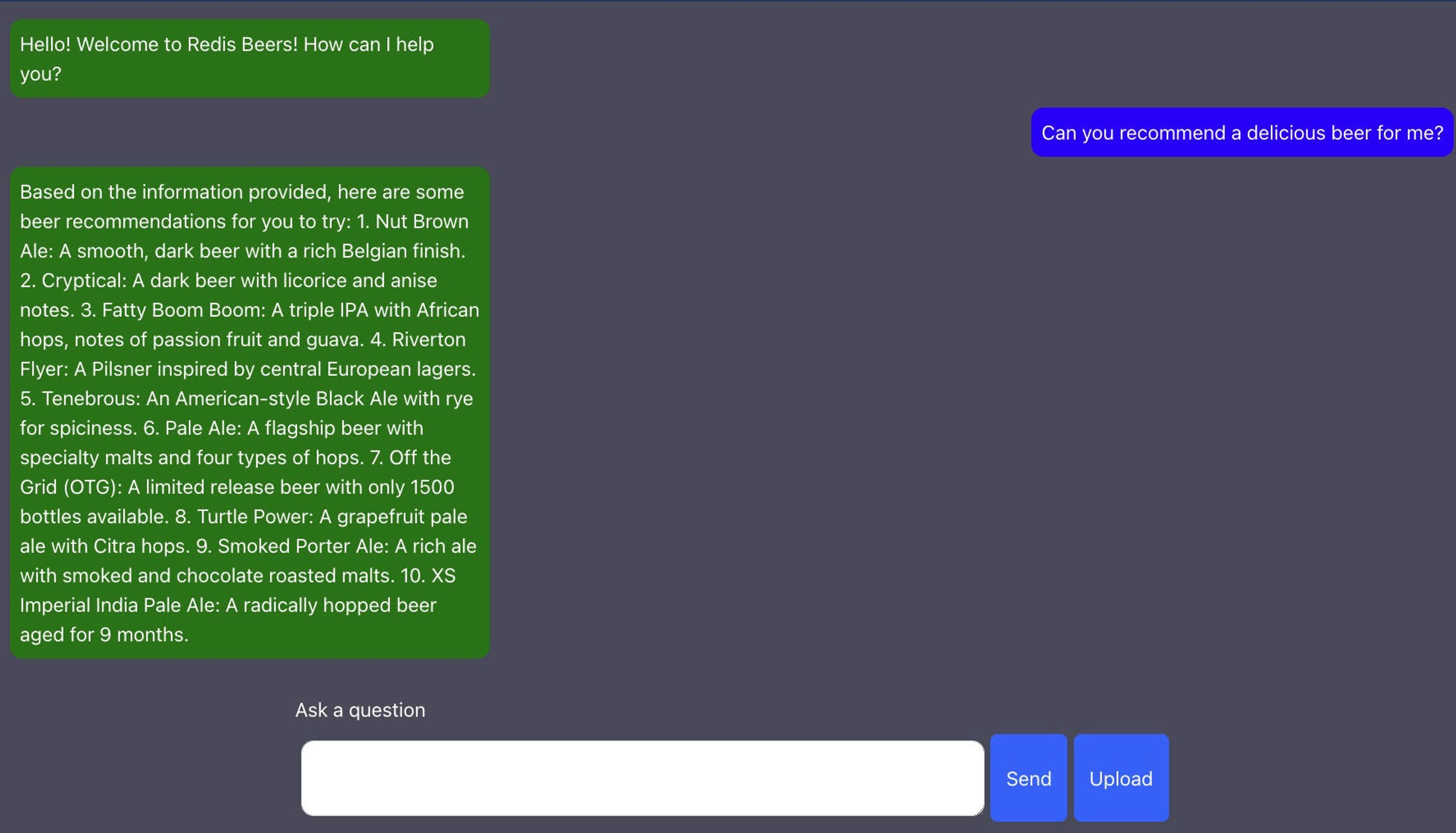

To access the front end, navigate to http://localhost:3000., From there, you can ask the bot for recommendations:

How does this all work?

There are several parts of this demo:

- Redis

- Our React front end, which provides a simple chat interface

- Kernel Memory

- The back end, which maintains the chat session, searches Kernel Memory, and crafts the prompts for the LLM

Vector search must be enabled on the Redis instance. It is available in Redis, Redis Cloud, and Azure Cache for Redis (Enterprise tier), and any of these options will work. Our front end is a simple React app. Kernel Memory requires some configuration, which we’ll cover below. Plugins and HTTP calls drive our back end, so we’ll cover that, too.

Configuring Kernel Memory

To get Kernel Memory up and running with Redis, you need to make some minor updates to the

Notice in this file that there is a Retrieval object:

This tells the retriever which Embedding Generator to use (OpenAI) and which MemoryDbType to use (Redis).

There’s also a Redis object:

This Redis object maps out the important configuration parameters for the Redis connector in Kernel Memory:

- The ConnectionString is a StackExchange.Redis connection string, which tells it how to connect to Redis.

- Tags tells Kernel Memory which tags you might want to filter on, and optionally assigns each tag a separator so that filtering follows Redis tag semantics.

- AppPrefix lets you provide an app prefix for Kernel Memory, so you can have multiple Kernel Memory apps working with the same Redis database.
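As a rough illustration of the two objects described above, the sketch below models them as Python dictionaries and runs a few sanity checks before printing them as JSON. Only the names `Retrieval`, `MemoryDbType`, `ConnectionString`, `Tags`, and `AppPrefix` come from the text; the `EmbeddingGeneratorType` key name, the single-character separator rule, and every value shown are assumptions made for the example, so check them against your own configuration file.

```python
import json

# Hypothetical values throughout. "EmbeddingGeneratorType" is an assumed key
# name for the embedding generator setting mentioned in the text.
retrieval = {
    "EmbeddingGeneratorType": "OpenAI",
    "MemoryDbType": "Redis",
}

redis = {
    # StackExchange.Redis-style connection string (host:port here).
    "ConnectionString": "localhost:6379",
    # Tag name -> optional separator; None means no separator is set.
    "Tags": {"user": ",", "type": None},
    # Hypothetical prefix that keeps this app's keys apart from other apps.
    "AppPrefix": "beer-demo-",
}


def check_redis_settings(retrieval_cfg, redis_cfg):
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    if retrieval_cfg.get("MemoryDbType") != "Redis":
        problems.append("Retrieval.MemoryDbType should be 'Redis'")
    if not redis_cfg.get("ConnectionString"):
        problems.append("Redis.ConnectionString is required")
    for tag, separator in redis_cfg.get("Tags", {}).items():
        # Assumption: a separator, when present, is a single character.
        if separator is not None and len(separator) != 1:
            problems.append(f"separator for tag '{tag}' should be one character")
    return problems


if __name__ == "__main__":
    print(json.dumps({"Retrieval": retrieval, "Redis": redis}, indent=2))
    print(check_redis_settings(retrieval, redis))
```

Running a check like this before starting the service is optional, but it catches a wrong `MemoryDbType` or a missing connection string early.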

Interacting with Kernel Memory

There are fundamentally two things we want to do with Kernel Memory:

- Add Memories

- Query Memories

To be sure, Kernel Memory has a lot more functionality, including information extraction, partitioning, pipelining, embedding generation, summarization, and more, but we’ve configured all of that already and they’re all in service of those two goals.

To interact with Kernel Memory, you use the HTTP interface. There are a few front ends for this interface:

- Within a Semantic Kernel Plugin

- With the IKernelMemory interface (in .NET)

- Directly with the HTTP endpoints

Adding documents to Kernel Memory

To upload a document, invoke one of IKernelMemory’s Import functions. In the .NET example, we used a simple Document import with a file stream, but you can import web pages and text as well:

In Python, there’s no KernelMemory client (yet), but you can simply use the Upload endpoint:
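A minimal sketch of such an upload, using only the Python standard library, might look like the following. It assumes the Kernel Memory service accepts a multipart form POST at `/upload` with `index` and `documentId` fields plus a file part. The form field names, the `file1` part name, and the `http://localhost:9001` address are assumptions for illustration rather than details taken from the sample.

```python
import urllib.request
import uuid


def build_upload_request(base_url, document_id, filename, content, index="default"):
    """Build (but do not send) a multipart/form-data POST to the upload endpoint.

    `content` is the raw file body as bytes. Field names are assumptions.
    """
    boundary = uuid.uuid4().hex
    chunks = []

    # Plain form fields first.
    for name, value in (("index", index), ("documentId", document_id)):
        chunks.append(
            (
                f"--{boundary}\r\n"
                f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
                f"{value}\r\n"
            ).encode("utf-8")
        )

    # Then the file part itself.
    chunks.append(
        (
            f"--{boundary}\r\n"
            f'Content-Disposition: form-data; name="file1"; filename="{filename}"\r\n'
            "Content-Type: application/octet-stream\r\n\r\n"
        ).encode("utf-8")
        + content
        + b"\r\n"
    )

    # Closing boundary marks the end of the form.
    chunks.append(f"--{boundary}--\r\n".encode("utf-8"))

    return urllib.request.Request(
        f"{base_url}/upload",
        data=b"".join(chunks),
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
        method="POST",
    )


def send(request):
    """Send a prepared request and return the HTTP status code."""
    with urllib.request.urlopen(request) as response:
        return response.status


# Example (requires a running service at the assumed address):
# request = build_upload_request("http://localhost:9001", "beer-001",
#                                "beers.txt", b"Pale ale, citrus notes")
# print(send(request))
```

Separating request construction from sending keeps the form layout easy to inspect and test without a running service.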

Querying Kernel Memory

To query Kernel Memory, you can use either the Search or the Ask endpoint. The Search endpoint searches the index and returns the most relevant documents, whereas the Ask endpoint performs the same search and then pipes the results into an LLM to generate an answer.
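To make the difference between the two endpoints concrete, here is a small sketch that builds a JSON request for either one. It assumes the service exposes `/search` and `/ask` as POST endpoints, that the search body uses a `query` field and the ask body uses a `question` field, and that the service listens on `http://localhost:9001`. All of these are assumptions for the example, not details confirmed by the sample.

```python
import json
import urllib.request


def build_query_request(base_url, endpoint, text, index="default"):
    """Build (but do not send) a JSON POST for the search or ask endpoint."""
    if endpoint == "search":
        # Search: return the most relevant stored content, no LLM involved.
        payload = {"index": index, "query": text}
    elif endpoint == "ask":
        # Ask: run the search, then let an LLM answer from the results.
        payload = {"index": index, "question": text}
    else:
        raise ValueError(f"unknown endpoint: {endpoint!r}")

    return urllib.request.Request(
        f"{base_url}/{endpoint}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_json(request):
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


# Example (requires a running service at the assumed address):
# hits = send_json(build_query_request("http://localhost:9001", "search", "hoppy IPA"))
# answer = send_json(build_query_request("http://localhost:9001", "ask", "Which beer is hoppy?"))
```

Search is the better fit when your own back end crafts the final prompt, while Ask is convenient when you want Kernel Memory to produce the answer directly.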

Invoke from a prompt

The Kernel Memory .NET client comes with its own plugin, which allows you to invoke it from a prompt:

The prompt above summarizes the response from Kernel Memory’s ask endpoint, guided by the provided system prompt and by the intent generated earlier in the pipeline from the chat history and the most recent message. To get the plugin itself working, you’ll need to add the Memory Plugin to the kernel. The following are clipped portions from the Program.cs of the .NET project:

This injects Kernel Memory into the DI container, then adds Semantic Kernel, and finally adds the MemoryPlugin from the Kernel Memory Client to Semantic Kernel.

Invoking from an HTTP Endpoint

Naturally, you can also invoke these query functions from an HTTP endpoint. In the Python sample, we just invoke the search endpoint (and then format the results):

These memories can then be fed into our LLM to give it more context when answering a question.
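One way to picture that last step is the sketch below, which pulls the text snippets out of a search response and folds them into a prompt. The response shape used here (a `results` list whose items carry `partitions` with `text` and `relevance` fields) is an assumption for illustration, and the prompt wording is hypothetical, so adapt both to what your service actually returns.

```python
def collect_memories(search_response, limit=3):
    """Return up to `limit` text snippets, most relevant first."""
    snippets = []
    for result in search_response.get("results", []):
        for partition in result.get("partitions", []):
            snippets.append(
                (partition.get("relevance", 0.0), partition.get("text", ""))
            )
    # Highest relevance first; drop empty snippets before applying the limit.
    snippets.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in snippets if text][:limit]


def build_prompt(question, memories):
    """Combine retrieved memories and the user's question into one prompt."""
    context = "\n".join(f"- {memory}" for memory in memories)
    return (
        "Answer the question using only the facts below.\n"
        f"{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    # Hand-written sample data standing in for a real search response.
    sample = {
        "results": [
            {
                "partitions": [
                    {"text": "Stout: roasted malt, coffee notes.", "relevance": 0.71},
                    {"text": "IPA: strong hop bitterness.", "relevance": 0.88},
                ]
            }
        ]
    }
    print(build_prompt("Which beer is hoppy?", collect_memories(sample)))
```

Capping the number of snippets keeps the prompt within the LLM's context window, which is the scarce resource in this design.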

Wrapping Up

Semantic Kernel provides a straightforward way of managing the building blocks of the semantic computer, and Kernel Memory provides an intuitive and flexible way to interface with the memory layer of the Semantic Kernel. As we’ve observed here, integrating Kernel Memory with Redis is as simple as a couple of lines in a config file.
