Introducing Haink: Redis’ applied AI agent

Meet Haink, Redis’ always-on AI teammate

Across industries, teams are turning to AI agents to move faster and work smarter. At Redis, we built one from scratch to show what is possible with Redis as the foundation.

Meet Haink, a purpose-built AI teammate from the Redis Applied AI team. Haink is available around the clock to help Redis teammates understand customer architectures, navigate the AI market, and identify where Redis fits best. Haink is not a demo or a prototype. It is a production-grade agent that Redis engineers, solutions architects, and field teams use every day.

Why we built Haink

Our field and solutions engineering teams often face a challenge: keeping up with the rapidly changing AI landscape while helping customers see Redis’ unique value within it. The world of AI evolves faster than models can be retrained, which means static systems often fall behind as new techniques, architectures, and best practices emerge. Redis is a core component in many AI deployments, from real-time caching to vector similarity search, yet mapping that value to a customer’s specific environment takes time.

We built Haink as an “Nth team member” to solve that problem. Haink codifies Redis’ collective expertise, from technical blogs and code examples to real-world deployments, and makes that knowledge instantly accessible to everyone at Redis. It is also prompted to follow our teams’ preferred code style and technical standards, ensuring consistency in how Redis concepts and recommendations are shared across the company. In doing so, Haink helps scale our knowledge, allowing others to interact with and learn from it in real time.

What Haink can do

Haink acts like a Redis expert who is always available. You can ask it for code examples, architectural recommendations, or even technology comparisons.

For example, you might message Haink in Slack and say, “Show me a code example for semantic caching with RedisVL.” Within seconds, Haink searches Redis’ internal knowledge base, finds the relevant example, and returns working code that the Applied AI team has already tested.

Haink can also handle reasoning-based questions, such as “When is Redis better than Pinecone?” In those cases, it analyzes context from Redis materials and explains the architectural tradeoffs, including where Redis’ unified data model or low latency would deliver more value.

Haink is not limited to summarization or retrieval. It can perform complex computations on the fly. For example, you can pass Haink an example JSON and an estimated number of documents, and it will run a custom tool that calculates the number of shards a customer workload requires. This capability connects reasoning with real-time computation, showing how Redis can power logic-based operations in addition to AI reasoning.
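As a rough illustration of what such a sizing tool might compute, here is a minimal sketch. It is not Haink's actual tool: the function name `estimate_shards`, the 1.5x overhead factor, and the 25 GB per-shard capacity are all assumptions chosen for the example, and the JSON-serialized size is only a crude proxy for in-memory size.

```python
import json
import math


def estimate_shards(sample_doc: dict, num_docs: int,
                    overhead_factor: float = 1.5,
                    shard_capacity_gb: float = 25.0) -> int:
    """Estimate a shard count from one sample document (hypothetical heuristic)."""
    # Size of the sample document as compact JSON, in bytes.
    doc_bytes = len(json.dumps(sample_doc, separators=(",", ":")).encode("utf-8"))
    # Scale to the full dataset and pad for indexes and per-key overhead (assumed factor).
    total_bytes = doc_bytes * num_docs * overhead_factor
    total_gb = total_bytes / 1024**3
    # Always provision at least one shard.
    return max(1, math.ceil(total_gb / shard_capacity_gb))


# Example input: one sample document plus an estimated document count.
sample = {"id": "doc:1", "title": "example", "embedding": [0.0] * 768}
shards = estimate_shards(sample, num_docs=50_000_000)
print(f"Estimated shards: {shards}")
```

A real sizing tool would also need to account for replication, throughput targets, and how vectors are actually encoded in memory, none of which this sketch models.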

Like any good teammate, Haink continues to learn. Every conversation is saved as a regression test that helps our engineering teams measure accuracy and reduce hallucinations over time. If Haink ever gets something wrong, that interaction becomes a test case for improving future versions.
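One way to picture this feedback loop is a suite that records past questions along with the facts a good answer must mention, then replays them against a new agent version. The sketch below is an assumption about how that could look, not Haink's evaluation code: `RegressionCase`, `RegressionSuite`, the phrase-matching check, and `stub_agent` are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RegressionCase:
    question: str
    required_phrases: list  # facts a correct answer must mention


@dataclass
class RegressionSuite:
    cases: list = field(default_factory=list)

    def record(self, question: str, required_phrases: list) -> None:
        """Save a past conversation as a replayable test case."""
        self.cases.append(RegressionCase(question, list(required_phrases)))

    def run(self, agent: Callable[[str], str]) -> float:
        """Return the fraction of cases whose answer mentions every required phrase."""
        if not self.cases:
            return 1.0
        passed = 0
        for case in self.cases:
            answer = agent(case.question).lower()
            if all(phrase.lower() in answer for phrase in case.required_phrases):
                passed += 1
        return passed / len(self.cases)


def stub_agent(question: str) -> str:
    # Stand-in for a real agent call; it only knows one answer.
    if "semantic caching" in question.lower():
        return "Use RedisVL's semantic cache to reuse answers for similar prompts."
    return "I am not sure."


suite = RegressionSuite()
suite.record("How do I do semantic caching?", ["RedisVL"])
suite.record("Which queue does the API service use?", ["Redis Streams"])
pass_rate = suite.run(stub_agent)
print(f"Pass rate: {pass_rate:.0%}")
```

Substring matching is a deliberately simple scoring rule. A production evaluation would more likely compare answers semantically or use a grader model.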

How Haink works behind the scenes

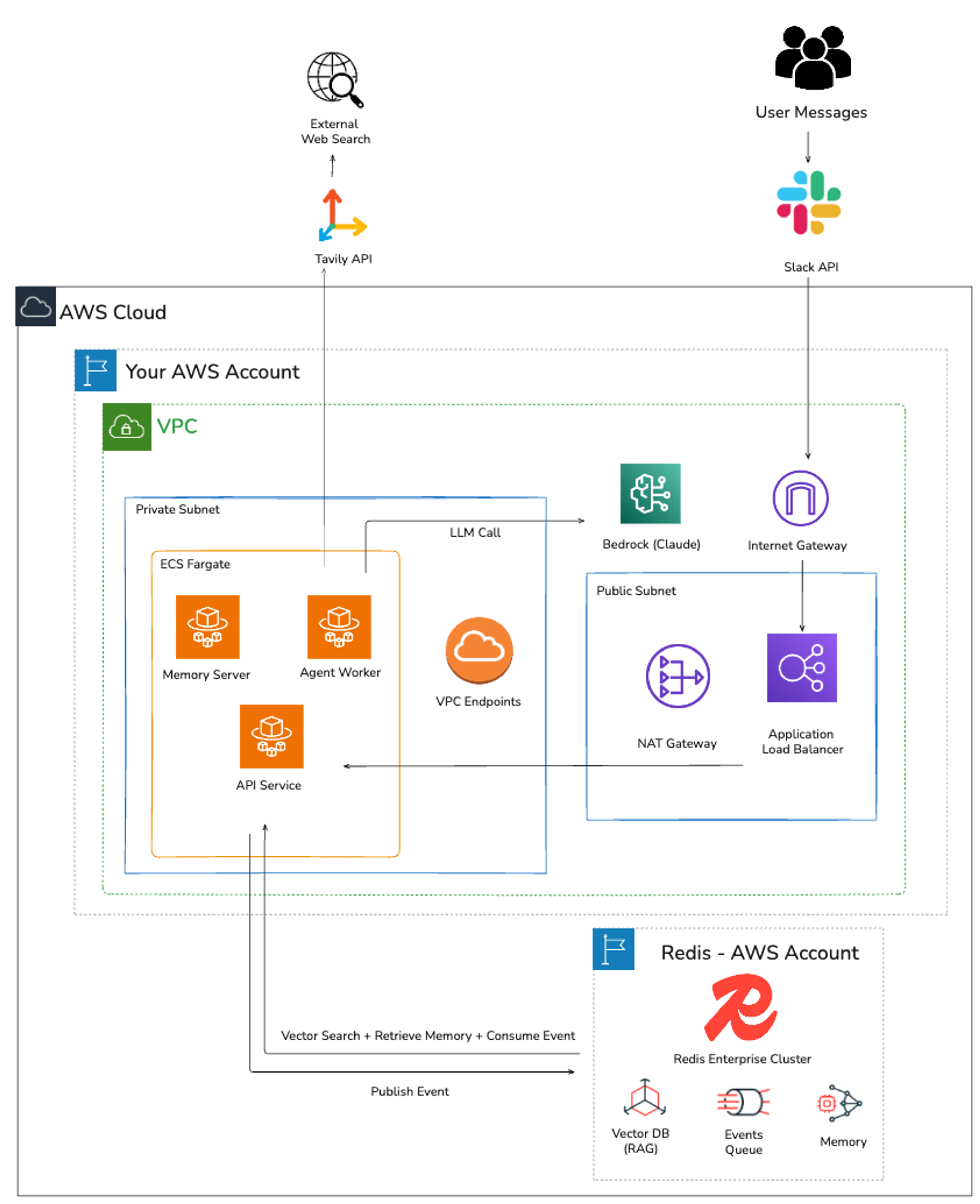

Haink lives in Slack, but the system running it is complex and entirely homegrown. Built from scratch using Redis and AWS, it shows how a real-time data platform, a managed model service, and scalable infrastructure can work together to power enterprise-grade AI agents.

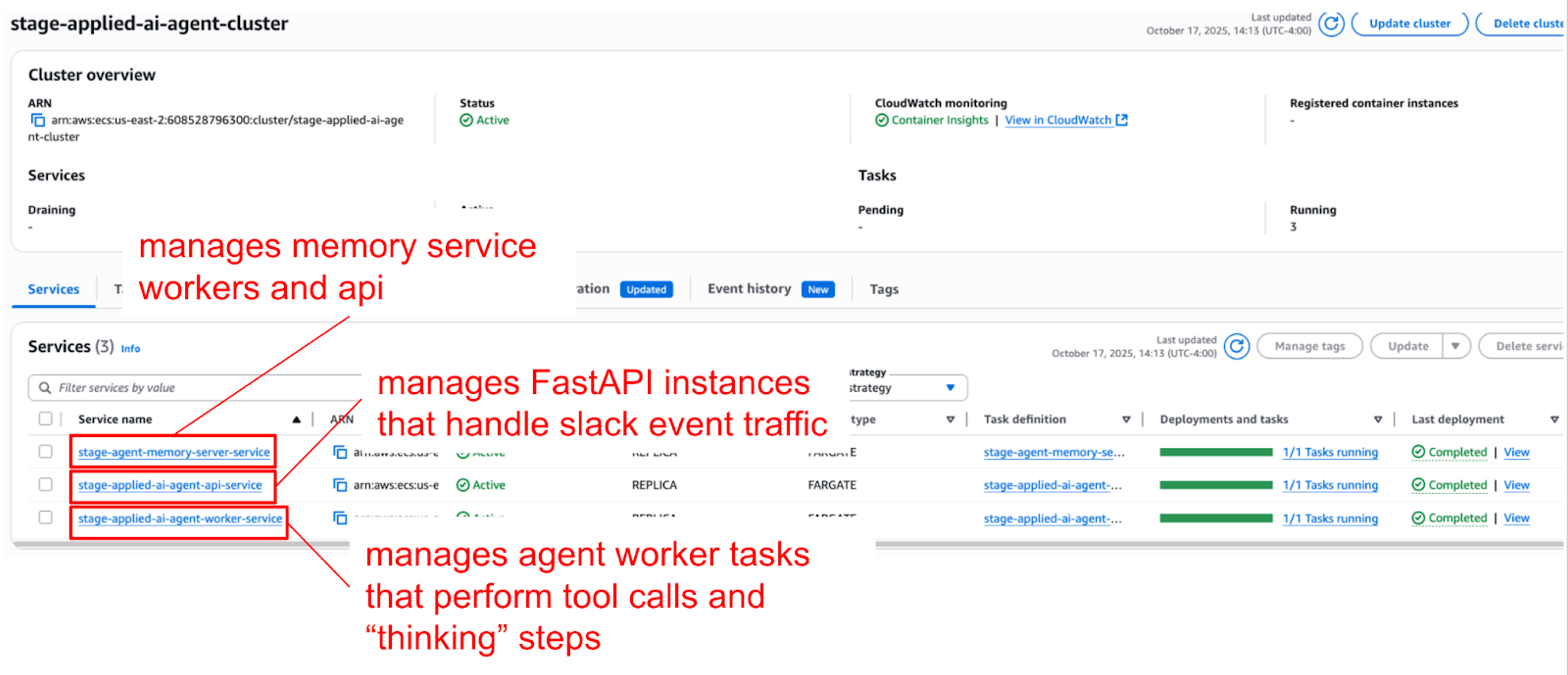

The architecture is built entirely within AWS, combining ECS Fargate for orchestration, Redis Cloud for real-time data and memory, and AWS Bedrock for large language model inference. ECS handles API routing, background processing, and scaling, while Bedrock provides secure, managed access to models like Claude. Together, they enable a fully event-driven agent workflow that can process Slack messages, retrieve context from Redis, and generate accurate, context-aware answers in real time.

The flow is asynchronous and optimized for performance:

- A user mentions Haink in Slack. Slack sends an HTTPS POST request to an Application Load Balancer, which routes it to the API service.

- The API service queues the request into Redis Streams.

- A worker service polls Redis Streams, consumes the task, and retrieves the conversation state from Redis.

- The worker searches Redis vector data for relevant documents and, if needed, performs a web search using the Tavily API.

- The worker then calls Claude on AWS Bedrock, sending the retrieved context for reasoning and response generation.

- Bedrock returns the LLM output, which the worker writes back to Redis to update the conversation state.

- Finally, the worker posts the response to Slack, often including references and source links.
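The queue hand-off between the API service and the worker can be sketched as follows. This is a simplified illustration, not Haink's source: the stream name, consumer group name, function names, and the `InMemoryStreamClient` stand-in are assumptions. The three client methods mirror redis-py's `xadd`, `xreadgroup`, and `xack`, so a real `redis.Redis(decode_responses=True)` client could be passed in instead once the consumer group has been created with `xgroup_create`. The `answer_fn` callback stands in for the retrieval, web search, and Bedrock steps.

```python
STREAM = "haink:tasks"    # hypothetical stream name
GROUP = "haink-workers"   # hypothetical consumer group name


def enqueue_mention(client, channel: str, thread_ts: str, text: str) -> str:
    """API side: push a Slack mention onto the stream and return its entry ID."""
    return client.xadd(STREAM, {"channel": channel, "thread_ts": thread_ts, "text": text})


def process_next(client, consumer: str, answer_fn) -> list:
    """Worker side: read one task, answer it, then acknowledge it."""
    replies = []
    # ">" asks for entries not yet delivered to this consumer group.
    batches = client.xreadgroup(GROUP, consumer, {STREAM: ">"}, count=1, block=1000)
    for _stream, entries in batches or []:
        for entry_id, fields in entries:
            replies.append((fields["channel"], answer_fn(fields["text"])))
            # Acknowledge only after the task has been handled.
            client.xack(STREAM, GROUP, entry_id)
    return replies


class InMemoryStreamClient:
    """Tiny stand-in for the three calls above so the sketch runs without a server."""

    def __init__(self):
        self.entries, self.pending, self.cursor = [], set(), 0

    def xadd(self, name, fields):
        entry_id = f"{len(self.entries) + 1}-0"
        self.entries.append((entry_id, dict(fields)))
        return entry_id

    def xreadgroup(self, groupname, consumername, streams, count=None, block=None):
        name = next(iter(streams))
        batch = self.entries[self.cursor:self.cursor + (count or len(self.entries))]
        self.cursor += len(batch)
        self.pending.update(entry_id for entry_id, _ in batch)
        return [[name, batch]] if batch else []

    def xack(self, name, groupname, *ids):
        self.pending.difference_update(ids)
        return len(ids)


client = InMemoryStreamClient()
enqueue_mention(client, "C123", "1700000000.0001", "When is Redis a good fit?")
replies = process_next(client, "worker-1", lambda text: f"Answer to: {text}")
print(replies)
```

Acknowledging after the work is done means a crashed worker leaves the entry pending, where another consumer in the group could later claim and retry it.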

Figure 1: Haink system architecture combining Redis for real-time data and AWS Bedrock for managed LLM inference.

Each component plays a distinct role:

- Slack API handles user interactions and triggers the workflow.

- ECS Fargate runs containerized services, including the API, agent worker, and memory server.

- AWS Bedrock (Claude) provides scalable, managed access to foundation models for reasoning and text generation.

- Redis Cloud, deployed in AWS, stores memory, manages event queues, and powers vector search for retrieval-augmented generation (RAG).

Figure 2: Haink deployment on AWS ECS cluster integrating API, worker, and memory services.

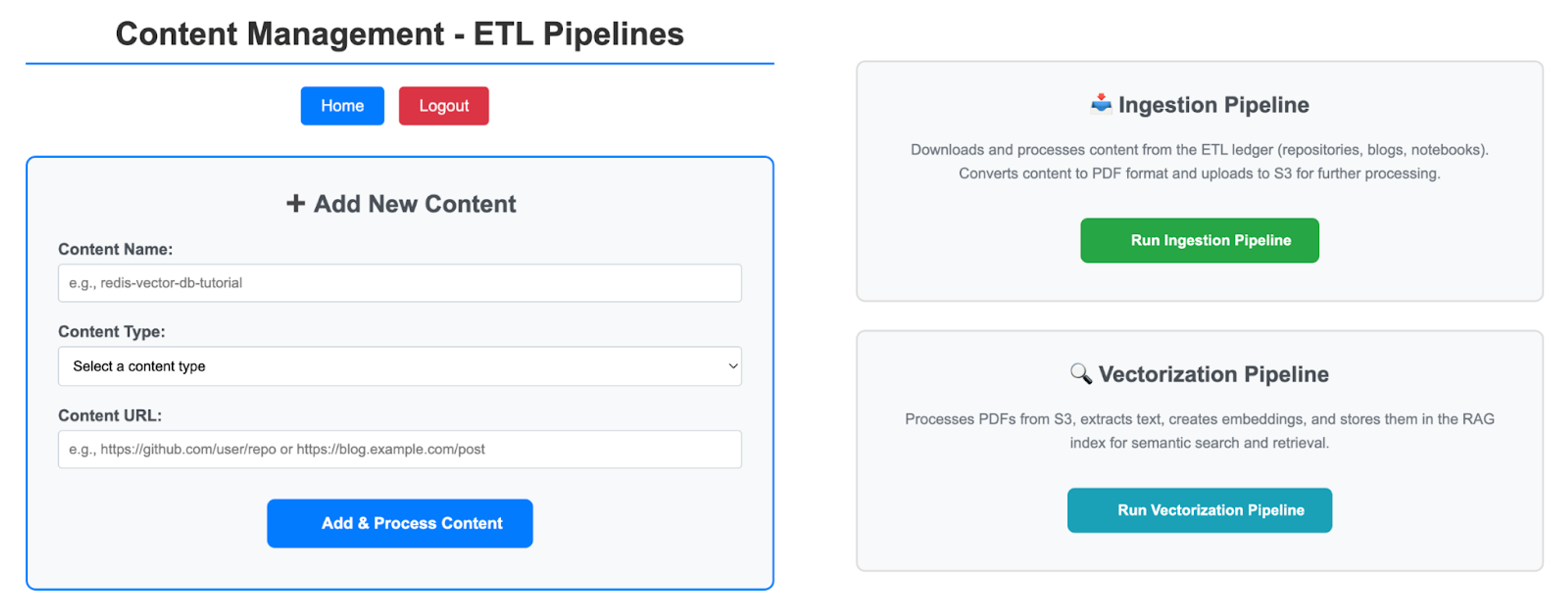

In addition to its runtime environment, Haink includes an internal content management panel that powers its knowledge ingestion pipeline. This interface tracks each piece of content as it moves through stages such as ingestion, vectorization, and completion, ensuring Redis knowledge sources remain current and ready for retrieval.

Figure 3: Haink content management admin panel showing ingestion and vectorization pipelines for Redis knowledge sources.
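The stage tracking described above can be modeled as a small state machine. The sketch below is illustrative only: the `Stage` enum, `ContentTracker` class, and source names are hypothetical, and the three stages are taken from the description rather than from the panel's actual schema.

```python
from collections import Counter
from enum import Enum


class Stage(Enum):
    INGESTION = "ingestion"
    VECTORIZATION = "vectorization"
    COMPLETE = "complete"


# Each stage's successor; COMPLETE is terminal and has none.
NEXT_STAGE = {
    Stage.INGESTION: Stage.VECTORIZATION,
    Stage.VECTORIZATION: Stage.COMPLETE,
}


class ContentTracker:
    """Tracks which pipeline stage each knowledge source is in."""

    def __init__(self):
        self.items = {}

    def add(self, source: str) -> None:
        self.items[source] = Stage.INGESTION

    def advance(self, source: str) -> Stage:
        current = self.items[source]
        if current not in NEXT_STAGE:
            raise ValueError(f"{source} is already complete")
        self.items[source] = NEXT_STAGE[current]
        return self.items[source]

    def summary(self) -> Counter:
        """Count sources per stage, as an admin panel might display."""
        return Counter(stage.value for stage in self.items.values())

    def retrievable(self) -> list:
        """Only fully processed sources are ready for retrieval."""
        return sorted(s for s, stage in self.items.items() if stage is Stage.COMPLETE)


tracker = ContentTracker()
tracker.add("blog/semantic-caching")
tracker.add("docs/vector-search")
tracker.advance("blog/semantic-caching")  # ingestion -> vectorization
tracker.advance("blog/semantic-caching")  # vectorization -> complete
tracker.advance("docs/vector-search")     # ingestion -> vectorization
print(dict(tracker.summary()), tracker.retrievable())
```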

This architecture makes Haink modular, scalable, and production-ready. Redis enables sub-millisecond access to memory and vector data, while Bedrock delivers managed and compliant large language model inference. Together, they form a real-time, intelligent agent system that is deployable at scale.

The role of memory

Memory is what makes Haink feel like a teammate rather than a tool. Using Redis’ Agent Memory Server, Haink stores both short-term and long-term context.

Short-term, or “working memory,” tracks recent exchanges so Haink can maintain a natural multi-turn conversation. Over time, important information is promoted to long-term memory, allowing Haink to recall previous sessions and recognize patterns in interactions.

This approach reflects how human memory works while demonstrating Redis’ strength as the memory layer for intelligent agents. With its persistence and low latency, Redis is well suited to AI systems that need to reason and recall information in real time.
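The promotion from working memory to long-term memory described above can be sketched in a few lines. This is a toy, in-process model and not the Agent Memory Server's API: the `AgentMemory` class, the fixed window size, and the explicit `important` flag are assumptions made for illustration.

```python
from collections import deque


class AgentMemory:
    """Bounded working memory that promotes flagged messages when they age out."""

    def __init__(self, working_size: int = 4):
        self.working_size = working_size
        self.working = deque()   # recent (message, important) pairs
        self.long_term = []      # promoted messages that outlive the window

    def add(self, message: str, important: bool = False) -> None:
        # When the window is full, evict the oldest message first.
        if len(self.working) == self.working_size:
            old_message, was_important = self.working.popleft()
            # Promote instead of discarding if it was flagged important.
            if was_important:
                self.long_term.append(old_message)
        self.working.append((message, important))

    def context(self) -> list:
        """Long-term facts first, then the recent conversation turns."""
        return self.long_term + [message for message, _ in self.working]


memory = AgentMemory(working_size=2)
memory.add("Customer runs on AWS", important=True)
memory.add("Hello")
memory.add("They need vector search")  # evicts and promotes the first message
print(memory.context())
```

In a real system the importance decision would more likely come from a model or extraction step rather than a caller-supplied flag, and both tiers would live in Redis rather than in process memory.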

Why Haink matters

Haink is more than an internal utility. It is a living reference architecture that shows what is possible when you build agents on Redis.

For Redis account executives and solutions architects, Haink acts as a 24/7 expert, helping them prepare for customer meetings with architecture examples and Redis-specific AI use cases. For the wider AI community, Haink is proof that it is possible to build a capable, production-grade AI agent using Redis, Python, and sound engineering practices.

The Applied AI team uses Haink every day, gathering feedback, refining its reasoning, and improving its capabilities. This process of using our own technology internally, often called dogfooding, ensures that Haink continues to evolve while advancing Redis’ own real-time AI platform.

What’s next for Haink

The team behind Haink is already experimenting with new capabilities. Work is underway to add multimodal inputs, allowing Haink to understand and respond to not just text but also code snippets and other data types. Integrations with enterprise tools such as Salesforce via Glean are also planned, which will expand Haink’s context and make its responses even more relevant.

Future updates will improve the evaluation and feedback systems so Haink can continue learning from every interaction. The more Redis teams use Haink, the smarter and more contextual it will become.

Building AI teammates with Redis

Haink represents what Redis does best: enabling real-time, intelligent systems that learn, adapt, and scale. It is not simply a helpful Slackbot. It is proof of what is possible when large language models, vector search, and real-time memory come together on Redis. You can explore how Haink is built by visiting the open-source repo on GitHub.

Try Haink yourself. Share a customer scenario, describe their architecture, and ask where Redis fits. In seconds, you will see a thoughtful, context-aware response backed by Redis data and logic.
