Redis vs Valkey for ElastiCache sprawl & resource efficiency

The problem of ElastiCache & Valkey sprawl

ElastiCache looks straightforward when you start small. Each workload is tied to its own cluster, so teams spin up new clusters as apps scale. Over time, this creates sprawl: dozens or even hundreds of clusters spread across accounts and environments.

Sprawl creates four main challenges:

- Operational overhead: More clusters to provision, patch, monitor, back up, and failover-test, each with its own security groups and parameter groups

- Resource waste: Unused compute and memory trapped inside isolated clusters

- Higher cloud bills: Paying for capacity that cannot be shared or reused

- Right-sizing friction: Deployment-time choices like node type, shard count, and parameter groups are difficult to change later without risk, coordination, and downtime windows. Teams often keep suboptimal footprints rather than rework them, which compounds ElastiCache sprawl over time. On top of that, each cluster has to be sized for CPU, memory, and network I/O in advance, which usually means either running load tests or overestimating and overspending.

ElastiCache sprawl starts innocently enough. A team launches one cluster per app to keep things simple, but it doesn’t stay simple for long. Dev, staging, and prod come next. Traffic shifts and seasonality force capacity spikes, so teams stand up extra clusters. As apps evolve, teams isolate workloads with different requirements. They separate session data, caching, and streaming so they can scale independently. Then the footprint goes global. An app deployed in multiple regions needs local caches in those regions too, so the pattern multiplies across geographies.

Organizational sprawl then kicks in. Different business units provision their own clusters. A cluster scaled up for an event in 2024 is still running at that size because changing node types, shard counts, or parameter groups is time-consuming and rarely a priority. Consolidation keeps getting deferred, so yesterday's deployment choices become today's fixed costs. The result is a fragmented fleet with data silos, soaring costs, and ongoing friction between dev and ops teams.

AWS’s switch from Redis to Valkey in ElastiCache doesn’t solve this problem. Valkey follows the same cluster-per-workload model, so users continue to face sprawl and the same right-sizing inertia as deployments grow.

How Redis reduces ElastiCache sprawl & improves efficiency

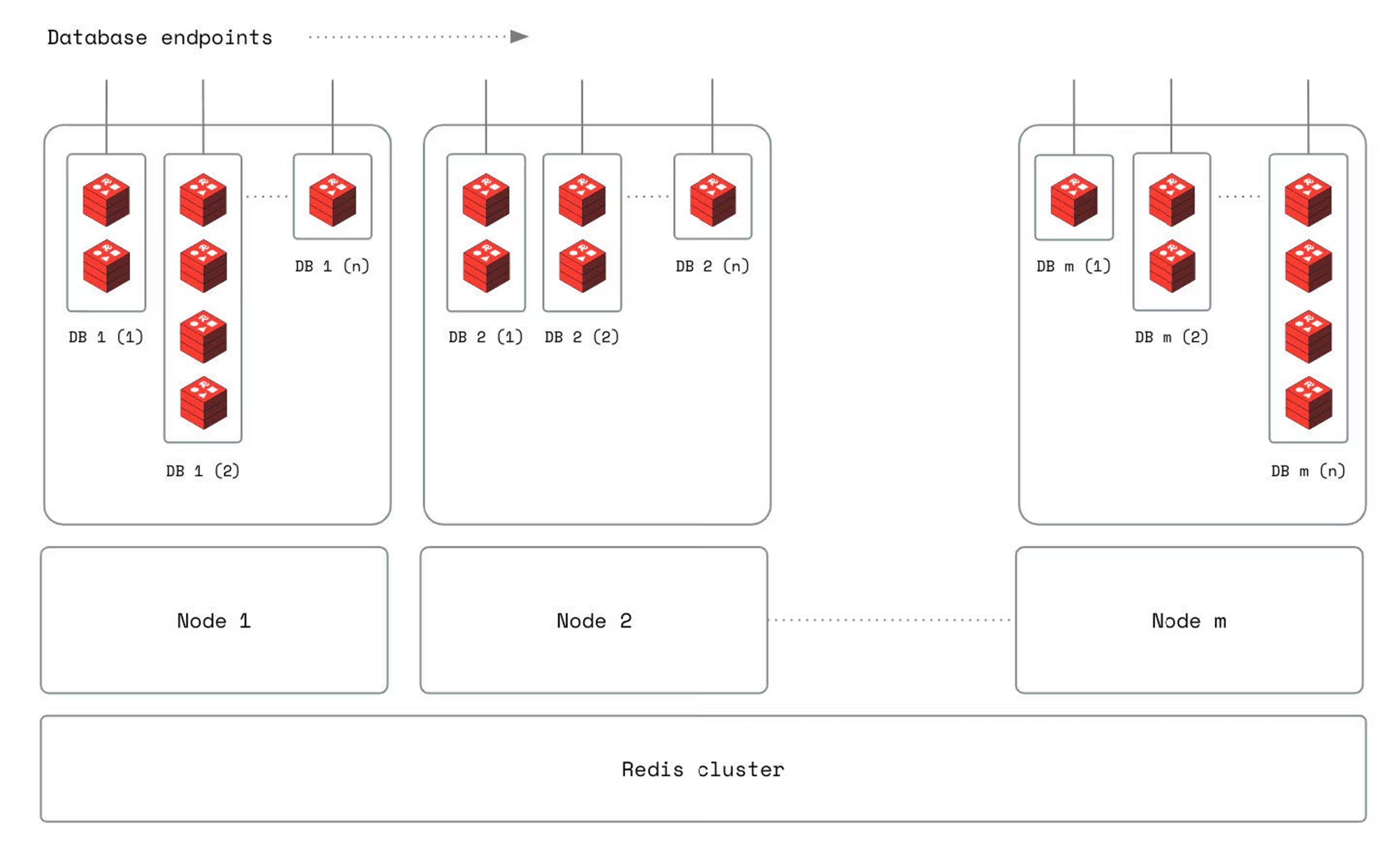

Redis was designed to solve this problem. Instead of requiring one cluster per workload, we let organizations run multiple isolated databases within a single cluster. That means:

- Centralized cluster management

- Fewer clusters to provision and manage

- Lower total overhead for devs and ops teams

- More efficient infrastructure usage

- Multi-tenancy with strong isolation at the database level
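To make the efficiency point concrete, here is a back-of-the-envelope model in Python. It assumes each isolated cluster must be sized for its own workload's peak, while a shared cluster only has to cover the peak of the combined demand, because workloads rarely peak at the same moment. The workload names, demand figures, and 20% headroom are hypothetical. The model also ignores replication, per-database memory limits, CPU, and network I/O, so treat it as an illustration rather than a sizing tool.

```python
"""Back-of-the-envelope comparison: one cluster per workload vs one shared cluster.

All workload names and demand figures are hypothetical, for illustration only.
"""

# Hypothetical memory demand in GB for three workloads, sampled every 4 hours.
DEMAND_GB = {
    "sessions": [2, 3, 6, 9, 5, 3],
    "catalog_cache": [8, 4, 3, 3, 4, 7],
    "leaderboard": [1, 1, 2, 3, 8, 2],
}

# Assumed safety margin on top of observed peak demand (20%).
HEADROOM = 1.2


def per_cluster_capacity(demand: dict[str, list[float]], headroom: float = HEADROOM) -> float:
    """Each workload gets its own cluster sized for its own peak plus headroom."""
    return sum(max(series) for series in demand.values()) * headroom


def shared_capacity(demand: dict[str, list[float]], headroom: float = HEADROOM) -> float:
    """One shared cluster sized for the peak of the combined demand plus headroom."""
    # Add up all workloads at each sample time, then take the highest total.
    combined = [sum(sample) for sample in zip(*demand.values())]
    return max(combined) * headroom


if __name__ == "__main__":
    isolated = per_cluster_capacity(DEMAND_GB)
    pooled = shared_capacity(DEMAND_GB)
    print(f"one cluster per workload: {isolated:.1f} GB")
    print(f"one shared cluster:       {pooled:.1f} GB")
    print(f"capacity not provisioned: {1 - pooled / isolated:.0%}")
```

With these made-up numbers, the per-workload layout provisions 30 GB while the shared cluster needs about 20.4 GB. The entire gap comes from peaks that do not overlap; if every workload peaked at the same moment, the two figures would be identical.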

Risks of AWS’s Redis to Valkey shift

ElastiCache users also need to weigh the impact of AWS moving from Redis to Valkey. While Valkey maintains basic API compatibility with Redis 7, there are risks:

- Compatibility gaps: Valkey doesn't track newer Redis releases, which bring 150+ more commands and 8 more data structures. Valkey also lags behind Redis 8 and Redis 8.2 innovations such as hash field expiration, vector search, vector sets, wide-ranging performance gains, and streams improvements.

- Innovation slowdown: In addition to the open source community, Redis is backed by a well-funded company solely invested in Redis with a clear roadmap. Valkey leans on community contributions, which come and go and are slower to deliver new capabilities.

- Lock-in risk: AWS optimizes ElastiCache for selling you more AWS infrastructure, not for advancing Valkey. The conflict of interest limits flexibility for organizations that want portability across clouds or hybrid environments.

For teams betting on ElastiCache, this means more clusters to manage today and uncertainty about compatibility tomorrow.

Strategic benefits of Redis’s architecture

Redis avoids this sprawl by combining efficiency with resilient operations, comprehensive security, and optimizations for complex environments. We aim to make managing Redis as simple as using Redis.

Architects and developers gain:

- Resource efficiency: Multi-tenancy and centralized management

- Cutting-edge innovation: Redis Query Engine for secondary indexing and search, vector search for AI workloads, semantic caching, and agentic memory patterns

- Portability: A similar Redis experience across Redis Cloud and Redis Software, across clouds, and across deployment environments

For architects, this means simpler designs and lower costs. For devs and ops teams, it means fewer moving parts and faster delivery of new apps and services.

Conclusion

ElastiCache and Valkey force sprawl by requiring separate clusters for each workload. Redis reduces sprawl with multi-tenancy and centralized management, delivering better efficiency at scale. We continue to innovate beyond caching to support modern workloads, while AWS’s shift to Valkey leaves users with compatibility risks and more operational overhead.

This post is part of our Redis vs ElastiCache blog series. We also compare Redis with ElastiCache for cost effectiveness, AI and vector search, and multi-cloud flexibility. Explore the full series to get a complete view of your options.

Learn more at redis.io/compare/elasticache.