
Redis as the engine behind real-time, intelligent chatbots

Chatbots are the most popular AI technology in businesses today. Almost 70% of companies already use them for customer service and internal workflows, and for good reason: they’re transforming how businesses interact with customers, employees, and data.

In customer service, chatbots are automating high-volume inquiries and reducing response times from minutes to seconds—freeing up human agents to handle complex issues. In sales, chatbots deliver real-time product recommendations, pricing guidance, and quote generation.

Internally, they’re helping employees find answers instantly—from answering HR policy questions to searching technical docs or summarizing meeting notes. Across industries like financial services, healthcare, retail, and technology, chatbots have become essential tools for improving efficiency and delivering better user experiences.

The results speak for themselves: Organizations using AI-powered chatbots are saving up to 2.5 billion hours of employee time annually and boosting both productivity and customer satisfaction.

Why so many chatbot projects stall

While adoption is high, success isn't guaranteed. Getting a chatbot to work reliably in production is harder than it seems. Most teams run into the same four challenges:

- Performance latency: LLMs are powerful but often slow. And when users expect instant answers, every second of delay erodes trust.

- Lack of memory: Many bots can’t recall past interactions, making it impossible to have natural, multi-turn conversations.

- Escalating costs: Without caching or reuse, a high volume of LLM calls can lead to ballooning operational costs.

- Fragmented infrastructure: Multiple chatbot stacks across departments lead to duplicated effort and no unified governance.

How Redis helps build faster, more intelligent chatbots

Redis helps devs and businesses solve these problems by powering modern chatbot architectures with a combination of real-time speed, long- and short-term memory, semantic caching, and AI control gateways.

These capabilities make it possible to build chatbots that are production-grade from day one. Here’s what Redis brings to the table:

- The world’s fastest vector database for retrieval-augmented generation (RAG)

- Persistent long-term memory for contextual, multi-turn conversations

- Short-term memory to help background agents collaborate efficiently

- Semantic caching to reduce redundant LLM calls and cut costs by up to 90%

- AI gateways for observability, access control, and governance

And our customers are seeing measurable results. Asurion improved customer service response times by more than 50% using Redis-powered GenAI, while Relevance AI reduced query latency from two seconds to just ten milliseconds, dramatically improving the user experience.

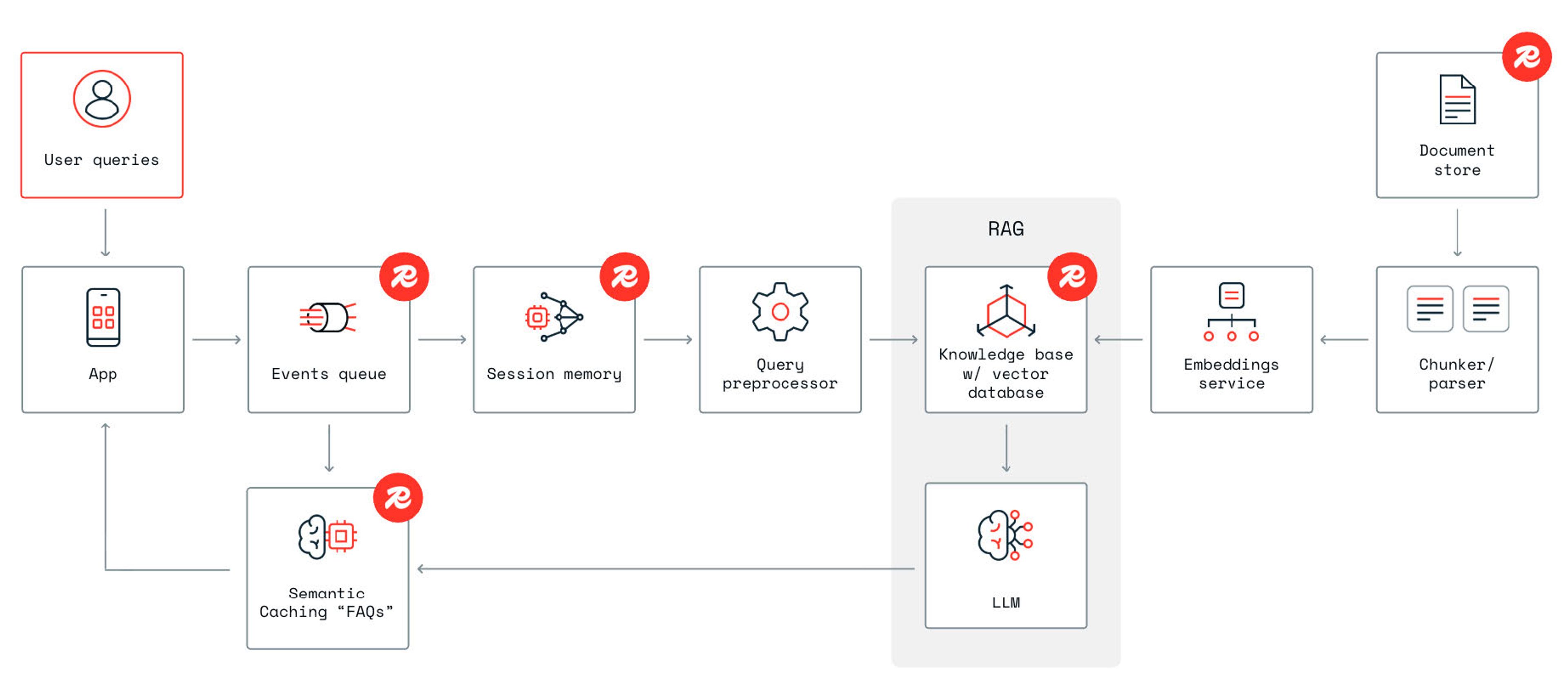

Inside a Redis-powered chatbot architecture

To understand how Redis fits in, let’s trace what happens behind the scenes when a user asks a chatbot such as ChatGPT for a 2-day San Diego itinerary.

- User query ingestion: When a user submits a query, such as the request for a 2-day San Diego itinerary, it first enters an event queue that manages concurrency and keeps processing reliable at scale. The system then parses the query to identify its key concepts and converts it into a vector embedding. From this point, Redis does most of the work behind the scenes.

- Semantic cache lookup: Before performing any new computation, Redis checks whether a similar query has already been answered. If a cached response exists, Redis serves it immediately and the remaining steps are skipped.

- Vector retrieval with Redis: On a cache miss, Redis searches preprocessed content stored as embeddings for semantically relevant information, such as restaurants, parks, and travel blogs.

- Session memory retrieval: Redis retrieves prior conversation context (e.g., “traveling with kids”) to enrich the current prompt and maintain continuity.

- RAG pipeline: The query, retrieved documents, and session memory are combined into a single prompt for the LLM, ensuring grounded and context-aware responses.

- Response generation & storage: The LLM generates the final answer. Redis caches it for reuse on similar future queries and adds it to the session history to preserve continuity.
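To make the flow above concrete, here is a minimal in-memory sketch in Python. It is not Redis code and does not use the Redis or RedisVL APIs: plain Python lists, dicts, and deques stand in for Redis, a bag-of-words `embed()` function stands in for a real embedding model, and `ChatPipeline`, `fake_llm`, the 0.8 similarity threshold, and the other names and parameters are hypothetical. The sketch checks the semantic cache first, then retrieves context, folds in recent session history, builds the prompt, and finally caches the answer and records the turn.

```python
import math
from collections import Counter, deque
from typing import Callable


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().replace("?", "").split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ChatPipeline:
    """In-memory sketch of the request flow; each Python structure stands in for Redis."""

    def __init__(self, docs: list[str], llm: Callable[[str], str],
                 cache_threshold: float = 0.8, top_k: int = 2, history_turns: int = 4):
        self.docs = [(d, embed(d)) for d in docs]  # preprocessed content + embeddings
        self.llm = llm
        self.cache: list[tuple[Counter, str]] = []  # semantic cache: (query vector, answer)
        self.sessions: dict[str, deque] = {}  # per-session conversation history
        self.cache_threshold = cache_threshold
        self.top_k = top_k
        self.history_turns = history_turns

    def answer(self, session_id: str, query: str) -> str:
        q = embed(query)  # 1. Embed the query.
        history = self.sessions.setdefault(session_id, deque(maxlen=self.history_turns))

        # 2. Semantic cache lookup: reuse the answer to a sufficiently similar query.
        for vec, cached in self.cache:
            if cosine(q, vec) >= self.cache_threshold:
                history.append((query, cached))
                return cached

        # 3. Vector retrieval: top-k documents by similarity to the query.
        ranked = sorted(self.docs, key=lambda d: cosine(q, d[1]), reverse=True)
        context = [text for text, _ in ranked[: self.top_k]]

        # 4. Build a RAG prompt from session history, retrieved context, and the query.
        prompt = "\n".join(
            [f"User: {u}\nAssistant: {a}" for u, a in history]
            + [f"Context: {c}" for c in context]
            + [f"Question: {query}"]
        )

        # 5. Generate, then 6. cache the answer and record the turn in session memory.
        response = self.llm(prompt)
        self.cache.append((q, response))
        history.append((query, response))
        return response


# Usage with a fake LLM that records each prompt it receives (hypothetical).
calls: list[str] = []


def fake_llm(prompt: str) -> str:
    calls.append(prompt)
    return f"answer #{len(calls)}"


bot = ChatPipeline(
    docs=["Balboa Park has museums and gardens",
          "La Jolla Cove is great for kids and seals",
          "Gaslamp Quarter restaurants open late"],
    llm=fake_llm,
)
first = bot.answer("s1", "Plan a 2-day San Diego itinerary with kids")
repeat = bot.answer("s1", "Plan a 2-day San Diego itinerary with my kids")  # cache hit, no LLM call
follow_up = bot.answer("s1", "Which restaurants open late?")  # cache miss, prompt includes history
```

Caching answers globally while keeping history per session is a simplification; a real deployment would likely scope cache entries (for example per tenant or per user context) so that one session’s personalized answer is not served to another.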

Why choose Redis when building chatbots?

Redis is the fastest vector database

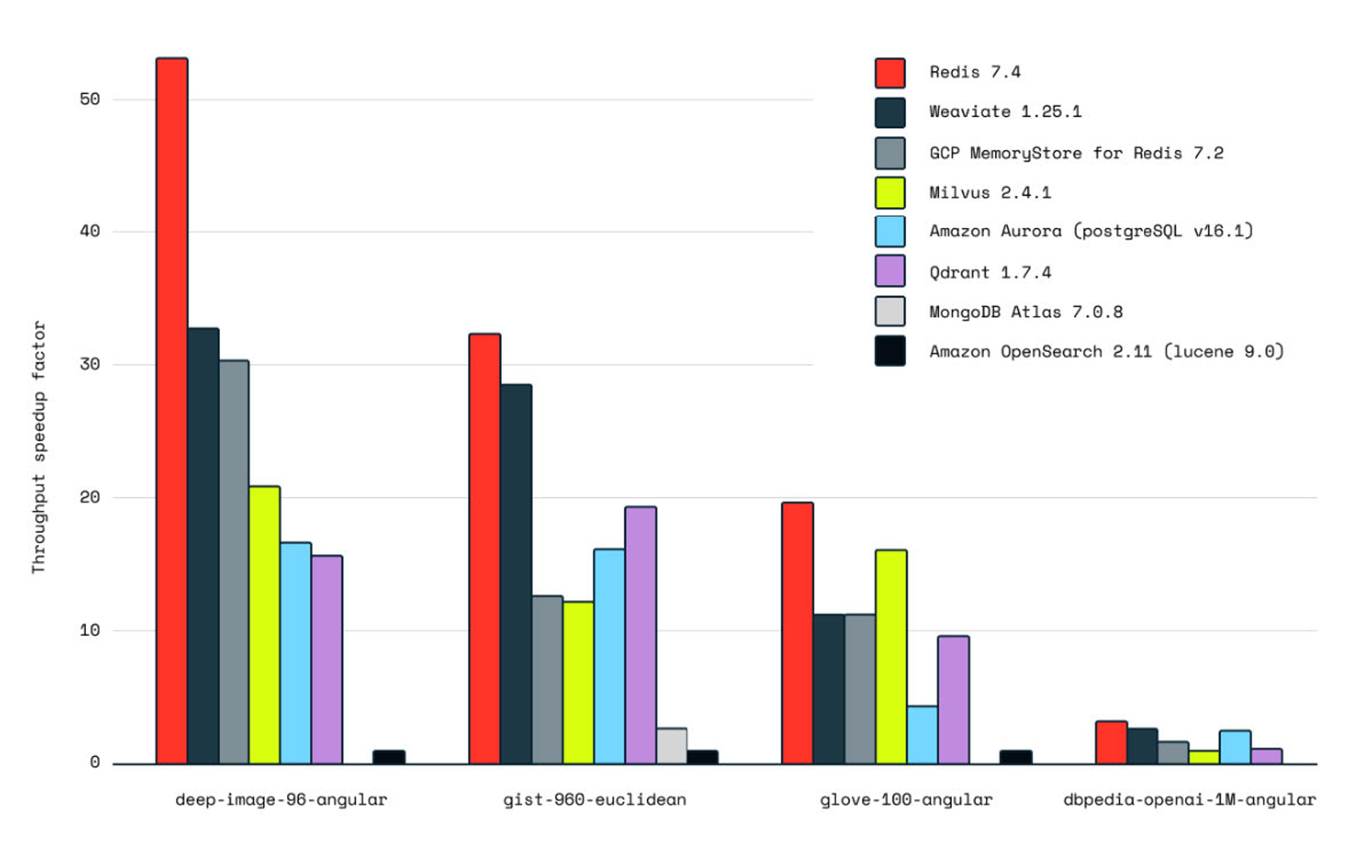

A vector database allows chatbots to understand meaning, not just keywords. Independent benchmarks show Redis outperforming other vector databases by up to 21×.

With Redis, a query like “update payment settings” will also match “change billing info”—thanks to vector embeddings. Redis’ hybrid search then combines vector (semantic) and keyword (exact) matching for both precision and flexibility.

That means chatbots can handle both “How do I update my profile?” and “Show me document ID #48392”—all with sub-millisecond latency.
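One simple way to picture hybrid search is a weighted score that mixes vector similarity with exact keyword overlap, plus an exact filter for identifiers like “#48392”. The Python sketch below illustrates that idea only; it is not how Redis computes hybrid scores internally, the three-dimensional vectors are made-up stand-ins for real embeddings, and `Doc`, `hybrid_search`, and the `alpha` weight are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    text: str
    vector: list[float]  # precomputed embedding; toy values in this example


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def keyword_score(query: str, text: str) -> float:
    """Fraction of query words that appear verbatim in the document text."""
    q, t = set(query.lower().split()), set(text.lower().split())
    return len(q & t) / len(q) if q else 0.0


def hybrid_search(query: str, query_vec: list[float], docs: list[Doc],
                  alpha: float = 0.7, k: int = 1) -> list[str]:
    """Exact filter on '#ID' tokens, then rank by a weighted mix of
    semantic similarity (alpha) and keyword overlap (1 - alpha)."""
    ids = {tok.lstrip("#") for tok in query.split() if tok.startswith("#")}
    candidates = [d for d in docs if d.doc_id in ids] or docs  # fall back to all docs
    scored = [
        (alpha * cosine(query_vec, d.vector) + (1 - alpha) * keyword_score(query, d.text), d.doc_id)
        for d in candidates
    ]
    scored.sort(key=lambda s: s[0], reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


docs = [
    Doc("1001", "Change billing info in account settings", [0.9, 0.1, 0.0]),
    Doc("1002", "Reset your password from the login page", [0.1, 0.9, 0.0]),
    Doc("48392", "Quarterly compliance report", [0.0, 0.1, 0.9]),
]
# Only "settings" overlaps with doc 1001's wording; the (toy) vectors carry the match.
semantic_hit = hybrid_search("update payment settings", [0.85, 0.15, 0.05], docs)
exact_hit = hybrid_search("Show me document ID #48392", [0.3, 0.3, 0.3], docs)
print(semantic_hit)  # ['1001']
print(exact_hit)  # ['48392']
```

Raising `alpha` favors meaning over wording; lowering it favors exact terms such as product codes or error messages.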

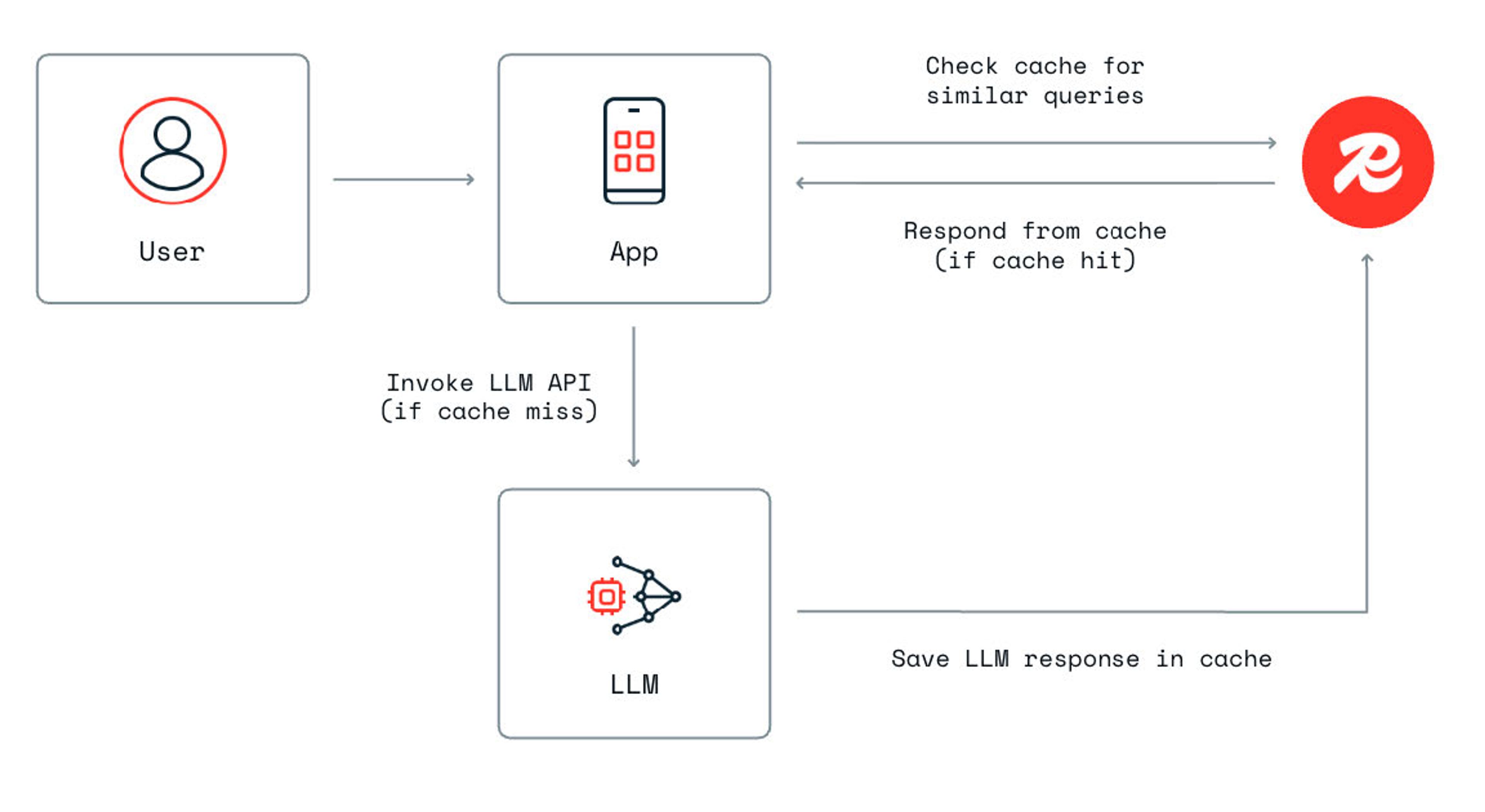

Semantic caching reduces costs and latency

Traditional caching only recognizes identical queries. Redis’ semantic caching identifies when two questions mean the same thing, even if phrased differently.

Example:

- “How do I reset my password?”

- “Can I change my login credentials?”

Redis recognizes that both express the same intent and returns the cached answer immediately. This reduces response times by up to 15× and LLM costs by up to 90%.
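The core of a semantic cache is a similarity threshold: embed the incoming question, find the closest previously answered question, and reuse its answer only if the two are similar enough. Below is a toy Python version that contrasts it with an exact-match dictionary. The embeddings are invented numbers rather than real model output, the cached answer is a placeholder, and `SemanticCache` and the 0.9 threshold are hypothetical; Redis and LangCache have their own APIs and defaults.

```python
import math
from typing import Optional


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    denom = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / denom if denom else 0.0


class SemanticCache:
    """Toy semantic cache: serve a stored answer when a new query's embedding
    is at least `threshold` cosine-similar to a previously answered query."""

    def __init__(self, threshold: float = 0.9):
        self.threshold = threshold
        self.entries: list[tuple[list[float], str]] = []

    def store(self, query_vec: list[float], response: str) -> None:
        self.entries.append((query_vec, response))

    def check(self, query_vec: list[float]) -> Optional[str]:
        best, best_sim = None, -1.0
        for vec, response in self.entries:  # linear scan; a vector index would replace this
            sim = cosine(query_vec, vec)
            if sim > best_sim:
                best, best_sim = response, sim
        return best if best_sim >= self.threshold else None


# Made-up embeddings standing in for a real model's output.
vectors = {
    "How do I reset my password?": [0.92, 0.35, 0.10],
    "Can I change my login credentials?": [0.88, 0.42, 0.12],
    "What are your store hours?": [0.05, 0.10, 0.99],
}
answer = "Placeholder answer about resetting a password"

exact_cache = {"How do I reset my password?": answer}
semantic_cache = SemanticCache(threshold=0.9)
semantic_cache.store(vectors["How do I reset my password?"], answer)

paraphrase = "Can I change my login credentials?"
print(exact_cache.get(paraphrase))  # None: string keys must match exactly
print(semantic_cache.check(vectors[paraphrase]))  # cached answer: similarity is above 0.9
print(semantic_cache.check(vectors["What are your store hours?"]))  # None: different intent
```

The threshold is the main tuning knob: set it too low and questions with different intent start sharing answers; set it too high and paraphrases miss the cache.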

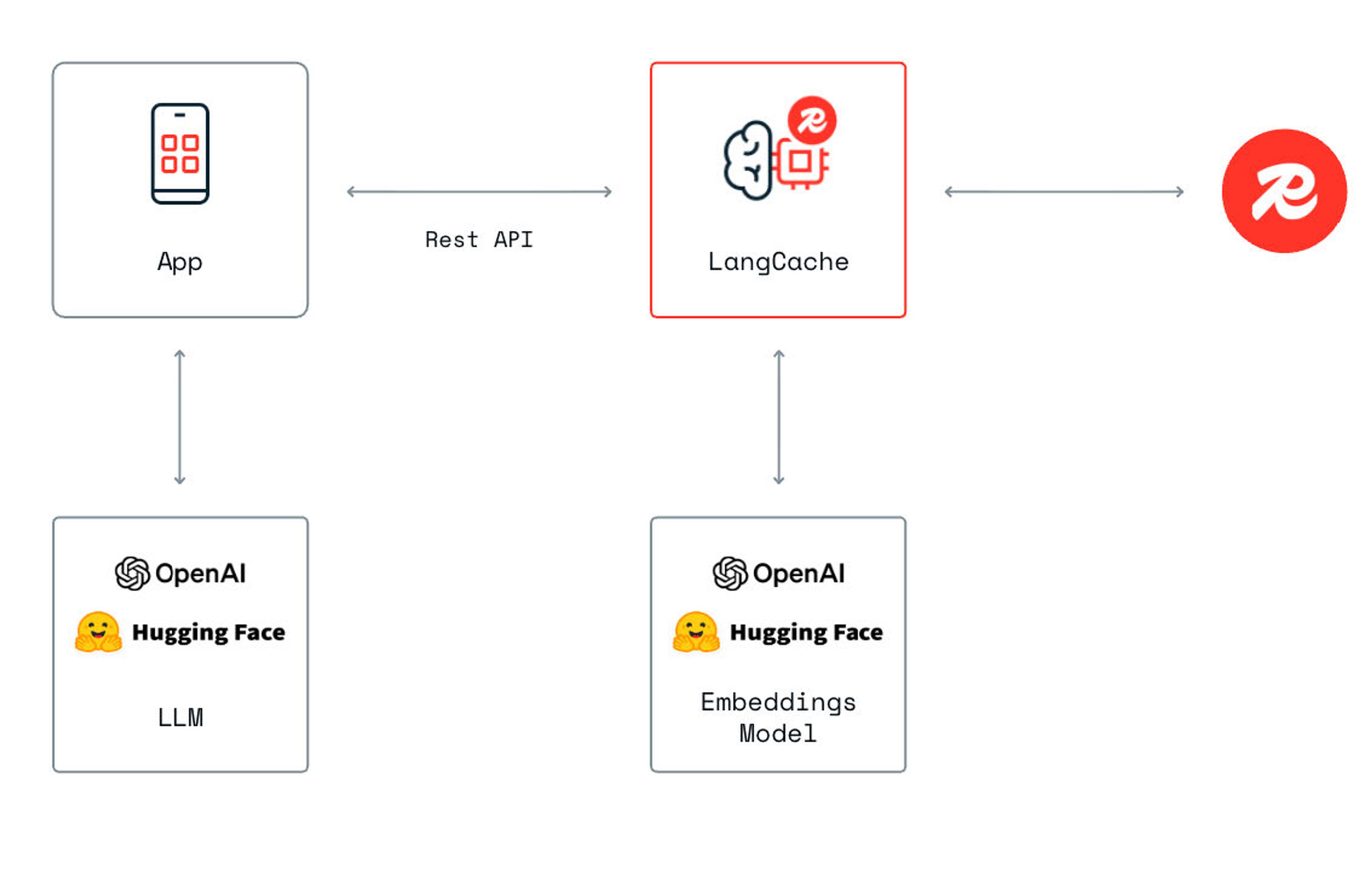

LangCache offers fully managed semantic caching

For teams who want the benefits of semantic caching without managing infrastructure, Redis offers LangCache—a fully managed caching service.

LangCache automatically manages storage, similarity checks, and LLM fallbacks, all exposed through a simple REST API.

Key benefits:

- No infrastructure overhead – Fully managed, scalable, and reliable.

- Observability and metrics – Monitor cache hit rates and query efficiency.

- Next-gen features – “LLM-as-a-judge” scoring for automated response evaluation.

LangCache entered public preview in September 2025 as part of Redis’ Fall Release, giving teams a quick way to improve chatbot performance and efficiency.

RedisVL is the developer gateway to Redis-powered AI

RedisVL—the Redis Vector Library—is an open-source Python client that simplifies integration with Redis. It works seamlessly with frameworks like LangChain, LlamaIndex, and LiteLLM, giving devs an easy path to implement RAG, vector search, and chat memory directly from Python.

RedisVL abstracts away schema creation and low-level Redis commands, letting devs focus on building, not configuring. It’s free, fast, and built for real-world AI apps.

Redis integrates into a robust GenAI ecosystem

Redis integrates seamlessly with the modern GenAI stack—including LangChain, LangGraph, LlamaIndex, Amazon Bedrock, and more. It doesn’t replace these tools—it powers them.

Whether you’re orchestrating multi-agent workflows, routing LLMs, or managing embeddings, Redis provides the high-speed infrastructure layer that makes it all work in real time.

How Redis helped a leading global financial institution

A leading U.S. financial institution with over $1T in assets under management (AUM) built an internal GenAI assistant to help advisors access enterprise knowledge. Their old keyword-based system (Solr) forced users to sift through irrelevant documents or skip search entirely.

By rebuilding on Redis:

- They achieved hybrid RAG search (vector and keyword) with custom ranking.

- RedisVL and LangChain accelerated development.

- Azure OpenAI integration ensured compliance.

Results:

- GenAI adoption doubled within weeks.

- 90% of responses were rated positively.

- Redis scaled effortlessly as usage grew—no rearchitecture required.

The bottom line

Redis isn’t just a database; it’s the AI infrastructure layer that makes real-time, intelligent chatbots possible. From powering retrieval-augmented generation to managing memory, cache, and hybrid search, Redis gives devs and enterprises the tools to deliver chatbots that respond faster, scale smarter, and cost less.

If you’re building a chatbot for your customers or employees, or one that works over your own data, Redis is the engine that makes it real.

Get started with Redis today

Speak to a Redis expert and learn more about enterprise-grade Redis today.