How to Build a RAG GenAI Chatbot Using Vector Search with LangChain and Redis

#What you will learn in this tutorial

In this tutorial, you'll learn how to build a GenAI chatbot using LangChain and Redis. You'll also learn how to use OpenAI's language model to generate responses to user queries and how to use Redis to store and retrieve data.

Here's what's covered:

- E-Commerce App: A sample e-commerce application where users can search for products, ask questions about them, add them to their cart, and purchase them.

- Chatbot Architecture: The architecture of the chatbot, including the flow diagram and a sample user prompt with its AI response.

- Database setup: Generating OpenAI embeddings for products and storing them in Redis.

- Setting up the chatbot API: Creating a chatbot API that uses OpenAI and Redis to answer user questions and recommend products.

#Terminology

GenAI is a category of artificial intelligence that specializes in creating new content based on pre-existing data. It can generate a wide array of content types, including text, images, videos, sounds, code, 3D designs, and other media formats. Unlike traditional AI models that focus on analyzing and interpreting existing data, GenAI models learn from existing data and then use their knowledge to generate something entirely new.

LangChain is an innovative library for building language model apps. It offers a structured way to combine different components like language models (e.g., OpenAI's models), storage solutions (like Redis), and custom logic. This modular approach facilitates the creation of sophisticated AI apps, including chatbots.

OpenAI provides advanced language models like GPT-3, which have revolutionized the field with their ability to understand and generate human-like text. These models form the backbone of many modern AI apps, including chatbots.

#Microservices architecture for an e-commerce application

GITHUB CODE: Below is the command to clone the source code for the application used in this tutorial:

git clone --branch v9.2.0 https://github.com/redis-developer/redis-microservices-ecommerce-solutions

Let's take a look at the architecture of the demo application:

- products service: handles querying products from the database and returning them to the frontend

- orders service: handles validating and creating orders

- order history service: handles querying a customer's order history

- payments service: handles processing orders for payment

- api gateway: unifies the services under a single endpoint

- mongodb/postgresql: serves as the write-optimized database for storing orders, order history, products, etc.

INFO: You don't need to use MongoDB or PostgreSQL as your write-optimized database in the demo application; you can use any other Prisma-supported database as well. This is just an example.

#E-commerce application frontend using Next.js and Tailwind

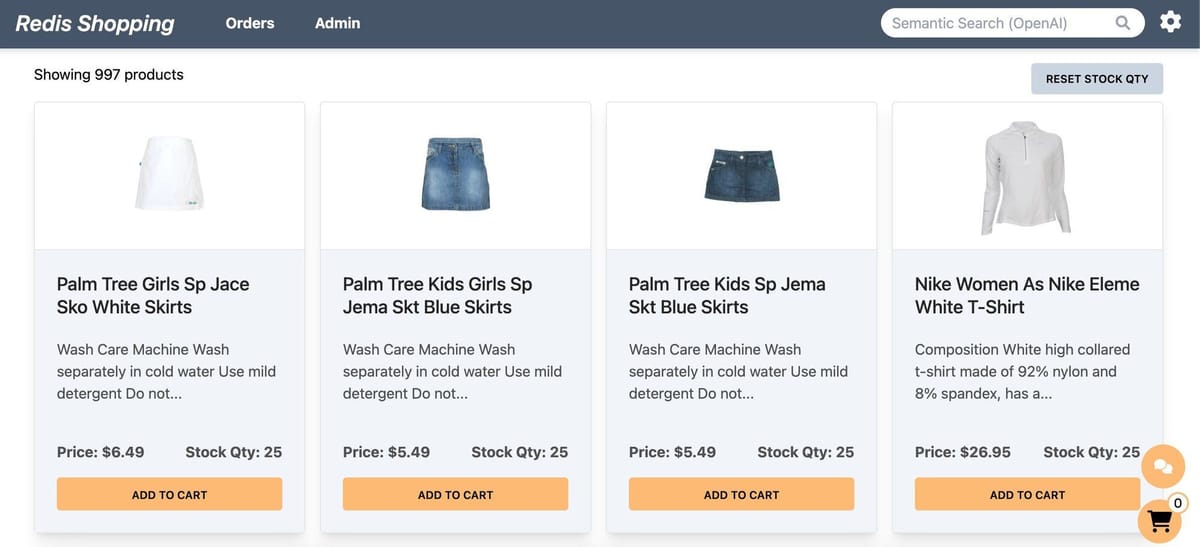

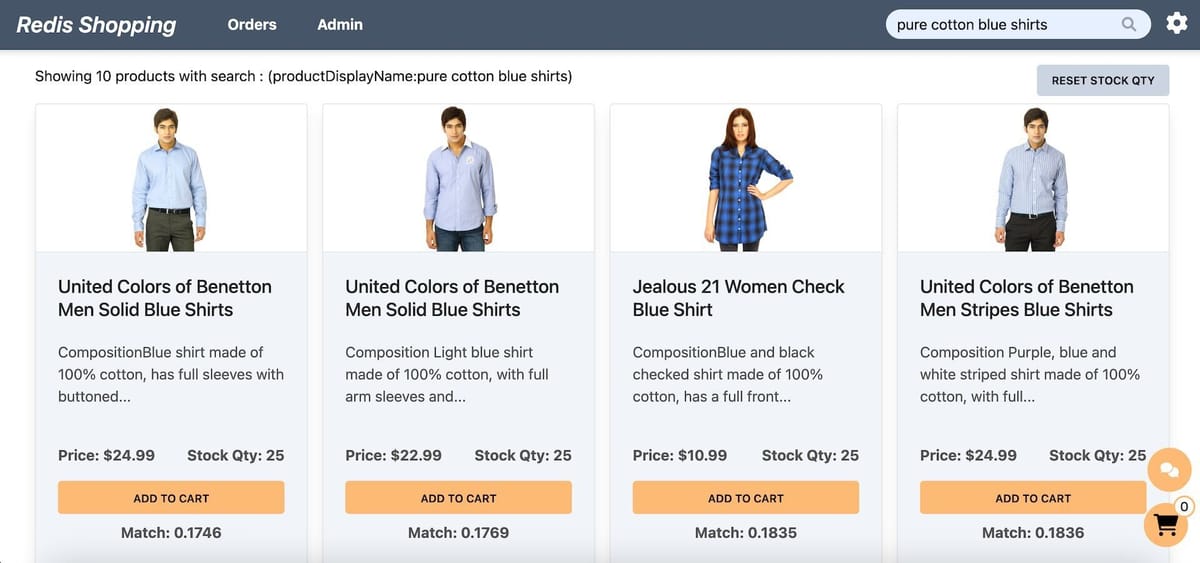

The e-commerce microservices application consists of a frontend, built using Next.js with TailwindCSS. The application backend uses Node.js. The data is stored in Redis and either MongoDB or PostgreSQL, using Prisma. Below are screenshots showcasing the frontend of the e-commerce app.

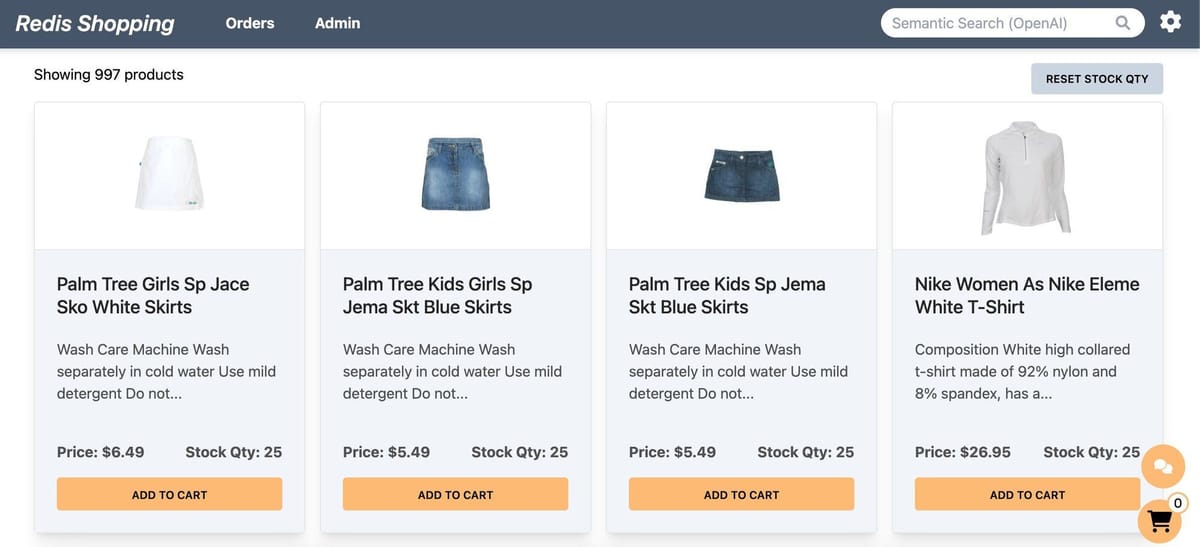

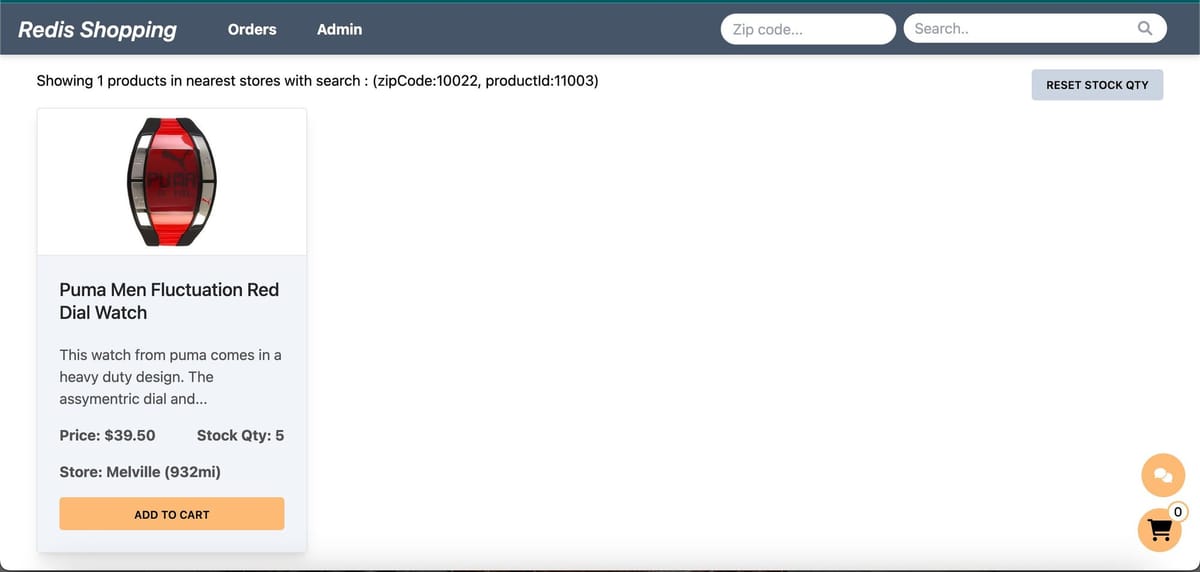

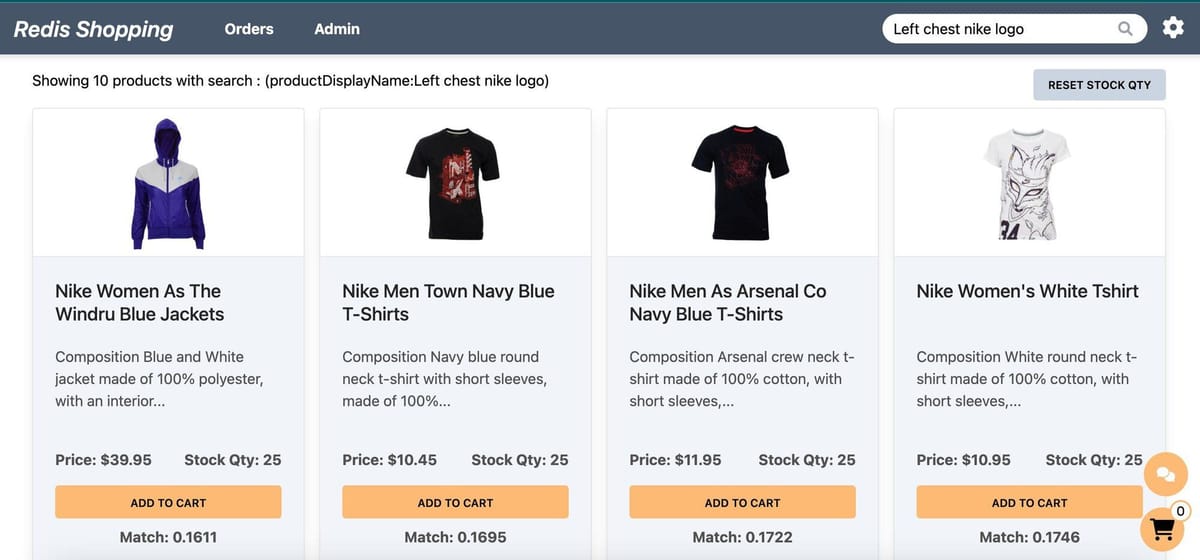

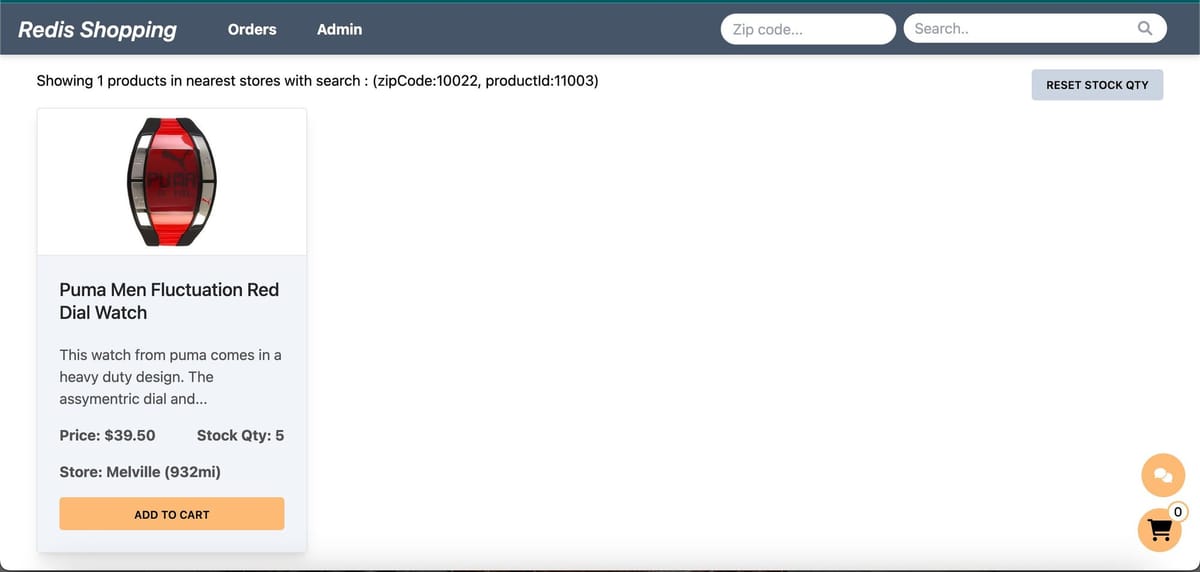

- Dashboard: Displays a list of products with different search functionalities, configurable in the settings page.

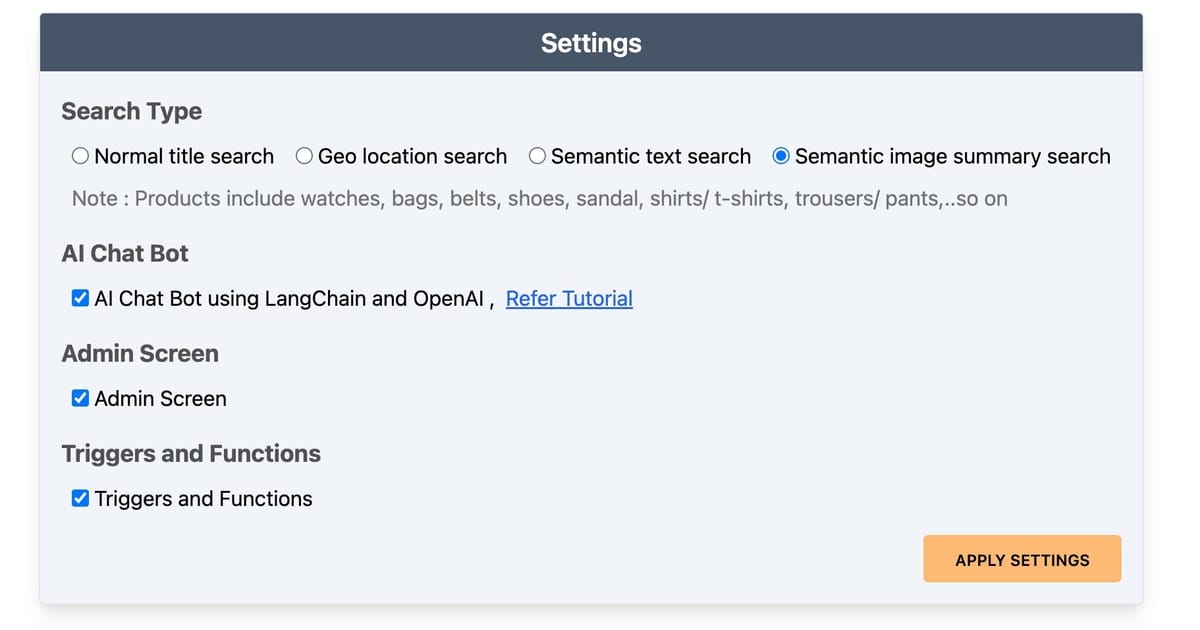

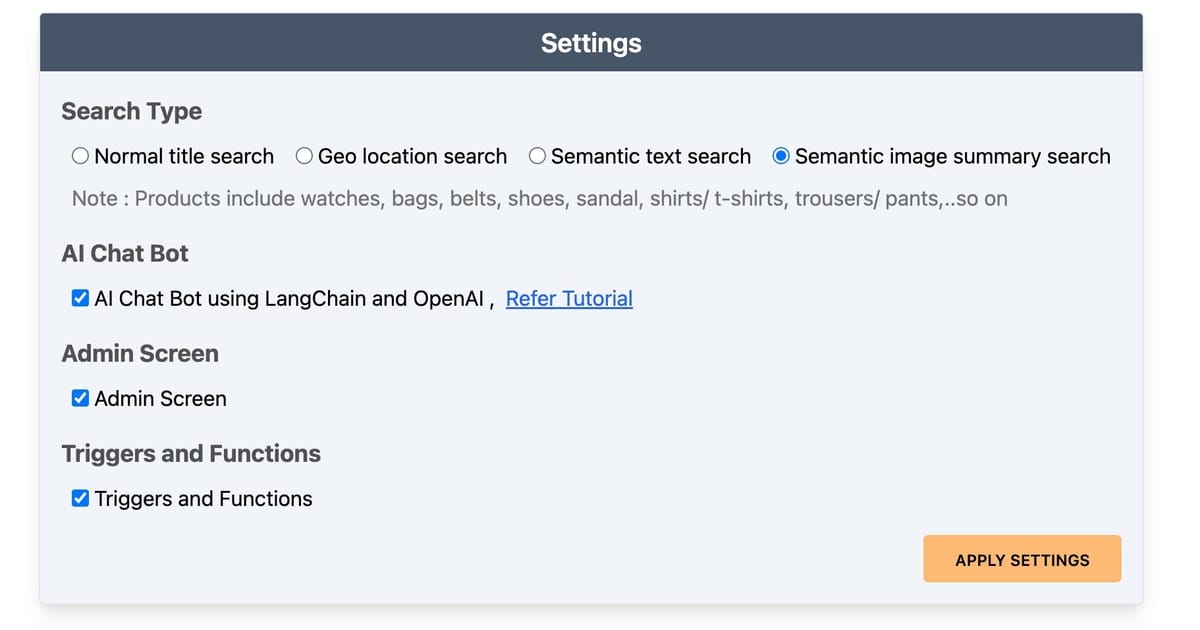

- Settings: Accessible by clicking the gear icon at the top right of the dashboard. Control the search bar, chatbot visibility, and other features here.

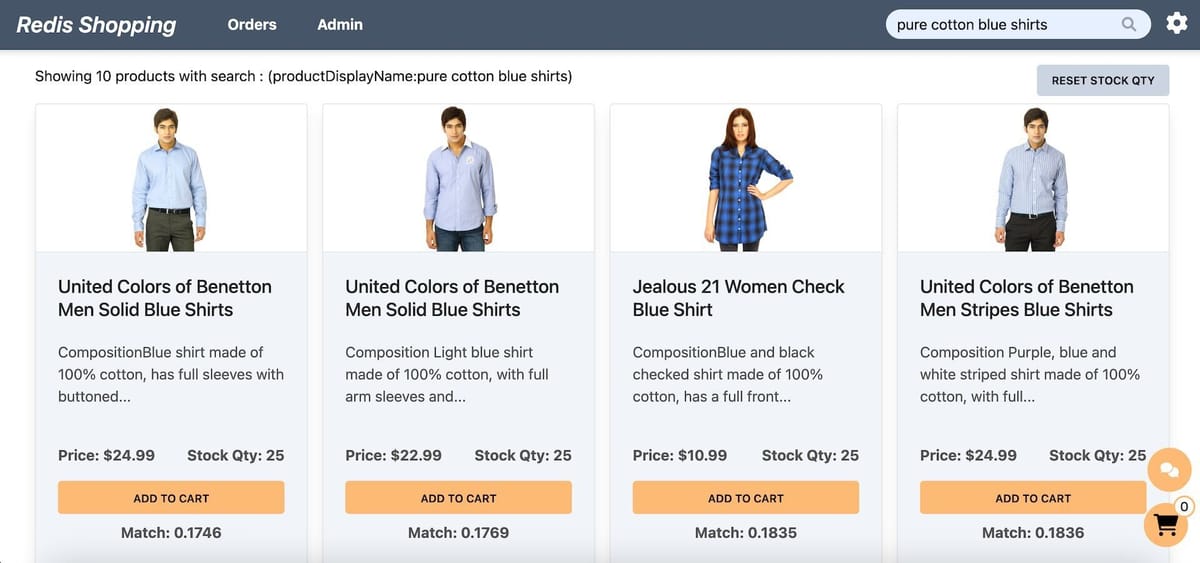

- Dashboard (Semantic Text Search): Configured for semantic text search, the search bar enables natural language queries. Example: "pure cotton blue shirts."

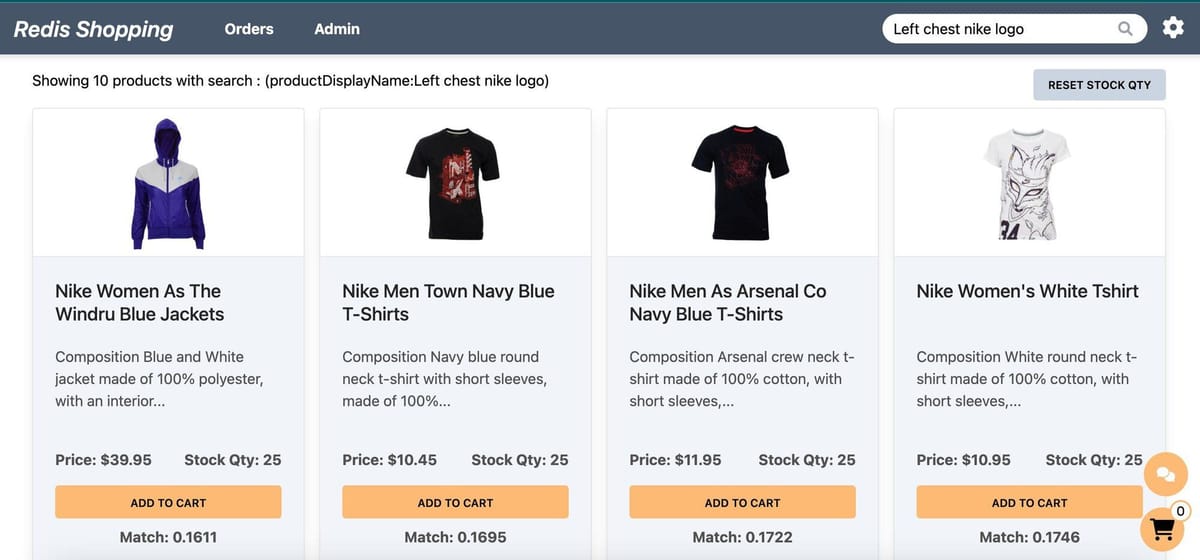

- Dashboard (Semantic Image-Based Queries): Configured for semantic image summary search, the search bar allows for image-based queries. Example: "Left chest nike logo."

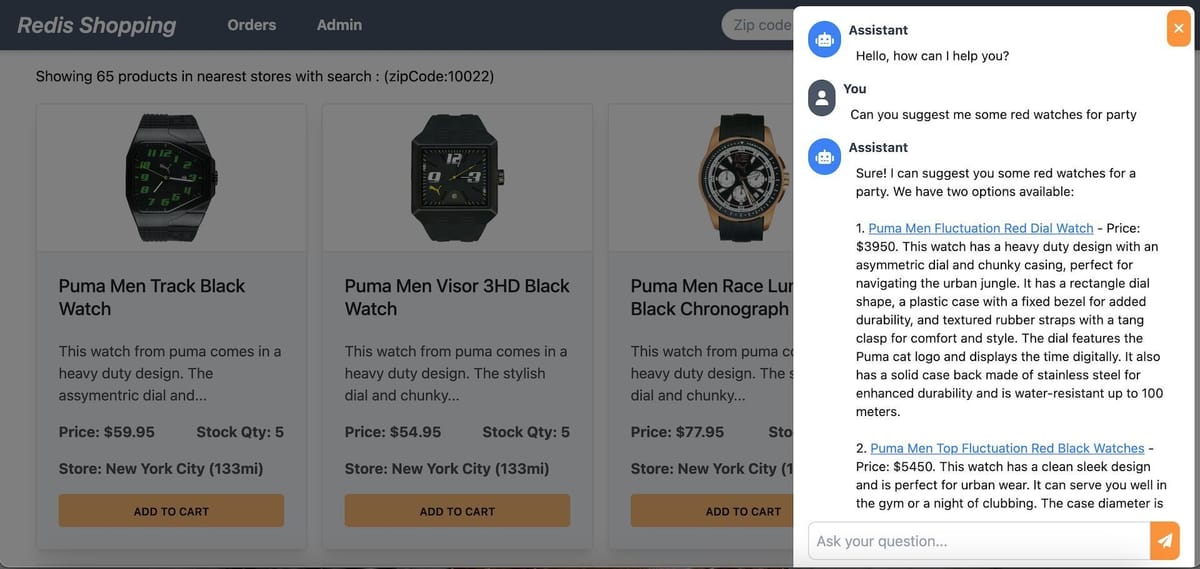

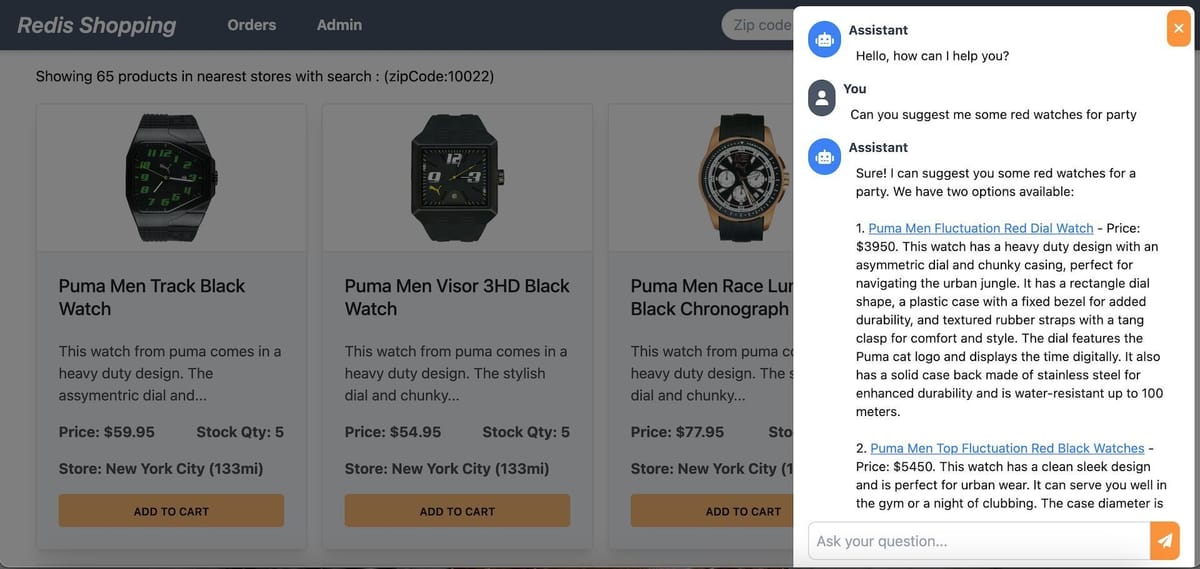

- Chat Bot: Located at the bottom right corner of the page, assisting in product searches and detailed views.

Selecting a product in the chat displays its details on the dashboard.

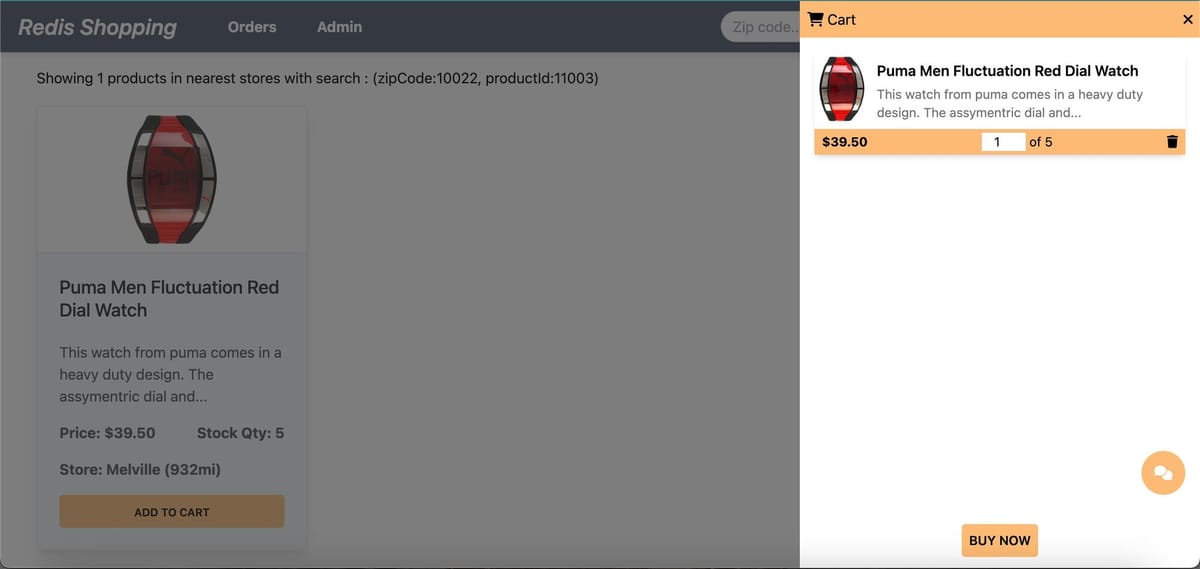

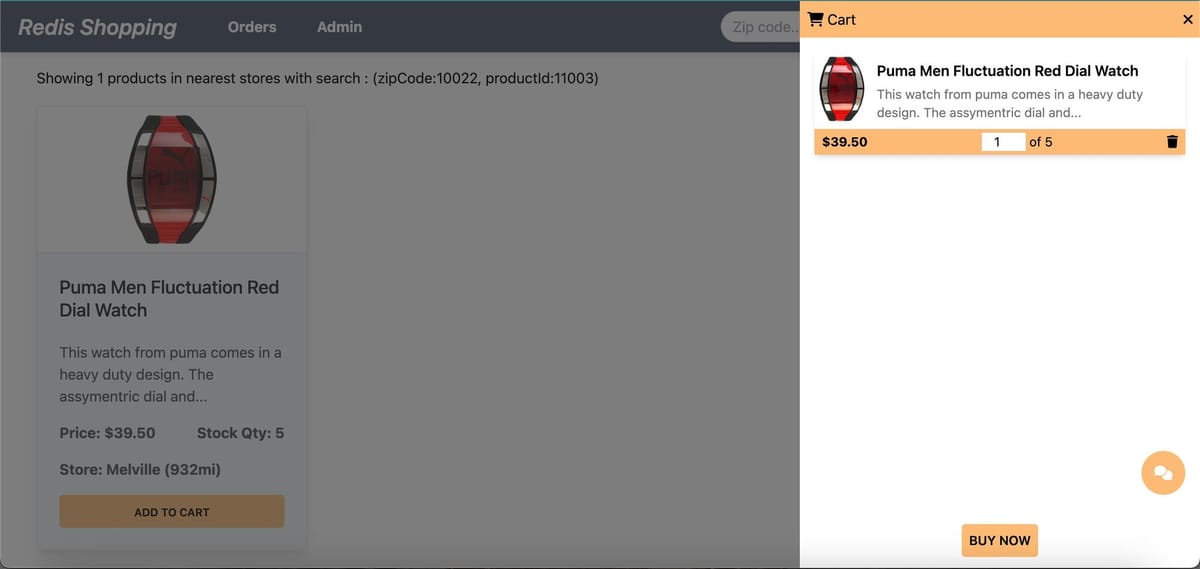

- Shopping Cart: Add products to the cart and check out using the "Buy Now" button.

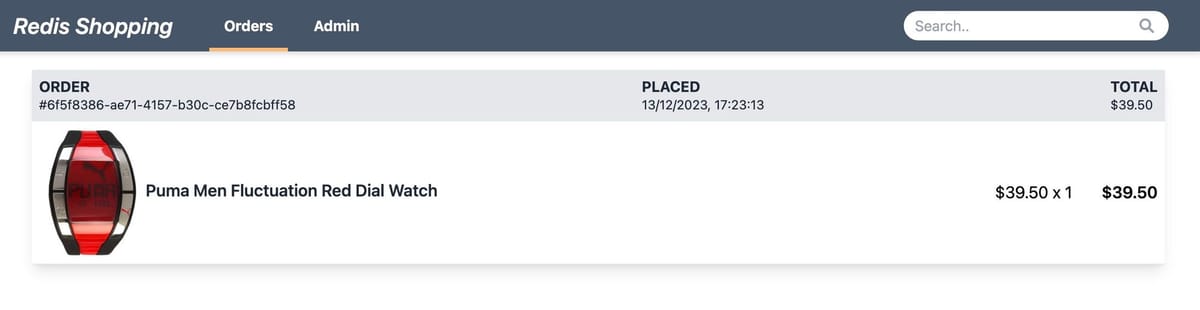

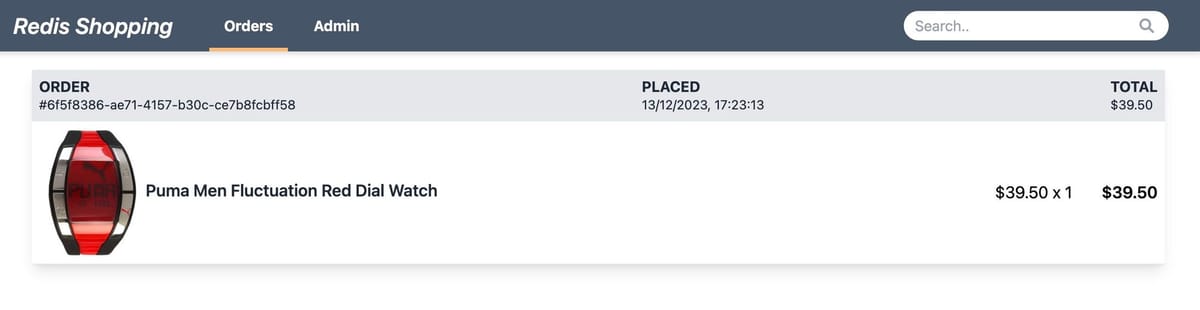

- Order History: Post-purchase, the 'Orders' link in the top navigation bar shows the order status and history.

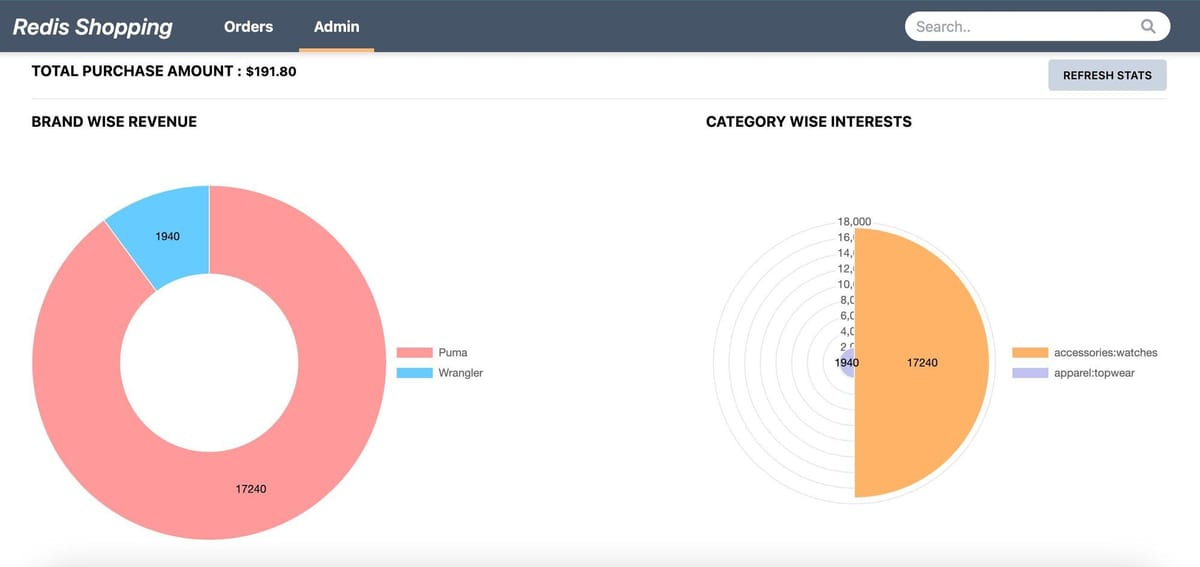

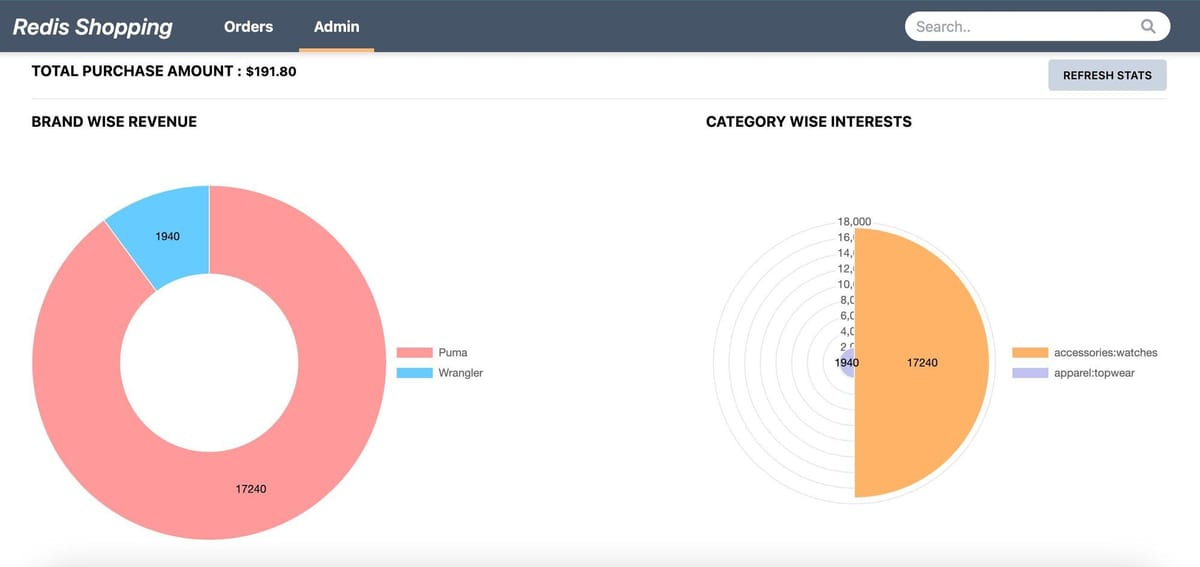

- Admin Panel: Accessible via the 'admin' link in the top navigation. Displays purchase statistics and trending products.

#Chatbot architecture

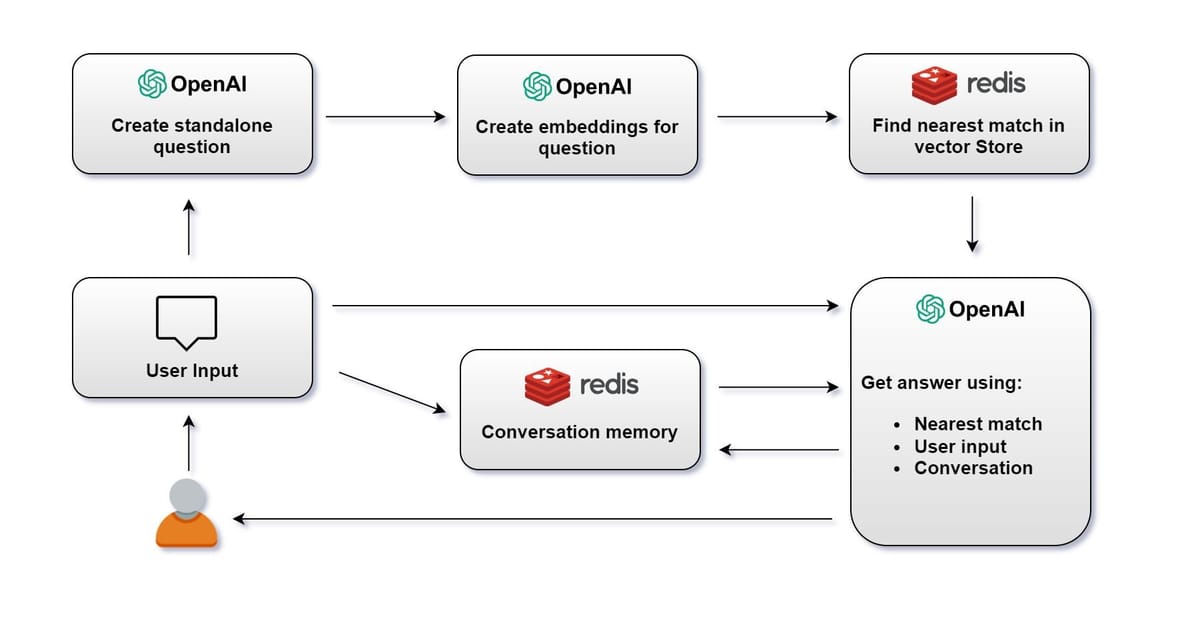

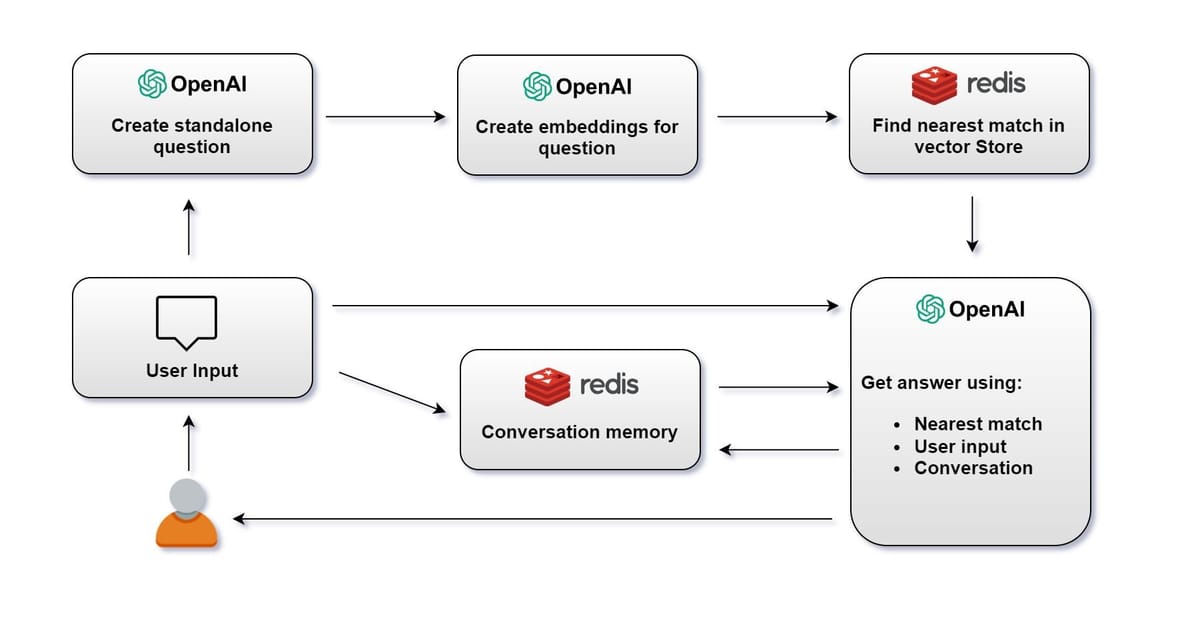

#Flow diagram

1> Create Standalone Question: Create a standalone question using OpenAI's language model.

A standalone question is just a question reduced to the minimum number of words needed to express the request for information.

2> Create Embeddings for Question: Once the question is created, OpenAI's language model generates an embedding for the question.

3> Find Nearest Match in Redis Vector Store: The embedding is then used to query the Redis vector store. The system searches for the nearest match to the question embedding among the stored vectors.

4> Get Answer: With the user's initial question, the nearest match from the vector store, and the conversation memory, OpenAI's language model generates an answer. This answer is then provided to the user.

Note: The system maintains a conversation memory, which tracks the ongoing conversation's context. This memory is crucial for ensuring the continuity and relevance of the conversation.

5> User Receives Answer: The answer is sent back to the user, completing the interaction cycle. The conversation memory is updated with this latest exchange to inform future responses.
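The five steps above can be sketched as a single function that wires the stages together. This is a minimal illustration, not the demo application's code: the `ChatbotDeps` interface, the `chatbotTurn` function, and the `ChatTurn` type are hypothetical names, and the OpenAI and Redis calls are passed in as plain async functions so the flow itself stays easy to read and test.

```typescript
// Hypothetical types and names: none of these come from the demo repository.
type ChatTurn = { role: "user" | "assistant"; text: string };

interface ChatbotDeps {
  // Step 1: rewrite the user message as a standalone question (an LLM call in practice).
  condense(userMessage: string, history: ChatTurn[]): Promise<string>;
  // Step 2: turn the standalone question into an embedding vector.
  embed(text: string): Promise<number[]>;
  // Step 3: return the nearest stored product descriptions for that vector.
  searchNearest(vector: number[], k: number): Promise<string[]>;
  // Step 4: generate the final answer from the question, matches, and memory.
  answer(input: {
    question: string;
    matches: string[];
    history: ChatTurn[];
  }): Promise<string>;
}

async function chatbotTurn(
  deps: ChatbotDeps,
  history: ChatTurn[],
  userMessage: string,
  k = 3
): Promise<{ reply: string; history: ChatTurn[] }> {
  const standalone = await deps.condense(userMessage, history);
  const vector = await deps.embed(standalone);
  const matches = await deps.searchNearest(vector, k);

  // The user's initial question (not the condensed one) is passed to the answer step.
  const reply = await deps.answer({ question: userMessage, matches, history });

  // Step 5: return a new memory that includes this exchange.
  const updated: ChatTurn[] = [
    ...history,
    { role: "user", text: userMessage },
    { role: "assistant", text: reply },
  ];
  return { reply, history: updated };
}
```

Injecting the dependencies keeps the ordering of the steps visible and lets each stage be swapped for a stub during testing. A real implementation would back `condense` and `answer` with a language model, `embed` with an embeddings model, and `searchNearest` with a Redis vector index.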

#Sample user prompt and AI response

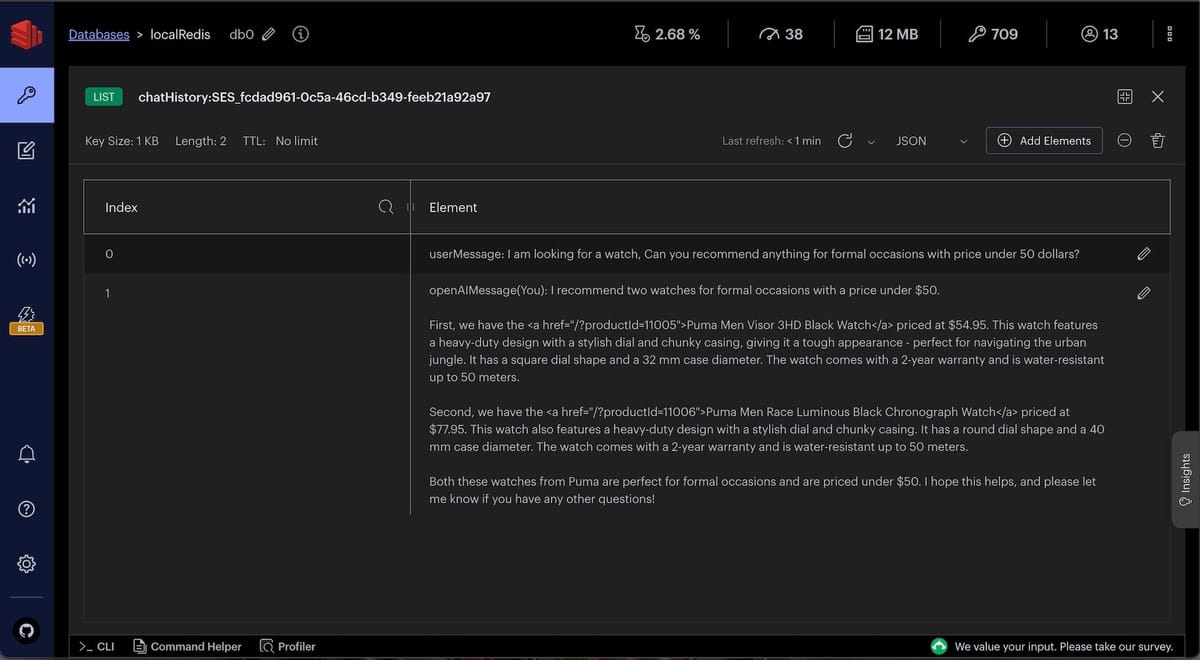

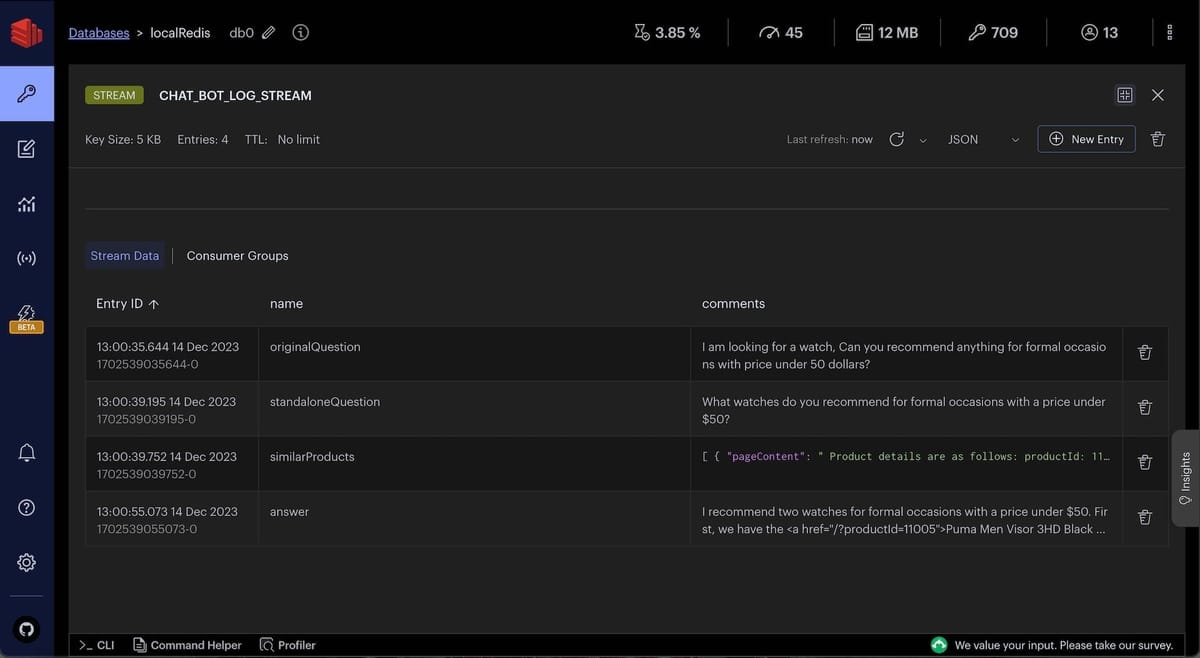

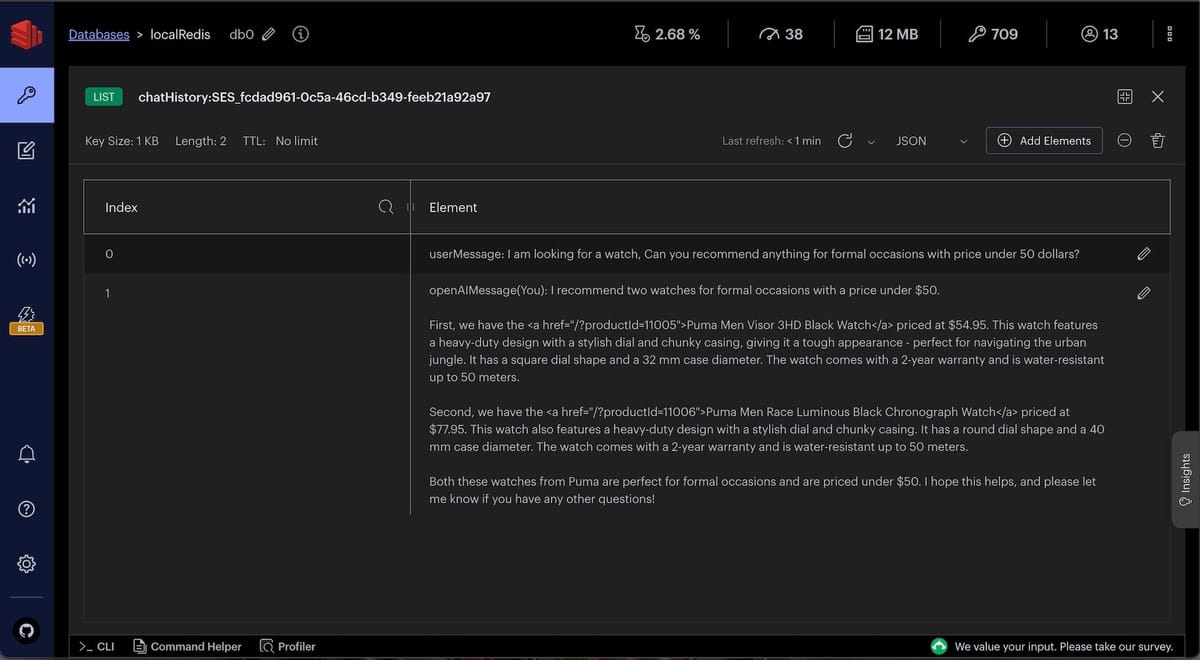

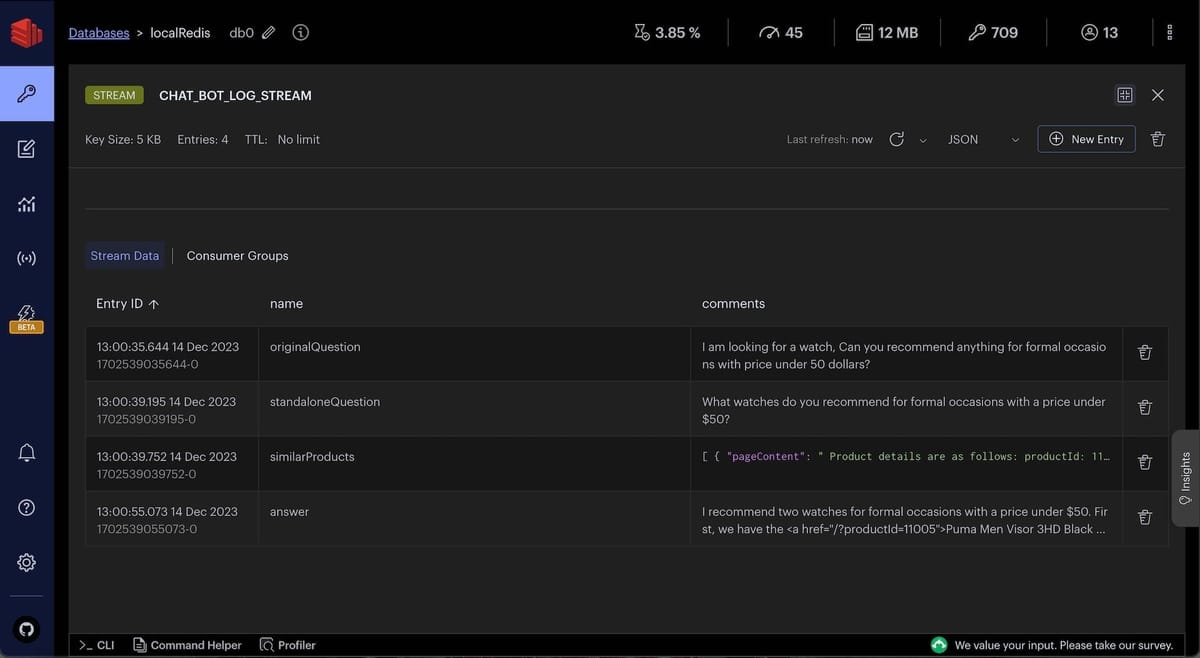

Suppose the user's OriginalQuestion is as follows:

I am looking for a watch, Can you recommend anything for formal occasions with price under 50 dollars?

The standaloneQuestion converted by OpenAI is as follows:

What watches do you recommend for formal occasions with a price under $50?

After a vector search on Redis, we get the following similarProducts:

The final OpenAI response, given the above context and earlier chat history (if any), is as follows:

#Database setup

INFO: Sign up for an OpenAI account to get the API key used in the demo (add the OPEN_AI_API_KEY variable to the .env file). You can also refer to the OpenAI API documentation for more information.

#Sample data

For the purposes of this tutorial, let's consider a simplified e-commerce context. The products JSON provided offers a glimpse into the AI search functionalities we'll be operating on.

#OpenAI embeddings seeding

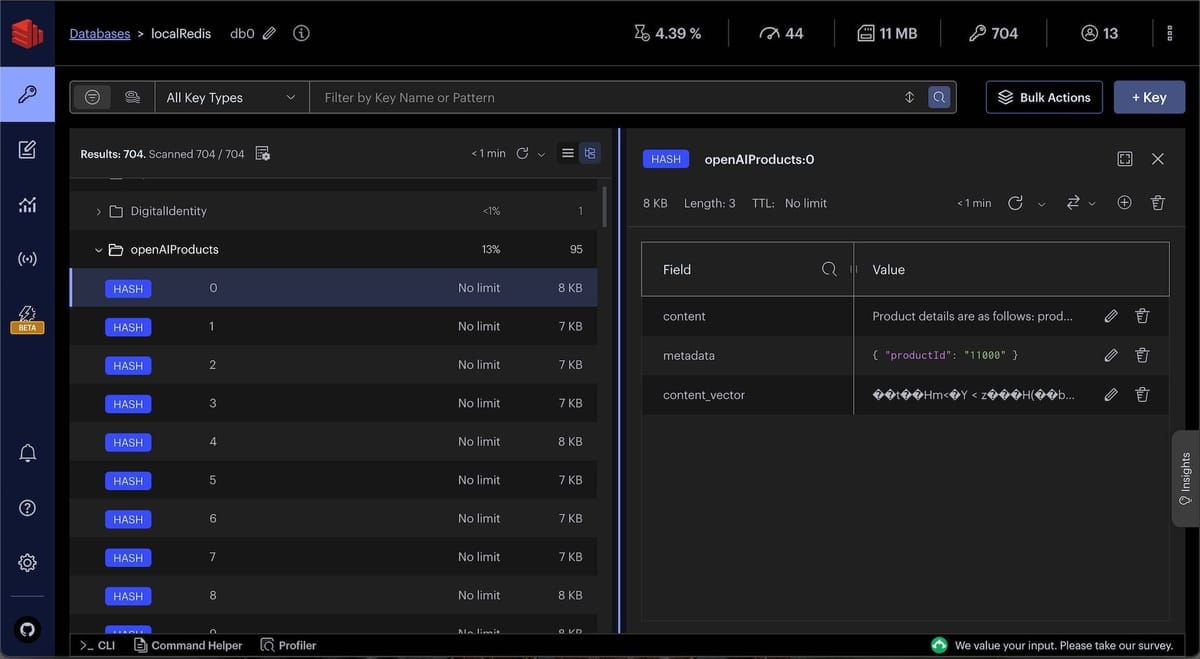

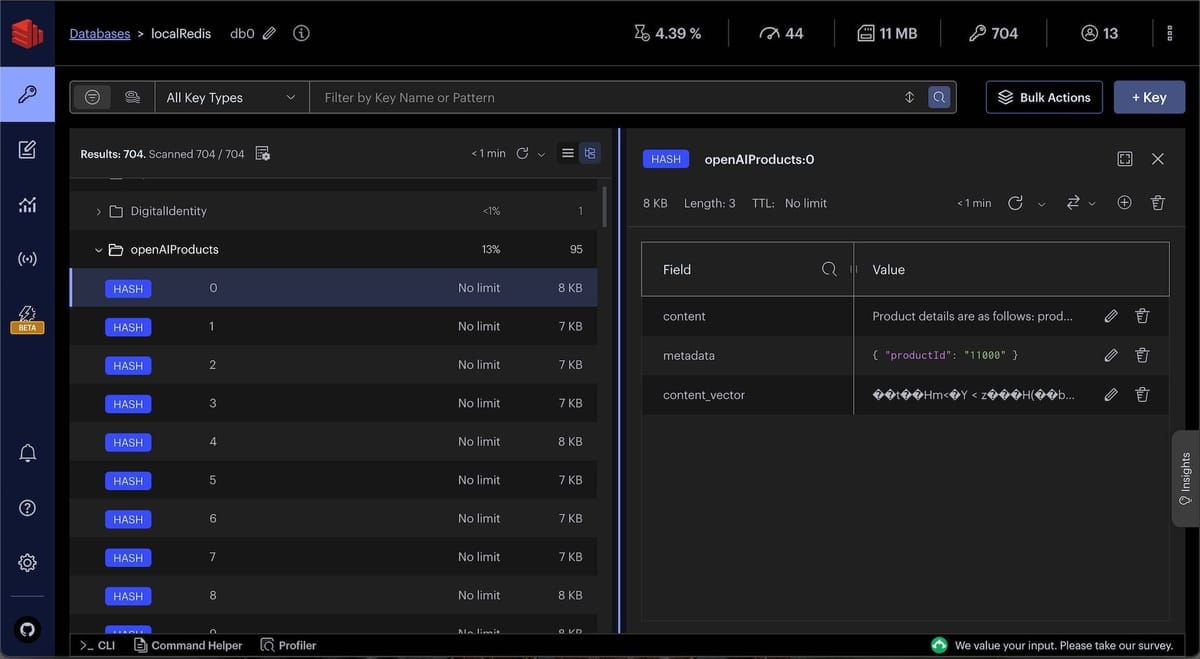

Below is the sample code to seed products data as OpenAI embeddings into Redis.

You can observe the openAIProducts JSON in Redis Insight:

TIP: Download Redis Insight to visually explore your Redis data or to engage with raw Redis commands in the workbench.
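As a rough illustration of the seeding step, the sketch below shows one way product records could be flattened into text documents and written to a vector store in batches. It is not the repository's seeding code. The `Product` fields, `toEmbeddingDocument`, `VectorStoreWriter`, and `seedProducts` are all hypothetical names, and the store is injected as an interface rather than tied to a specific client. The `pageContent`/`metadata` pair mirrors the shape LangChain uses for its documents.

```typescript
// Hypothetical product shape: the tutorial's actual products JSON is not reproduced here.
interface Product {
  id: string;
  name: string;
  price: number;
  description: string;
}

// Mirrors the pageContent/metadata shape of a LangChain document.
interface EmbeddingDocument {
  pageContent: string; // the text that gets embedded
  metadata: { productId: string }; // stored next to the vector for later lookups
}

// Flatten one product into a single block of text suitable for embedding.
function toEmbeddingDocument(p: Product): EmbeddingDocument {
  const pageContent = [
    `Product name: ${p.name}`,
    `Price: ${p.price}`,
    `Description: ${p.description}`,
  ].join("\n");
  return { pageContent, metadata: { productId: p.id } };
}

// Injected so the sketch stays independent of any particular client library.
interface VectorStoreWriter {
  addDocuments(docs: EmbeddingDocument[]): Promise<void>;
}

// Write products in batches and return how many documents were written.
async function seedProducts(
  products: Product[],
  store: VectorStoreWriter,
  batchSize = 50
): Promise<number> {
  let written = 0;
  for (let i = 0; i < products.length; i += batchSize) {
    const batch = products.slice(i, i + batchSize).map(toEmbeddingDocument);
    await store.addDocuments(batch);
    written += batch.length;
  }
  return written;
}
```

LangChain's JavaScript library ships a `RedisVectorStore` and an `OpenAIEmbeddings` class that would typically fill the role of `store` here. Import paths and constructor options vary between LangChain versions, so check the documentation for the version you install.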

#Setting up the chatbot API

Once products data is seeded as OpenAI embeddings into Redis, we can create a chatbot API to answer user questions and recommend products.

#API endpoint

The code that follows shows an example API request and response for the chatBot API:

Request:

Response:

#API implementation

When you make a request, it goes through the API gateway to the products service. Ultimately, it ends up calling a chatBotMessage function, which looks as follows:

The function below converts the userMessage to a standaloneQuestion using OpenAI:

The function below uses Redis to find similar products for the standaloneQuestion:

The function below uses OpenAI to convert the standaloneQuestion, the similar products from Redis, and other context into a human-understandable answer:

You can observe chat history and intermediate chat logs in Redis Insight:

TIP: Download Redis Insight to visually explore your Redis data or to engage with raw Redis commands in the workbench.
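To make the three stages of the API implementation more concrete, here is a dependency-free sketch of what each one computes. It is an illustration under stated assumptions, not the code from the repository: the prompt wording, the `StoredProduct` type, and the function names are all hypothetical, and the in-memory cosine-similarity search only stands in for the nearest-match lookup that Redis performs against its vector index.

```typescript
// All names below are illustrative; they are not taken from the demo repository.
type StoredProduct = { id: string; text: string; vector: number[] };

// Stage 1: prompt asking the model for a standalone question. The wording is an assumption.
function buildStandaloneQuestionPrompt(userMessage: string, history: string[]): string {
  return [
    "Rewrite the user's message as a short standalone question.",
    `Chat history: ${history.join(" | ") || "(none)"}`,
    `User message: ${userMessage}`,
    "Standalone question:",
  ].join("\n");
}

// Cosine similarity between two equal-length vectors.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("vector length mismatch");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  const denom = Math.sqrt(normA) * Math.sqrt(normB);
  return denom === 0 ? 0 : dot / denom;
}

// Stage 2: brute-force top-k search; a vector database replaces this scan with an index lookup.
function findSimilarProducts(
  query: number[],
  products: StoredProduct[],
  k: number
): StoredProduct[] {
  return products
    .map((p) => ({ p, score: cosineSimilarity(query, p.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map(({ p }) => p);
}

// Stage 3: prompt combining the question, the matched products, and the chat history.
function buildAnswerPrompt(
  question: string,
  matches: StoredProduct[],
  history: string[]
): string {
  const context = matches.map((m, i) => `${i + 1}. ${m.text}`).join("\n");
  return [
    "Answer the question using only the products listed in the context.",
    `Context:\n${context || "(no matching products)"}`,
    `Chat history: ${history.join(" | ") || "(none)"}`,
    `Question: ${question}`,
  ].join("\n\n");
}
```

Restricting the answer prompt to the retrieved products is the core idea of retrieval-augmented generation: the model is steered toward items that actually exist in the catalog instead of inventing them.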

#Ready to use Redis for GenAI chatbot?

Building a GenAI chatbot using LangChain and Redis involves integrating advanced AI models with efficient storage solutions. This tutorial covers the fundamental steps and code needed to develop a chatbot capable of handling e-commerce queries. With these tools, you can create a responsive, intelligent chatbot for a variety of applications.