
Redis on AWS: Powering real-time AI applications with Amazon Bedrock

Businesses in every industry are rapidly adopting generative AI to power chatbots, copilots, automation, customer experiences, and intelligent apps. But when teams move from experiments to production workloads, the same issues show up again and again:

- LLM inference costs are high and unpredictable.

- Latency must be extremely low for real-time user experiences.

- Operational data in RDS and Aurora must be kept up to date for RAG.

- Agentic workflows require fast, durable memory and reasoning capabilities.

This is where Redis on AWS stands out. Purpose-built for AWS, Redis works with Amazon Bedrock, Agentcore, Redis Data Integration (RDI), and Redis Semantic Caching (LangCache) to deliver a real-time AI architecture that’s ready for production. Customers get lower and more predictable costs, consistently low latency, up-to-date data for RAG, and the performance agents need to operate reliably, all while integrating seamlessly with AWS services.

Redis: Designed for AWS with enterprise power

Redis fits seamlessly into the AWS ecosystem and reinforces the workflows teams already use. It supports private VPC connectivity through AWS PrivateLink, integrates cleanly with IAM, and aligns with standard AWS monitoring, logging, and automation patterns. High availability, active-active global deployments, and managed scaling are built in, not bolted on later.

Because Redis is fully compatible with Redis OSS, teams can migrate without friction or rewrites. And for organizations that need more than basic caching, Redis delivers enterprise-grade durability, vector search, and support for autonomous agents, giving AI workloads the reliability and intelligence they need to run in production.

Enhancing AI Apps: Redis with Amazon Bedrock

Amazon Bedrock provides secure, scalable access to leading foundation models, including Anthropic Claude, Meta Llama, Amazon Titan, Cohere Command R, and more. Redis powers the real-time data layer that makes these models production-ready.

Together, Redis and Amazon Bedrock enable:

- Lightning-fast vector search to ground LLMs with up-to-date enterprise data

- Semantic caching to accelerate LLM queries and reduce redundant calls

- Real-time data ingestion from Aurora and RDS through RDI

- Agentic reasoning loops backed by Agentcore

This tight alignment dramatically reduces operational complexity and accelerates adoption of Bedrock-powered applications.
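As a rough illustration of how vector search grounds a prompt, here is a minimal Python sketch of brute-force k-nearest-neighbor retrieval by cosine similarity, followed by prompt assembly. It is an in-memory stand-in, not Redis or Bedrock code: in Redis the same lookup would be served by a vector index (for example a KNN query through `FT.SEARCH`), and the embeddings would come from an embedding model. The document IDs, texts, and 3-dimensional vectors are made up for the example.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Each document: id -> (embedding, text). Real embeddings would come from an
# embedding model; these 3-dimensional vectors are hand-made for illustration.
Documents = Dict[str, Tuple[List[float], str]]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: Sequence[float], docs: Documents, k: int = 2) -> List[Tuple[str, float]]:
    """Brute-force KNN: score every document and keep the k most similar."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, (vec, _) in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_grounded_prompt(question: str, query_vec: Sequence[float], docs: Documents, k: int = 2) -> str:
    """Prepend the retrieved passages so the model answers from enterprise data."""
    hits = top_k(query_vec, docs, k)
    context = "\n".join(f"- {docs[doc_id][1]}" for doc_id, _ in hits)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Usage with toy data: the query vector is closest to the two refund passages.
docs: Documents = {
    "policy:1": ([1.0, 0.0, 0.0], "Refunds are issued within 14 days."),
    "policy:2": ([0.0, 1.0, 0.0], "Shipping is free on orders over $50."),
    "policy:3": ([0.9, 0.1, 0.0], "Refund requests need an order number."),
}
hits = top_k([1.0, 0.0, 0.0], docs, k=2)
prompt = build_grounded_prompt("How do refunds work?", [1.0, 0.0, 0.0], docs, k=2)
print(prompt)
```

A production index would use an approximate structure such as HNSW instead of scoring every document, but the ranking it returns plays the same role.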

Introducing Redis Semantic Caching (LangCache): Faster, cheaper, smarter AI

Generative AI workloads frequently repeat similar prompts. Without optimization, applications invoke Bedrock models again for questions they have effectively already answered, driving up both cost and latency.

Redis Semantic Caching changes the equation.

How it works

Redis stores the embedding of each prompt alongside the model’s response. When a new request comes in, Redis checks for semantic similarity against what’s already in the cache. If a close match exists, the answer is returned immediately. If not, the request continues to RAG and Amazon Bedrock for inference.
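The lookup flow described above can be sketched in a few lines of Python. This is an in-memory illustration under stated assumptions, not the LangCache API: `toy_embed` is a hypothetical word-count embedding standing in for a real embedding model, `fake_model` stands in for an Amazon Bedrock call, and the 0.9 similarity threshold is an arbitrary example value.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """In-memory stand-in for a semantic cache of (prompt embedding, response) pairs."""

    def __init__(self, embed: Callable[[str], Vector], threshold: float = 0.9) -> None:
        self.embed = embed          # hypothetical embedding function (text -> vector)
        self.threshold = threshold  # minimum cosine similarity that counts as a hit
        self.entries: List[Tuple[Vector, str]] = []

    def lookup(self, prompt: str) -> Optional[str]:
        """Return the response of the most similar cached prompt, if it clears the threshold."""
        query = self.embed(prompt)
        best_score, best_response = -1.0, None
        for vec, response in self.entries:
            score = cosine_similarity(query, vec)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def store(self, prompt: str, response: str) -> None:
        self.entries.append((self.embed(prompt), response))


def answer(prompt: str, cache: SemanticCache, call_model: Callable[[str], str]) -> Tuple[str, bool]:
    """Check the cache first; on a miss, call the model and cache its response."""
    cached = cache.lookup(prompt)
    if cached is not None:
        return cached, True
    response = call_model(prompt)
    cache.store(prompt, response)
    return response, False


# Hypothetical toy embedding: word counts over a tiny vocabulary.
VOCAB = ["refund", "policy", "shipping", "cost"]


def toy_embed(text: str) -> List[float]:
    words = text.lower().replace("?", "").split()
    return [float(words.count(term)) for term in VOCAB]


model_calls: List[str] = []


def fake_model(prompt: str) -> str:
    """Stands in for an Amazon Bedrock call; records each invocation."""
    model_calls.append(prompt)
    return f"answer #{len(model_calls)}"


cache = SemanticCache(toy_embed, threshold=0.9)
first = answer("What is your refund policy?", cache, fake_model)   # miss: model is called
second = answer("Tell me the refund policy", cache, fake_model)    # paraphrase: cache hit
third = answer("How much does shipping cost?", cache, fake_model)  # unrelated: miss
print(first, second, third, len(model_calls))
```

The threshold is the key design choice: set it too low and differently worded but genuinely different questions get the wrong cached answer; set it too high and few requests ever hit the cache.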

Why this matters

- Lower Amazon Bedrock inference cost, since cache hits skip the model call entirely

- Responses in milliseconds instead of hundreds of milliseconds

- Lower load on RAG pipelines and vector indexes

- Accelerated Agentcore reasoning loops

- Consistent, repeatable answers for recurring queries

Semantic caching becomes the “first stop” for every AI request—optimizing cost and performance while preserving accuracy.

Redis Data Integration: Bringing Aurora and RDS into Real-Time AI

Redis Data Integration (RDI) keeps AI applications connected to the data that actually runs the business. Instead of relying on stale snapshots or heavy read traffic against production databases, RDI keeps Redis continuously in sync with what’s happening in AWS data stores. RDI supports:

- Change Data Capture from Amazon RDS and Amazon Aurora

- Streaming data ingestion from Amazon DynamoDB and Amazon S3

- Real-time syncing into Redis

- Optional automatic embedding pipelines

This ensures that any application built on AWS transactional databases can deliver live, current context to Bedrock-powered LLMs without querying the operational databases directly or adding load to them.
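To make the synchronization idea concrete, here is a minimal Python sketch of how a stream of row-level change events can be applied to a key-value target so that it mirrors the source table. The event shape (`op`, `table`, `key`, `after`, with `c`/`r`/`u`/`d` operation codes loosely modeled on Debezium-style change events), the `table:primary-key` key naming, and the dict standing in for Redis are all assumptions for illustration; this is not RDI's API or its actual event format.

```python
from typing import Any, Dict, List

# Stand-in for Redis: key -> hash fields. In Redis, an upsert could be a
# DEL followed by HSET on a key such as "orders:42", and a delete a DEL.
Store = Dict[str, Dict[str, str]]


def redis_key(table: str, primary_key: Any) -> str:
    """Map a source row to a key using a table:primary-key convention (an assumption)."""
    return f"{table}:{primary_key}"


def apply_change(store: Store, event: Dict[str, Any]) -> None:
    """Apply one change event to the target store, keeping it in sync with the source row."""
    op = event["op"]
    key = redis_key(event["table"], event["key"])
    if op in ("c", "r", "u"):  # create, snapshot read, update
        # Replace the whole hash so columns removed from the row don't linger.
        store[key] = {column: str(value) for column, value in event["after"].items()}
    elif op == "d":  # delete
        store.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op!r}")


# Hypothetical events, applied in commit order.
events: List[Dict[str, Any]] = [
    {"op": "c", "table": "orders", "key": 42, "after": {"id": 42, "status": "pending", "total": 19.99}},
    {"op": "u", "table": "orders", "key": 42, "after": {"id": 42, "status": "shipped", "total": 19.99}},
    {"op": "c", "table": "orders", "key": 43, "after": {"id": 43, "status": "pending", "total": 5}},
    {"op": "d", "table": "orders", "key": 43, "after": None},
]

store: Store = {}
for event in events:
    apply_change(store, event)
print(store)
```

Events for the same key must be applied in the order the source database committed them, otherwise an older update can overwrite a newer one. An embedding step (the optional automatic embedding pipeline mentioned above) would fit naturally just before the write.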

Want to use RDI on AWS? Join the public preview.

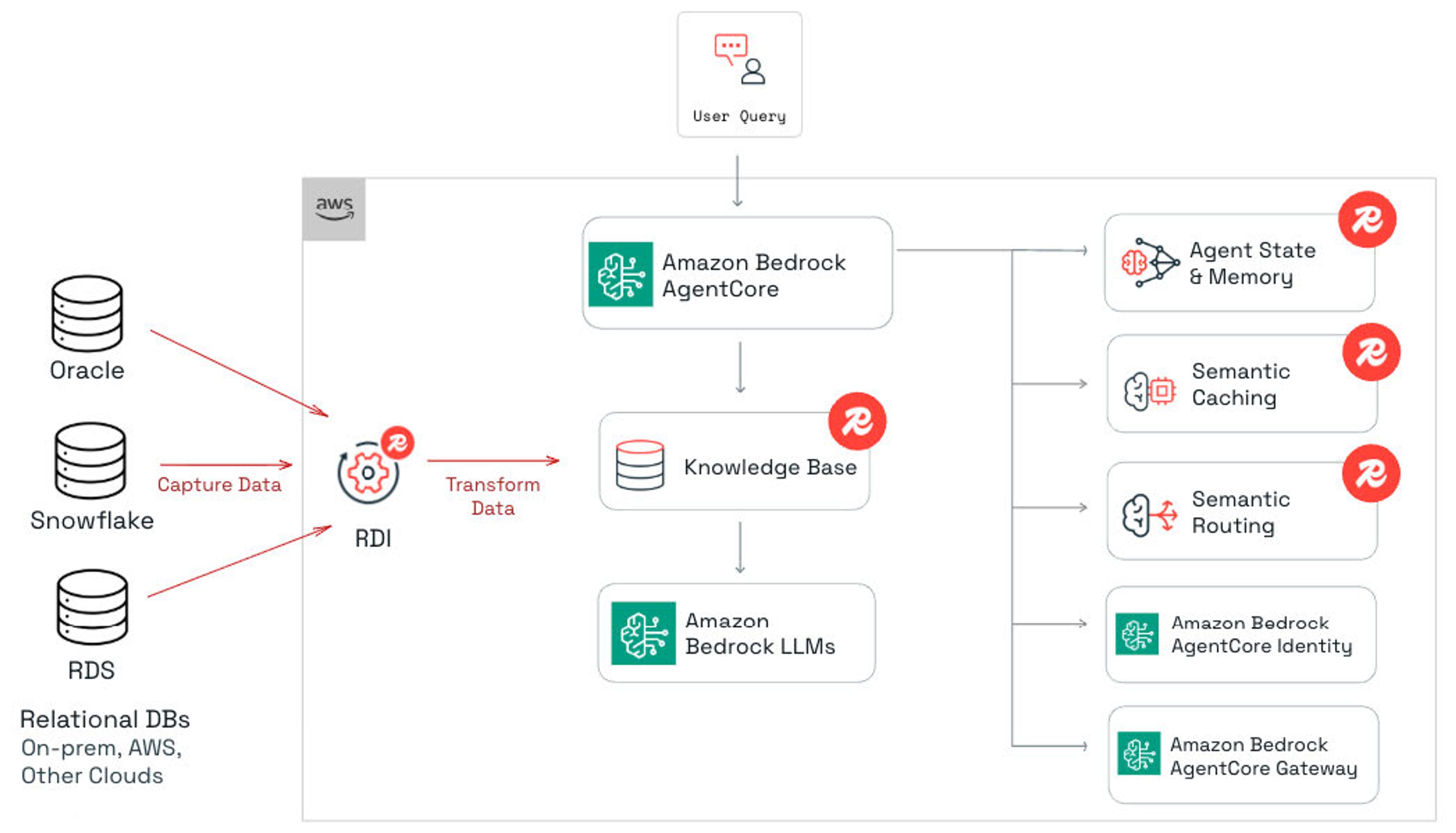

Reference Architecture: Redis on Amazon Bedrock, Semantic Caching, and RDI

Below is the high-level reference architecture showing how AWS and Redis components work together:
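One way to picture the request path through this architecture is the Python sketch below: check the semantic cache, retrieve context on a miss, call Amazon Bedrock, then cache the answer. The request dictionary follows the shape of the `bedrock-runtime` Converse API (`modelId`, `system`, `messages`, `inferenceConfig`), but `handle_request`, the `FakeBedrockClient` stand-in, the exact-match dict used in place of a real semantic cache, and the model ID are hypothetical placeholders so the example runs offline.

```python
from typing import Any, Callable, Dict, List, Optional


def build_converse_request(model_id: str, question: str, context: List[str]) -> Dict[str, Any]:
    """Keyword arguments shaped for the bedrock-runtime Converse API."""
    context_text = "\n".join(f"- {chunk}" for chunk in context)
    return {
        "modelId": model_id,
        "system": [{"text": "Answer using only the provided context."}],
        "messages": [
            {
                "role": "user",
                "content": [{"text": f"Context:\n{context_text}\n\nQuestion: {question}"}],
            }
        ],
        "inferenceConfig": {"maxTokens": 512, "temperature": 0.2},
    }


def handle_request(
    question: str,
    cache_lookup: Callable[[str], Optional[str]],
    cache_store: Callable[[str, str], None],
    retrieve: Callable[[str], List[str]],
    bedrock_client: Any,
    model_id: str,
) -> str:
    # 1. Semantic cache: the "first stop" for every request.
    cached = cache_lookup(question)
    if cached is not None:
        return cached
    # 2. RAG: fetch context from the vector index (kept current by RDI).
    context = retrieve(question)
    # 3. Inference on Amazon Bedrock via the Converse API.
    response = bedrock_client.converse(**build_converse_request(model_id, question, context))
    text = response["output"]["message"]["content"][0]["text"]
    # 4. Cache the answer for future similar prompts.
    cache_store(question, text)
    return text


class FakeBedrockClient:
    """Offline stand-in for boto3.client("bedrock-runtime"); returns a canned reply."""

    def __init__(self) -> None:
        self.requests: List[Dict[str, Any]] = []

    def converse(self, **kwargs: Any) -> Dict[str, Any]:
        self.requests.append(kwargs)
        return {"output": {"message": {"role": "assistant", "content": [{"text": "Within 14 days."}]}}}


client = FakeBedrockClient()
exact_cache: Dict[str, str] = {}  # exact-match dict standing in for the semantic cache
args = dict(
    cache_lookup=exact_cache.get,
    cache_store=exact_cache.__setitem__,
    retrieve=lambda q: ["Refunds are issued within 14 days."],
    bedrock_client=client,
    model_id="hypothetical-model-id",
)
first = handle_request("What is the refund window?", **args)
second = handle_request("What is the refund window?", **args)  # served from the cache
print(first, second, len(client.requests))
```

In a real deployment, `bedrock_client` would be `boto3.client("bedrock-runtime")` and `model_id` a model enabled in your account and Region. Passing the cache and retriever in as functions keeps the orchestration independent of how each layer is hosted.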

Enabling scalable AI apps on AWS

Redis doesn’t replace AWS services; it amplifies them. When Redis is part of the stack, customers tend to use more AWS services because their AI workloads can finally run at scale.

Redis increases demand for:

- Amazon Bedrock (more RAG pipelines and agentic workloads)

- Amazon Aurora / RDS (real-time sync and operational data growth)

- AWS compute services (AWS Lambda, Amazon Elastic Container Service, Amazon Elastic Kubernetes Service)

- VPC networking (high-throughput data movement)

Semantic caching makes AI workloads efficient enough to scale to millions of users. That drives greater Bedrock usage, not less, because lower per-request cost enables new use cases that would otherwise be cost-prohibitive.

Conclusion: The future of AI on AWS is real-time, cost-efficient, and Redis-powered

The future of AI on AWS is real time, cost-efficient, and built for production. Redis on AWS gives teams a practical path to get there. Semantic caching keeps costs under control while improving responsiveness. Vector search grounds LLMs in live data so outputs stay accurate. Agentcore turns reasoning into something you can actually operationalize. RDI connects Aurora and RDS directly into AI pipelines without stressing production systems. And everything fits cleanly into the AWS ecosystem teams already rely on.

For enterprises building intelligent applications on Amazon Bedrock, Redis provides the real-time infrastructure needed to scale with confidence: fast where it matters, predictable on cost, and designed to support AI systems that move beyond demos and into everyday use.

Get started with Redis today

Speak to a Redis expert and learn more about enterprise-grade Redis today.