Chunking Strategies Explained

About

Building LLM apps that actually work starts with better chunking. Ricardo Ferreira breaks down how smart text segmentation helps embedding-based retrieval return answers that make sense. Learn how to structure content to preserve context, reduce noise, and improve vector search accuracy. Whether you’re building semantic search, RAG pipelines, or conversational agents, mastering chunking is key to more reliable results.

Key topics

- Why chunking is critical for precise LLM responses

- How to balance chunk size for accuracy and context

- Fixed-size, content-aware, recursive, and semantic chunking strategies

- Practical LangChain examples to test each approach

- How to find the optimal chunk size for your use case
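Two of the strategies listed above, fixed-size and recursive chunking, can be sketched in plain Python to show how they differ. This is a minimal illustration, not the code used in the session. The function names `fixed_size_chunks` and `recursive_chunks` and the separator order are hypothetical. Sizes are counted in characters for simplicity, while real pipelines often count tokens. LangChain's `RecursiveCharacterTextSplitter` follows a similar coarse-to-fine idea.

```python
from typing import List, Sequence

# Hypothetical separator order, coarsest first: paragraphs, lines, sentences, words, characters.
DEFAULT_SEPARATORS: Sequence[str] = ("\n\n", "\n", ". ", " ", "")


def fixed_size_chunks(text: str, chunk_size: int, overlap: int = 0) -> List[str]:
    """Slide a window of chunk_size characters; neighbouring windows share `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


def recursive_chunks(text: str, chunk_size: int,
                     separators: Sequence[str] = DEFAULT_SEPARATORS) -> List[str]:
    """Split on the coarsest separator first, recursing with finer ones only for oversized pieces."""
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators or separators[0] == "":
        # No structural boundary left: fall back to a hard fixed-size split.
        return fixed_size_chunks(text, chunk_size)
    sep, finer = separators[0], separators[1:]
    chunks: List[str] = []
    current = ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # greedily merge neighbouring pieces while they fit
            continue
        if current:
            chunks.append(current)
            current = ""
        if len(piece) <= chunk_size:
            current = piece
        else:
            # This piece alone is too long: split it with the next, finer separator.
            chunks.extend(recursive_chunks(piece, chunk_size, finer))
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    print(fixed_size_chunks("abcdefghij", 4, overlap=1))
    # → ['abcd', 'defg', 'ghij']
    doc = "First paragraph here.\n\nSecond one is a bit longer than the first.\n\nThird."
    print(recursive_chunks(doc, 30))
    # → ['First paragraph here.', 'Second one is a bit longer', 'than the first.', 'Third.']
```

With overlap, any passage no longer than `overlap` characters lands whole inside at least one window, even if it straddles a boundary. The cost is some duplicated text in the index. The recursive version instead tries to keep paragraphs and sentences intact and only falls back to word or character splits when a piece is too long. Content-aware and semantic chunking are not shown here. They would replace the fixed separator list with document structure or embedding similarity.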

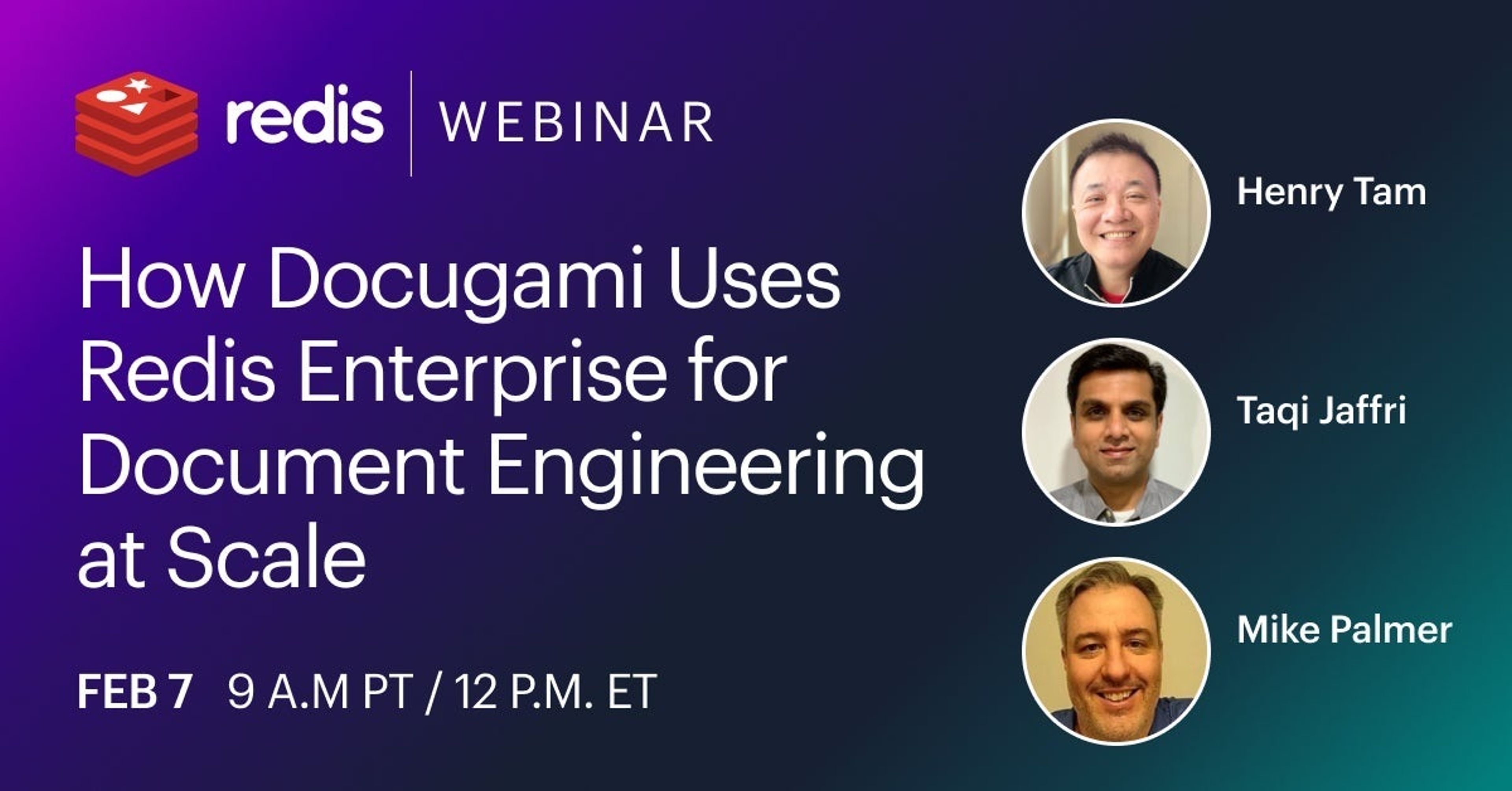

Speakers

Ricardo Ferreira

Principal Developer Advocate
