AI tech talk: LLM memory & vector databases

About

Traditional databases struggle to deliver the speed and flexibility that LLM applications need, leaving developers stuck with performance bottlenecks and frustrating limits.

Redis sets a new standard for LLMs. Whether you're using LangChain to process Redis docs into semantic chunks or implementing Retrieval-Augmented Generation (RAG) workflows for sharper, more accurate responses, Redis delivers low-latency, high-performance memory management that keeps your apps moving fast.

Check out our tech talk to discover how Redis keeps LLM memory seamless and efficient.

Key topics

- Why vector databases are key to making modern AI work

- How Redis simplifies LLM memory management with tools like LangChain and RedisVL

- How RAG techniques, such as reranking, deliver better results

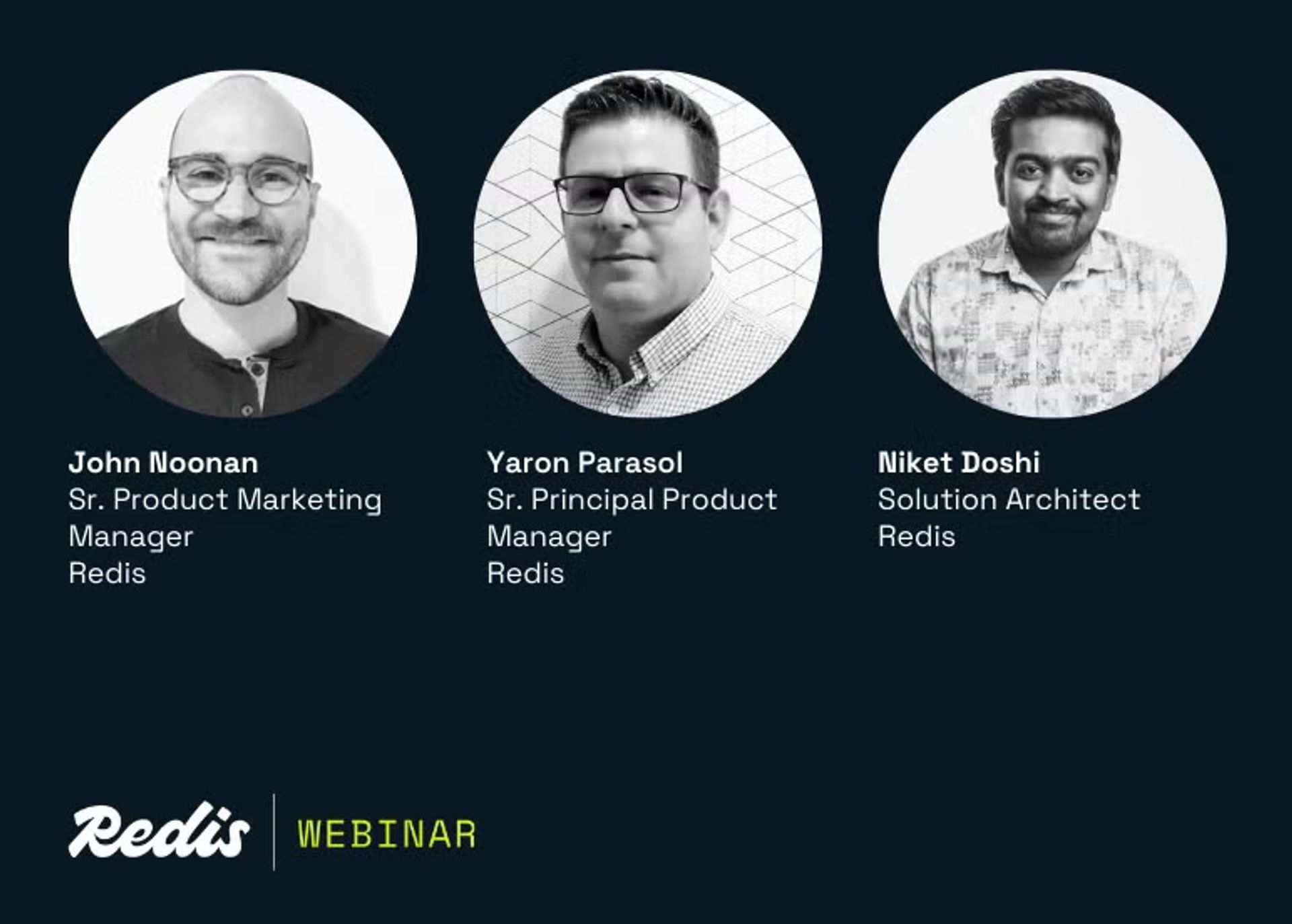

Speakers

Talon Miller

Principal Technical Marketer

Get started with Redis today

Speak to a Redis expert and learn more about enterprise-grade Redis today.