Configure high availability for replica shards

Configure high availability for replica shards so that the cluster automatically migrates the replica shards to an available node.

| Redis Software |
|---|

When you enable database replication, Redis Software creates a replica of each primary shard. The replica shard is always located on a different node than the primary shard to make your data highly available. If the primary shard or the node hosting it fails, the replica is promoted to primary.

Without replica high availability (replica_ha) enabled, the promoted primary shard becomes a single point of failure as the only copy of the data.

Enabling replica_ha configures the cluster to automatically replicate the promoted replica on an available node. This returns the database to a state with two copies of the data: the former replica shard, now promoted to primary, and a new replica shard.

An available node:

- Meets replica migration requirements, such as rack-awareness.

- Has enough available RAM to store the replica shard.

- Does not also contain the primary shard.
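The three conditions above can be expressed as a single predicate. The following minimal Python sketch does not reproduce Redis Software's actual placement logic. The `Node` class and the `is_available_node` function are hypothetical. Rack-awareness is simplified to the assumption that a replica must not share a rack ID with its primary.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    # Hypothetical node model for illustration; not a Redis Software API.
    node_id: int
    rack_id: str
    free_ram_bytes: int
    shard_ids: set = field(default_factory=set)


def is_available_node(node, primary_shard_id, primary_rack_id,
                      replica_size_bytes, rack_aware=True):
    """Return True if `node` could host a new replica of `primary_shard_id`."""
    # The node must not also contain the primary shard.
    if primary_shard_id in node.shard_ids:
        return False
    # Assumption: with rack-awareness, the replica must be on a different rack than its primary.
    if rack_aware and node.rack_id == primary_rack_id:
        return False
    # The node must have enough available RAM to store the replica shard.
    return node.free_ram_bytes >= replica_size_bytes
```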

In practice, replica migration creates a new replica shard and copies the data from the primary shard to the new replica shard.

For example:

- Node:2 has a primary shard and node:3 has the corresponding replica shard.

- Either:

  - Node:2 fails and the replica shard on node:3 is promoted to primary.

  - Node:3 fails and the primary shard is no longer replicated to the replica shard on the failed node.

- If replica HA is enabled, a new replica shard is created on an available node.

- The data from the primary shard is replicated to the new replica shard.
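The example above can be traced with a small Python simulation. This is a simplified sketch that rests on several assumptions:

- `handle_node_failure` is a hypothetical helper.
- Node names are plain strings.
- It ignores RAM, rack-awareness, and the grace period.
- It picks the first live node that does not host the primary.

```python
def handle_node_failure(placement, failed_node, live_nodes, replica_ha=True):
    """Return the new placement of one primary/replica pair after `failed_node` fails.

    `placement` is a dict like {"primary": "node:2", "replica": "node:3"}.
    """
    primary, replica = placement["primary"], placement["replica"]
    if failed_node == primary:
        # The replica on the surviving node is promoted to primary.
        primary, replica = replica, None
    elif failed_node == replica:
        # The primary survives, but its replica is gone.
        replica = None
    if replica is None and replica_ha:
        # Simplification: use the first live node that does not host the primary.
        candidates = [n for n in live_nodes if n not in (primary, failed_node)]
        replica = candidates[0] if candidates else None
    return {"primary": primary, "replica": replica}
```

Consider the node:2 failure in the example. `handle_node_failure({"primary": "node:2", "replica": "node:3"}, "node:2", ["node:1", "node:3"])` returns `{"primary": "node:3", "replica": "node:1"}`. With `replica_ha=False`, the replica stays `None`, so the promoted primary is the only copy of the data.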

- Replica HA follows all prerequisites of replica migration, such as rack-awareness.

- Replica HA migrates as many shards as possible based on available DRAM in the target node. When no DRAM is available, replica HA stops migrating replica shards to that node.
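The DRAM limit means that the target node's free memory bounds how much can migrate to it. The following minimal Python sketch makes three assumptions:

- Shards are given as hypothetical `(shard_id, size_bytes)` pairs.
- The pairs are already in migration order.
- All other placement rules are ignored.

```python
def migrate_to_target(replica_shards, target_free_dram_bytes):
    """Assign replica shards to one target node until its free DRAM is used up.

    Returns (migrated_ids, not_migrated_ids).
    """
    migrated, not_migrated = [], []
    free = target_free_dram_bytes
    for shard_id, size in replica_shards:
        if size <= free:
            migrated.append(shard_id)
            free -= size  # reserve DRAM on the target for this replica
        else:
            not_migrated.append(shard_id)  # does not fit in the remaining DRAM
    return migrated, not_migrated
```

This version keeps trying smaller shards after a larger one does not fit. This page does not state whether Redis Software does the same.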

Configure high availability for replica shards

If replica high availability is enabled for both the cluster and a database, the database's replica shards automatically migrate to another node when a primary or replica shard fails. If replica HA is not enabled at the cluster level, replica HA will not migrate replica shards even if replica HA is enabled for a database.
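The rule above is a logical AND of the cluster setting and the database setting. The following trivial Python sketch (the function name is hypothetical) prints the four combinations:

```python
def replica_ha_migrates(cluster_slave_ha: bool, db_slave_ha: bool) -> bool:
    # Replica shards migrate only when replica HA is enabled for BOTH
    # the cluster and the database.
    return cluster_slave_ha and db_slave_ha


if __name__ == "__main__":
    for cluster in (True, False):
        for db in (True, False):
            print(f"cluster={cluster!s:5} db={db!s:5} -> migrates={replica_ha_migrates(cluster, db)}")
```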

Replica high availability is enabled for the cluster by default.

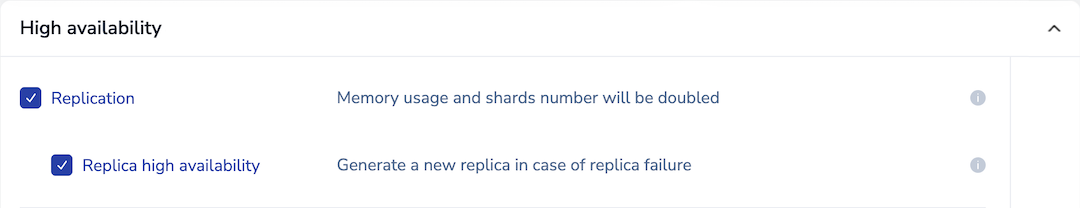

When you create a database using the Cluster Manager UI, replica high availability is enabled for the database by default if you enable replication.

To use replication without replica high availability, clear the Replica high availability checkbox.

You can also enable or turn off replica high availability for a database using rladmin or the REST API.

Configure cluster policy for replica HA

To enable or turn off replica high availability by default for the entire cluster, use one of the following methods:

- rladmin tune cluster:

  rladmin tune cluster slave_ha { enabled | disabled }

- Update cluster policy REST API request:

  PUT /v1/cluster/policy { "slave_ha": <boolean> }
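The REST API request above can be assembled with Python's standard library. This sketch builds the request but does not send it. The base URL and credentials are placeholders. Port 9443 and HTTP Basic authentication are assumptions about your deployment.

```python
import base64
import json
import urllib.request


def build_cluster_policy_request(base_url, slave_ha, username, password):
    """Build (but do not send) PUT /v1/cluster/policy with {"slave_ha": <boolean>}."""
    body = json.dumps({"slave_ha": slave_ha}).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/v1/cluster/policy", data=body, method="PUT"
    )
    req.add_header("Content-Type", "application/json")
    # Assumption: the cluster accepts HTTP Basic authentication.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    req.add_header("Authorization", f"Basic {token}")
    return req


if __name__ == "__main__":
    # Placeholder URL and credentials; replace them with your own.
    req = build_cluster_policy_request(
        "https://cluster.example.com:9443", True, "admin@example.com", "password"
    )
    print(req.get_method(), req.full_url, req.data.decode("utf-8"))
    # To send it: urllib.request.urlopen(req). Your client must trust the cluster's TLS certificate.
```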

Turn off replica HA for a database

To turn off replica high availability for a specific database using rladmin, run:

rladmin tune db db:<ID> slave_ha disabled

You can use the database name in place of db:<ID> in the preceding command.
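If you script this change, you can build the rladmin arguments in one place so that both the db:<ID> form and the database-name form are handled. The following minimal Python sketch makes these assumptions:

- The helper names are hypothetical.
- rladmin accepts `enabled` as the counterpart of `disabled`.
- `subprocess.run` is called on a node where rladmin is installed.

```python
import subprocess


def rladmin_db_slave_ha_args(db, enabled):
    """Return argv for `rladmin tune db <db> slave_ha {enabled|disabled}`.

    An int `db` is treated as a database ID (db:<ID>); a str is used as the database name.
    """
    target = f"db:{db}" if isinstance(db, int) else db
    return ["rladmin", "tune", "db", target, "slave_ha", "enabled" if enabled else "disabled"]


def set_db_slave_ha(db, enabled):
    # Run on a cluster node; check=True raises CalledProcessError if rladmin exits non-zero.
    subprocess.run(rladmin_db_slave_ha_args(db, enabled), check=True)
```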

Configuration options

You can see the current configuration options for replica HA with:

rladmin info cluster

Grace period

By default, replica HA waits for a 10-minute grace period after a node failure before it creates new replica shards.

Without manual database-level configuration, rladmin shows slave_ha: disabled (database) for databases even when replica HA is enabled at the cluster level.

To configure this grace period from rladmin, run:

rladmin tune cluster slave_ha_grace_period <time_in_seconds>
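Because slave_ha_grace_period is set in seconds, it helps to convert from minutes and to know when new replica shards could start to be created. The following minimal Python sketch uses hypothetical helper names, and its timeline ignores cooldown periods and node availability.

```python
from datetime import datetime, timedelta

DEFAULT_GRACE_PERIOD = timedelta(minutes=10)  # documented default


def grace_period_command(period):
    # slave_ha_grace_period takes a whole number of seconds.
    return f"rladmin tune cluster slave_ha_grace_period {int(period.total_seconds())}"


def earliest_new_replica_time(node_failed_at, period=DEFAULT_GRACE_PERIOD):
    # New replica shards are not created until the grace period after the failure has passed.
    return node_failed_at + period


if __name__ == "__main__":
    print(grace_period_command(timedelta(minutes=15)))
    print(earliest_new_replica_time(datetime(2024, 1, 1, 12, 0)))
```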

Shard priority

Replica shard migration is based on priority. When memory resources are limited, the most important replica shards are migrated first:

- slave_ha_priority - Replica shards with higher integer values are migrated before shards with lower values.

  To assign priority to a database, run:

  rladmin tune db db:<ID> slave_ha_priority <positive integer>

  You can use the database name in place of db:<ID> in the preceding command.

- Active-Active databases - Active-Active database synchronization uses replica shards to synchronize between the replicas.

- Database size - It is easier and more efficient to move replica shards of smaller databases.

- Database UID - The replica shards of databases with a higher UID are moved first.
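The criteria above can be read as a sort key. This page does not confirm how the criteria combine, so the following Python sketch assumes the following:

- The criteria apply in the listed order, each one breaking ties left by the previous one.
- Active-Active databases go first.
- `DbReplicaInfo` and `migration_order` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class DbReplicaInfo:
    # Hypothetical record; field names are illustrative, not rladmin output.
    uid: int
    slave_ha_priority: int
    active_active: bool
    size_bytes: int


def migration_order(dbs):
    """Sort databases so that those whose replica shards migrate first come first."""
    return sorted(
        dbs,
        key=lambda db: (
            -db.slave_ha_priority,  # higher slave_ha_priority first
            not db.active_active,   # Active-Active databases first (False sorts before True)
            db.size_bytes,          # smaller databases first
            -db.uid,                # higher UID first
        ),
    )
```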

Cooldown periods

Both the cluster and the database have cooldown periods.

After a node failure, the cluster cooldown period (slave_ha_cooldown_period) prevents another replica migration due to another node failure for any database in the cluster until the cooldown period ends. The default is one hour.

After a database is migrated with replica HA, it cannot go through another migration due to another node failure until the cooldown period for the database (slave_ha_bdb_cooldown_period) ends. The default is two hours.
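The two cooldowns act as independent gates: a migration triggered by another node failure proceeds only if neither cooldown is still running. The following minimal Python sketch uses the documented defaults. The function and argument names are hypothetical, and each `*_start` argument is assumed to be the time that cooldown began.

```python
from datetime import datetime, timedelta

CLUSTER_COOLDOWN = timedelta(hours=1)  # slave_ha_cooldown_period default
DB_COOLDOWN = timedelta(hours=2)       # slave_ha_bdb_cooldown_period default


def can_migrate_db(now, cluster_cooldown_start=None, db_cooldown_start=None,
                   cluster_cooldown=CLUSTER_COOLDOWN, db_cooldown=DB_COOLDOWN):
    """Return True if neither the cluster nor the database cooldown is still running at `now`."""
    if cluster_cooldown_start is not None and now < cluster_cooldown_start + cluster_cooldown:
        return False  # cluster-wide cooldown blocks migration for every database
    if db_cooldown_start is not None and now < db_cooldown_start + db_cooldown:
        return False  # this database was migrated too recently
    return True


if __name__ == "__main__":
    failure = datetime(2024, 1, 1, 9, 0)
    print(can_migrate_db(failure + timedelta(minutes=30), cluster_cooldown_start=failure))  # False
```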

To configure cooldown periods, use rladmin tune cluster:

- For the cluster:

  rladmin tune cluster slave_ha_cooldown_period <time_in_seconds>

- For all databases in the cluster:

  rladmin tune cluster slave_ha_bdb_cooldown_period <time_in_seconds>

Alerts

The following alerts are sent during replica HA activation:

- Shard migration begins after the grace period.

- Shard migration fails because there is no available node (sent hourly).

- Shard migration is delayed because of the cooldown period.
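To reason about which of these alerts to expect, you can sketch the conditions as a decision function. This is a hypothetical illustration, not Redis Software's alerting code. The function name, its inputs, and the order in which it checks the conditions are all assumptions.

```python
from datetime import datetime, timedelta


def expected_replica_ha_alert(now, node_failed_at, grace_period,
                              in_cooldown, has_available_node):
    """Return the text of the replica HA alert that applies at `now`, or None."""
    if now < node_failed_at + grace_period:
        return None  # still within the grace period; no migration yet
    if in_cooldown:
        return "Shard migration is delayed because of the cooldown period."
    if not has_available_node:
        return "Shard migration fails because there is no available node."
    return "Shard migration begins after the grace period."


if __name__ == "__main__":
    failed = datetime(2024, 1, 1, 9, 0)
    print(expected_replica_ha_alert(failed + timedelta(minutes=11), failed,
                                    timedelta(minutes=10), False, True))
```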