What is write-through caching?

Imagine you've built a movie streaming app. You used PostgreSQL as your data store, and as you needed to scale, you implemented caching using Redis. However, you are now seeing delays before updated user profiles or subscriptions are reflected in the app.

For example, when a user purchases or modifies a subscription, they expect the change to be reflected on their account immediately, so that the movies and shows included in the new subscription become available to watch. You need a way to provide strong consistency for user data quickly. In this situation, what you need is the "write-through pattern."

With the write-through pattern, every time an application writes data to the cache, it also updates the records in the database. Unlike the write-behind pattern, the writing thread waits until the write to the database has also completed.

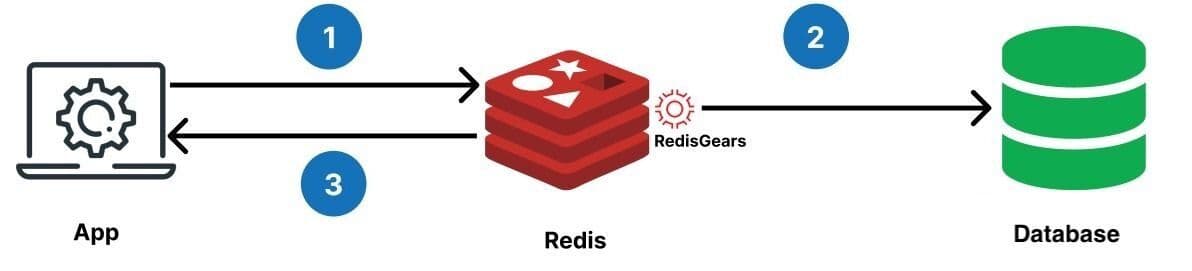

Below is a diagram of the write-through pattern for the application:

The pattern works as follows:

- The application reads and writes data to Redis.

- Redis syncs any changed data to the PostgreSQL database synchronously (immediately).

Note: the Redis server is blocked until it receives a response from the main database.
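The following minimal Python sketch makes the ordering concrete. It is not RedisGears code:

- A plain dict stands in for Redis.
- An in-memory SQLite database (standard `sqlite3` module, assuming SQLite 3.24+ for the `ON CONFLICT` upsert) stands in for PostgreSQL.
- The class `WriteThroughCache` and the `users` table with `username` and `plan` columns are hypothetical.
- `write()` plays the role of the whole path (application to Redis to PostgreSQL). It returns only after the database commit succeeds, and it updates the cache only after that.

```python
import sqlite3
from typing import Dict, Optional


class WriteThroughCache:
    """Toy write-through cache: a dict stands in for Redis, SQLite for PostgreSQL."""

    def __init__(self, db: sqlite3.Connection) -> None:
        self.cache: Dict[str, Dict[str, str]] = {}
        self.db = db
        # Hypothetical table used only for this sketch.
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS users (id TEXT PRIMARY KEY, username TEXT, plan TEXT)"
        )

    def write(self, user_id: str, fields: Dict[str, str]) -> None:
        # Combine the update with what is already cached for this user.
        merged = {**self.cache.get(user_id, {}), **fields}
        # Synchronous step: the caller waits here until the database commit finishes.
        with self.db:  # commits on success, rolls back and re-raises on error
            self.db.execute(
                "INSERT INTO users (id, username, plan) VALUES (?, ?, ?) "
                "ON CONFLICT(id) DO UPDATE SET "
                "username = excluded.username, plan = excluded.plan",
                (user_id, merged.get("username"), merged.get("plan")),
            )
        # Reached only if the database write succeeded, so cache and database agree.
        self.cache[user_id] = merged

    def read(self, user_id: str) -> Optional[Dict[str, str]]:
        # Reads are served from the cache only.
        return self.cache.get(user_id)
```

The cache is touched only after the commit. A failed database write therefore raises an error and leaves the cached value unchanged, so the two stores do not drift apart.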

There are two related write patterns; the main differences between them are as follows:

| Write behind | Write through |
|---|---|
| Syncs data asynchronously | Syncs data synchronously (immediately) |
| Data between the cache and the system of record (database) is inconsistent for a short time | Data between the cache and the system of record (database) is always consistent |
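The difference shown in the table fits in a few lines of Python. This is a toy model, not Redis or RedisGears code:

- Dicts stand in for the cache and the database.
- The class names are hypothetical.
- `flush()` stands in for the background worker that a real write-behind setup would run asynchronously.

```python
from collections import deque


class WriteThroughStore:
    """Every write reaches the database before write() returns."""

    def __init__(self) -> None:
        self.cache: dict = {}
        self.db: dict = {}

    def write(self, key, value) -> None:
        self.db[key] = value  # synchronous: the caller waits for this
        self.cache[key] = value


class WriteBehindStore:
    """Writes land in the cache now and reach the database later."""

    def __init__(self) -> None:
        self.cache: dict = {}
        self.db: dict = {}
        self.pending: deque = deque()

    def write(self, key, value) -> None:
        self.cache[key] = value
        self.pending.append((key, value))  # database write is deferred

    def flush(self) -> None:
        # A real system would drain this queue from a background worker.
        while self.pending:
            key, value = self.pending.popleft()
            self.db[key] = value
```

Right after `write()`, `WriteThroughStore` has identical cache and database contents. `WriteBehindStore`'s database, by contrast, lags until `flush()` runs, and that lag is the short inconsistency window listed in the table.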

Learn more about the write-behind pattern.

Why you should use Redis for write-through caching

Write-through caching with Redis ensures that the cache holding critical data is always up to date with the database. This provides strong consistency, and because reads are served from Redis, it also improves read performance.

Consider the following scenarios from different applications:

- E-commerce application: In an e-commerce application, write-through caching can be used to ensure consistency of product inventory. Whenever a customer purchases a product, the inventory count should be updated immediately to avoid overselling. Redis can be used to cache the inventory count, and every update to the count can be written through to the database. This ensures that the inventory count in the cache is always up-to-date, and customers are not able to purchase items that are out of stock.

- Banking application: In a banking application, write-through caching can be used to ensure consistency of account balances. Whenever a transaction is made, the account balance should be updated immediately to avoid overdrafts or other issues. Redis can be used to cache the account balances, and every transaction can be written through to the database. This ensures that the balance in the cache is always up-to-date, and transactions can be processed with strong consistency.

- Online gaming platform: Suppose you have an online gaming platform where users can play games against each other. With write-through caching, any changes made to a user's score or game state would be saved to the database and also cached in Redis. This ensures that any subsequent reads for that user's score or game state would hit the cache first. This helps to reduce the load on the database and ensures that the game state displayed to users is always up-to-date.

- Claims Processing System: In an insurance claims processing system, claims data needs to be consistent and up-to-date across different systems and applications. With write-through caching in Redis, new claims data can be written to both the database and Redis cache. This ensures that different applications always have the most up-to-date information about the claims, making it easier for claims adjusters to access the information they need to process claims more quickly and efficiently.

- Healthcare Applications: In healthcare applications, patient data needs to be consistent and up-to-date across different systems and applications. With write-through caching in Redis, updated patient data can be written to both the database and Redis cache, ensuring that different applications always have the latest patient information. This can help improve patient care by providing accurate and timely information to healthcare providers.

- Social media application: In a social media application, write-through caching can be used to ensure consistency of user profiles. Whenever a user updates their profile, the changes should be reflected immediately to avoid showing outdated information to other users. Redis can be used to cache the user profiles, and every update can be written through to the database. This ensures that the profile information in the cache is always up-to-date, and users can see accurate information about each other.

Redis programmability for write-through caching using RedisGears

Tip

You can skip this section if you are already familiar with RedisGears.

What is RedisGears?

RedisGears is a programmable serverless engine for transaction, batch, and event-driven data processing, allowing users to write and run their own functions on data stored in Redis.

Functions can be implemented in different languages, including Python and C, and can be executed by the RedisGears engine in one of two ways:

- Batch: triggered by the Run action; execution is immediate and operates on existing data

- Event: triggered by the Register action; execution is triggered by new events and operates on their data

Some batch-type operations RedisGears can perform:

- Run an operation on all keys in the KeySpace, or on keys matching a certain pattern, such as:

  - Prefix all KeyNames with "person:"

  - Delete all keys whose value is smaller than zero

  - Write all the KeyNames starting with "person:" to a set

- Run a set of operations on all (or matched) keys, where the output of one operation is the input of another, such as:

  - Find all keys with the prefix "person:" (assume all of them are of type hash)

  - Increase each user's days_old by 1, then sum them by age group (10-20, 20-30, etc.)

  - Add today's stats to the sorted set of every client, calculate the average over the last 7 days, and save the computed result in a string
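The batch examples above can be mimicked in plain Python to show the shape of each operation. This illustration does not use the RedisGears API:

- A dict stands in for the keyspace.
- `fnmatch.fnmatchcase` approximates Redis glob-style key patterns.
- All function names are hypothetical.
- The last function chains two steps, so the output of the update feeds the aggregation.

```python
from collections import defaultdict
from fnmatch import fnmatchcase


def keys_matching(keyspace: dict, pattern: str = "*") -> list:
    # fnmatch globbing is close to, but not identical to, Redis key patterns.
    return [key for key in keyspace if fnmatchcase(key, pattern)]


def prefix_all(keyspace: dict, prefix: str) -> dict:
    # "Prefix all KeyNames with person:" as a renamed copy of the keyspace.
    return {prefix + key: value for key, value in keyspace.items()}


def delete_negative(keyspace: dict) -> None:
    # "Delete all keys whose value is smaller than zero" (numeric values only).
    for key in keys_matching(keyspace):  # list copy, so deleting is safe
        value = keyspace[key]
        if isinstance(value, (int, float)) and value < 0:
            del keyspace[key]


def names_with_prefix(keyspace: dict, prefix: str) -> set:
    # "Write all the KeyNames starting with person: to a set".
    return {key for key in keyspace if key.startswith(prefix)}


def bump_and_group(keyspace: dict) -> dict:
    # Chained operations: increment days_old on each person hash,
    # then sum the updated values by 10-year age group.
    totals: dict = defaultdict(int)
    for key in keys_matching(keyspace, "person:*"):
        person = keyspace[key]  # assumed to be a dict, like a Redis hash
        person["days_old"] += 1
        low = (person["age"] // 10) * 10
        totals[f"{low}-{low + 10}"] += person["days_old"]
    return dict(totals)
```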

Some event-type operations RedisGears can perform:

- RedisGears can also register event listeners that trigger a function execution every time a watched key changes, such as:

  - Listen for all operations on all keys and keep a list of all KeyNames in the KeySpace

  - Listen for DEL operations on keys with the prefix "I-AM-IMPORTANT:" and asynchronously dump them in a "deleted keys" log file

  - Listen for all HINCRBY operations on the element score of keys with the prefix "player:" and synchronously update a user's level when the score reaches 1000
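You can picture event-driven execution as callbacks that fire after a matching command runs. The sketch below is a hypothetical, dict-backed stand-in rather than the RedisGears register API. The class, its methods, and the leveling rule (level = 1 + score // 1000) are assumptions made for illustration. Unlike the "deleted keys" example above, every callback here runs synchronously.

```python
from fnmatch import fnmatchcase


class ToyKeySpace:
    """Dict-backed stand-in for Redis that fires callbacks on matching commands."""

    def __init__(self) -> None:
        self.data: dict = {}
        self.listeners: list = []  # (key pattern, command name, callback)

    def register(self, pattern: str, command: str, callback) -> None:
        self.listeners.append((pattern, command, callback))

    def _fire(self, command: str, key: str) -> None:
        for pattern, cmd, callback in self.listeners:
            if cmd == command and fnmatchcase(key, pattern):
                callback(self, key)

    def hincrby(self, key: str, field: str, amount: int) -> int:
        fields = self.data.setdefault(key, {})
        fields[field] = fields.get(field, 0) + amount
        self._fire("hincrby", key)  # listeners see the updated value
        return fields[field]

    def delete(self, key: str) -> bool:
        existed = key in self.data
        if existed:
            del self.data[key]
            self._fire("del", key)
        return existed


def update_level(keyspace: ToyKeySpace, key: str) -> None:
    # Hypothetical rule: one level per 1000 points, so reaching 1000 means level 2.
    player = keyspace.data[key]
    player["level"] = 1 + player.get("score", 0) // 1000
```

A listener such as `update_level` runs inside the same call as the HINCRBY, which is the synchronous behavior described for "player:" keys. An asynchronous listener would instead hand the key off to a queue.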

How do I use RedisGears?

Run the Docker container:

For a very simple example that lists all keys in your Redis database with the prefix "person:", create the following Python script and name it hello_gears.py:

Execute your function:

Using gears-cli

The gears-cli tool provides an easier way to execute RedisGears functions, especially if you also need to pass some parameters.

It's written in Python and can be installed with pip:

Usage:

RedisGears references

- RedisGears docs

- rgsync docs

- Installing RedisGears

- Introduction to RedisGears blog

- RedisGears GA - RedisConf 2020 video

- Conference talk video by creator of RedisGears

- Redis Gears sync with MongoDB

Programming Redis using the write-through pattern

For our sample code, we will demonstrate writing users to Redis and then writing through to PostgreSQL. Use the docker-compose.yml file below to set up the required environment:

To run the docker-compose file, run the following command:

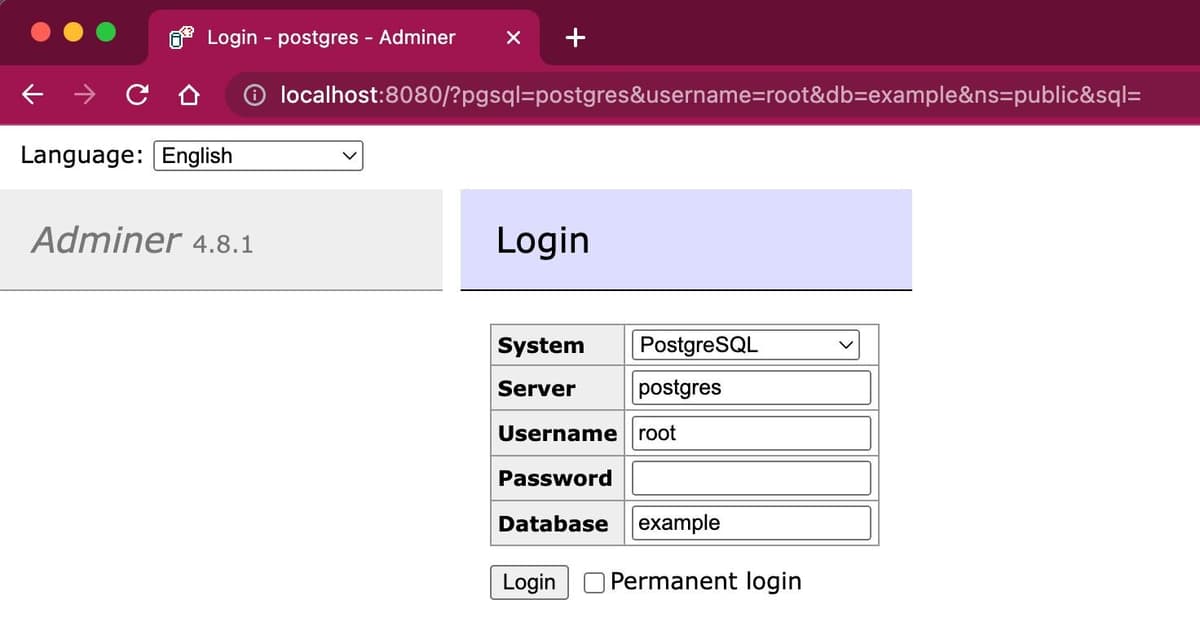

This will create a Redis server, a PostgreSQL server, and an Adminer server. Adminer is a web-based database management tool that allows you to view and edit data in your database.

Next, open your browser to http://localhost:8080/?pgsql=postgres&username=root&db=example&ns=public&sql=. You will have to input the password (which is password in the example above),

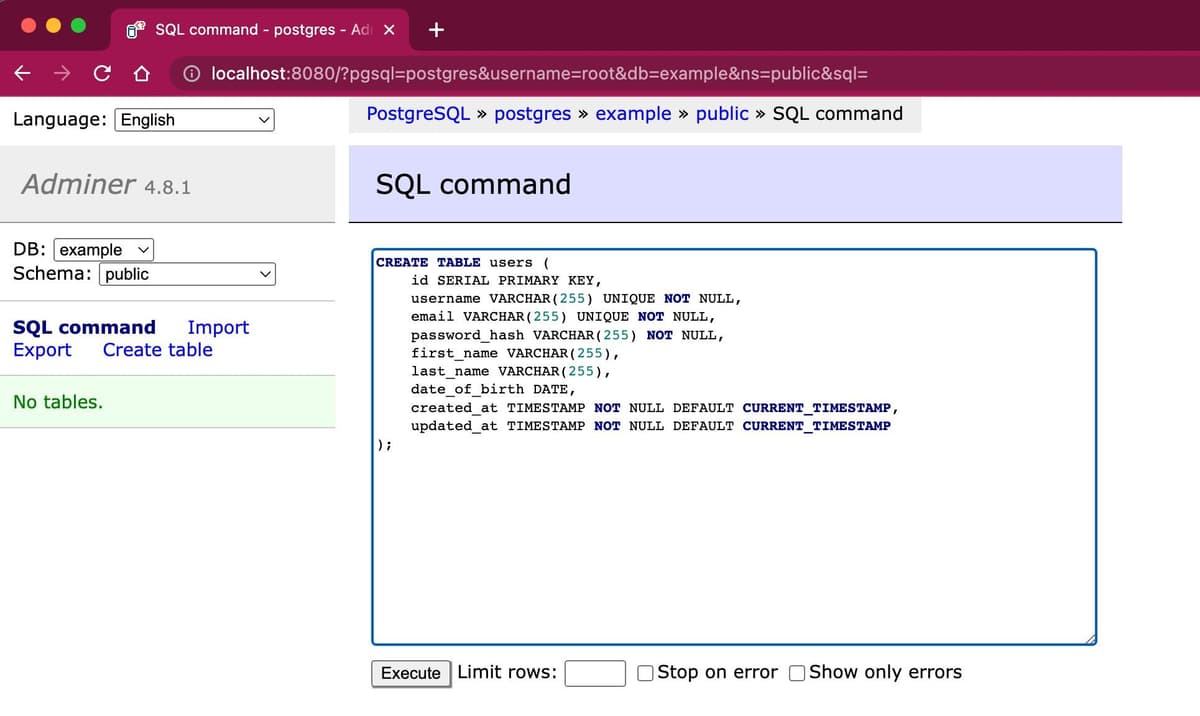

then you will be taken to a SQL command page. Run the following SQL command to create a table:

Before using the write-through pattern (which syncs data from Redis to the system of record), developers need to load some code (Python, in our example) into the Redis server. The Redis server's RedisGears module interprets the Python code and syncs the data from Redis to the system of record.

Now we need to create a RedisGears recipe that writes through to the PostgreSQL database. The following Python code does that:

Make sure you save this code as "write-through.py", because the next instructions use it. This example shows how to map Redis hash fields to PostgreSQL table columns. The RGWriteThrough function takes a usersMapping dictionary whose keys are the Redis hash fields and whose values are the corresponding PostgreSQL table columns.
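The following small Python sketch shows what a field-to-column mapping does by upserting one Redis-style hash into a SQL table. It is an illustration only, not rgsync's implementation:

- SQLite (standard `sqlite3` module, assuming SQLite 3.24+ for `ON CONFLICT`) stands in for PostgreSQL.
- The function name `write_row`, the `users` table, and the mapping of a hypothetical hash field `first` to a `first_name` column are assumptions.
- Only mapped fields are written, and only the columns present in the hash are updated.

```python
import sqlite3


def write_row(db: sqlite3.Connection, table: str, id_column: str,
              row_id, hash_fields: dict, mapping: dict) -> None:
    """Upsert the mapped hash fields into table, keyed by id_column."""
    # Keep only fields that have a column mapping (hash field -> table column).
    pairs = [(mapping[field], value) for field, value in hash_fields.items()
             if field in mapping]
    columns = [id_column] + [column for column, _ in pairs]
    values = [row_id] + [value for _, value in pairs]
    placeholders = ", ".join("?" for _ in columns)
    # Update only the columns present in this write; other columns keep their values.
    updates = ", ".join(f"{column} = excluded.{column}" for column, _ in pairs)
    action = f"DO UPDATE SET {updates}" if updates else "DO NOTHING"
    # Table and column names come from the trusted mapping, never from user input.
    sql = (f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders}) "
           f"ON CONFLICT({id_column}) {action}")
    with db:  # commit on success, roll back on error
        db.execute(sql, values)
```

A second call that passes only `{"username": "bar"}` changes just the username column. This mirrors updating a single hash field later in this tutorial.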

What is a RedisGears recipe?

A collection of RedisGears functions, together with any dependencies they may have, that implements a high-level functional purpose is called a recipe. Example: the "RGWriteThrough" function in the Python code above.

The Python file needs a few dependencies in order to work. Below is the requirements.txt file that lists them; create it alongside the "write-through.py" file:

There are two ways (gears CLI and RG.PYEXECUTE) to load that Python file into the Redis server:

1. Using the gears command-line interface (CLI)

Find more information about the Gears CLI at gears-cli and rgsync.

To run our write-through recipe using gears-cli, we need to run the following command:

You should get a response that says "OK". That is how you know you have successfully loaded the Python file into the Redis server.

Tip

If you are on Windows, we recommend you use WSL to install and use gears-cli.

2. Using the RG.PYEXECUTE command from the Redis command line.

Tip

The RG.PYEXECUTE command can also be executed from Node.js code (consult the sample Node file for more details).
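When you load the recipe from application code, the command consists of the script source followed by its dependencies. The Python sketch below only builds that argument list. It assumes RedisGears 1.x, where package names follow the REQUIREMENTS keyword as separate arguments. The helper names are hypothetical.

```python
from typing import List


def parse_requirements(text: str) -> List[str]:
    # One requirement per line; blank lines and '#' comments are skipped.
    reqs = []
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            reqs.append(line)
    return reqs


def pyexecute_args(script_source: str, requirements_text: str = "") -> List[str]:
    """Build the arguments for RG.PYEXECUTE <code> [REQUIREMENTS <pkg> ...]."""
    args = ["RG.PYEXECUTE", script_source]
    reqs = parse_requirements(requirements_text)
    if reqs:
        args += ["REQUIREMENTS", *reqs]
    return args
```

With the redis-py client, for example, you could send the list with `redis.Redis(host="localhost", port=6379).execute_command(*args)`. Here, `args` is built from the contents of "write-through.py" and requirements.txt, and the host and port are assumptions about your setup. As with gears-cli, a successful load should reply OK.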

Tip

Find more examples in the Redis Gears GitHub repository.

Verifying the write-through pattern using RedisInsight

Tip

RedisInsight is a free Redis GUI for viewing data in Redis. Click here to download it.

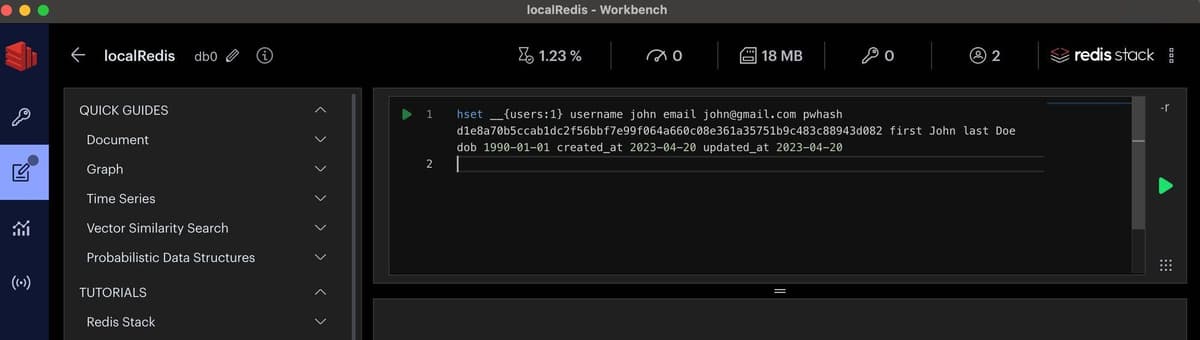

The next step is to verify that RedisGears is syncing data between Redis and PostgreSQL. Note that in our Python file we specified a prefix for the keys. In this case, we specified __ as the prefix, users as the table, and id as the unique identifier. This instructs RedisGears to look for the following key format: __{users:<id>}. Try running the following command in the Redis command line:

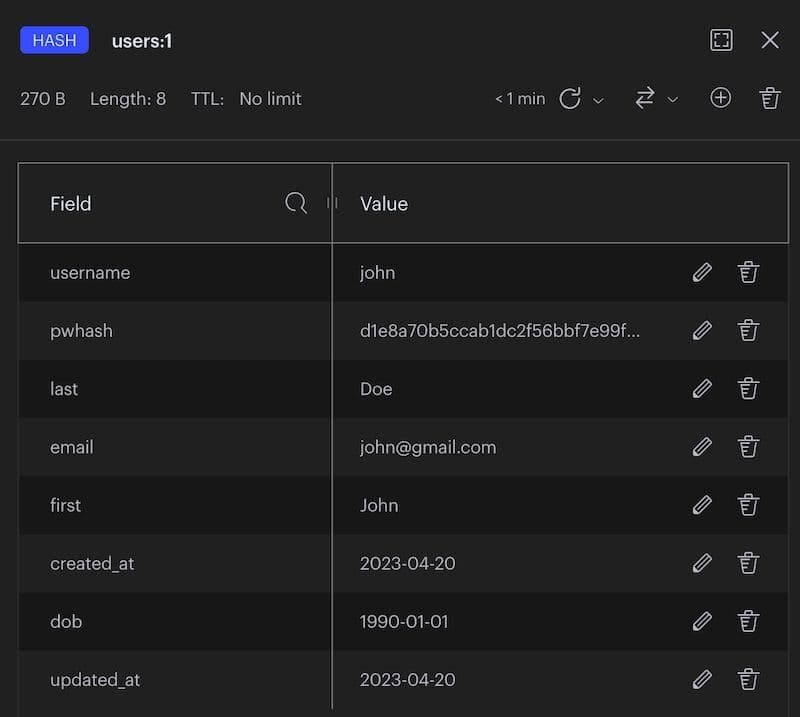

Check RedisInsight to verify that the hash value made it into Redis. After RedisGears is done processing the __{users:1} key, it will be deleted from Redis and replaced by the users:1 key. Check RedisInsight to verify that the users:1 key is in Redis.

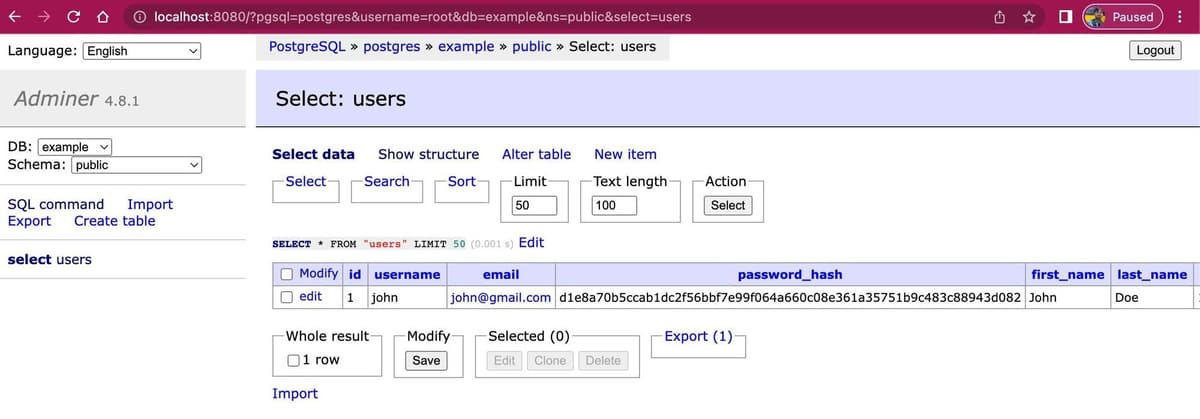

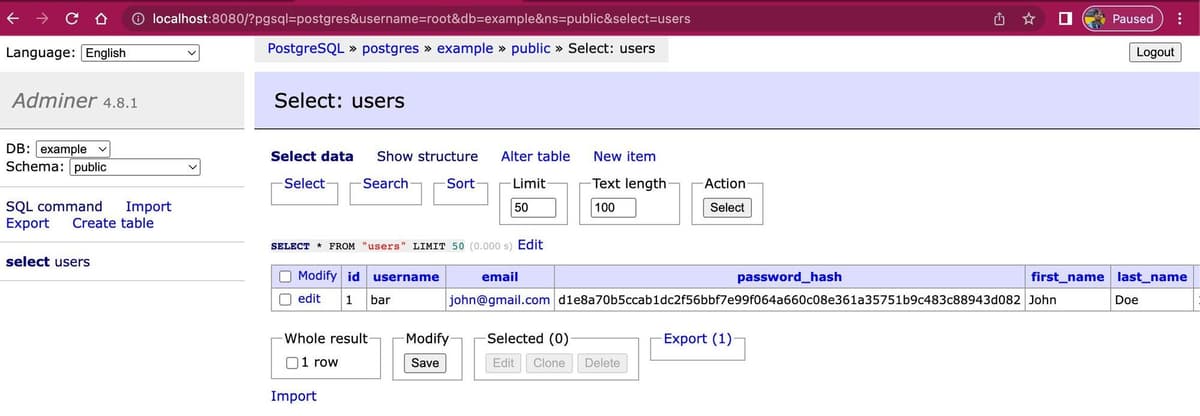
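The key convention described above can be expressed as a small parser. This Python sketch is an illustration, not rgsync's code. The function names are hypothetical. `settle()` assumes that, once the database write succeeds, the fields of __{users:<id>} are merged into users:<id> and the staging key is removed, which matches what you just observed in RedisInsight.

```python
from typing import Tuple


def parse_write_through_key(key: str, keys_prefix: str = "__") -> Tuple[str, str]:
    """Split a key such as '__{users:1}' into ('users', '1')."""
    head = keys_prefix + "{"
    if not (key.startswith(head) and key.endswith("}")):
        raise ValueError(f"not a write-through key: {key!r}")
    table, sep, row_id = key[len(head):-1].partition(":")
    if not (sep and table and row_id):
        raise ValueError(f"expected {keys_prefix}{{<table>:<id>}}, got {key!r}")
    return table, row_id


def settle(keyspace: dict, key: str, keys_prefix: str = "__") -> str:
    # Called after the database write succeeded: move the fields to the final key.
    table, row_id = parse_write_through_key(key, keys_prefix)
    final_key = f"{table}:{row_id}"
    keyspace.setdefault(final_key, {}).update(keyspace.pop(key))
    return final_key
```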

Next, confirm that the user was also inserted into PostgreSQL by opening the select page in Adminer. You should see the user in the table.

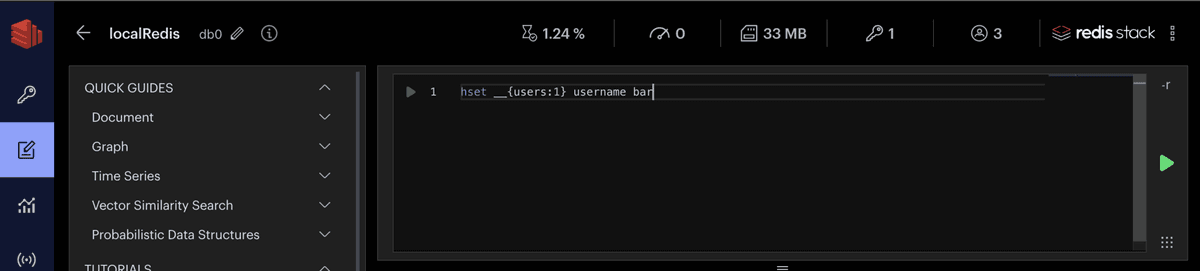

That is how you can use RedisGears to write through to PostgreSQL. So far we have only added a hash key, but you can also update specific hash fields, and the change will be reflected in your PostgreSQL database. Run the following command to update the username field:

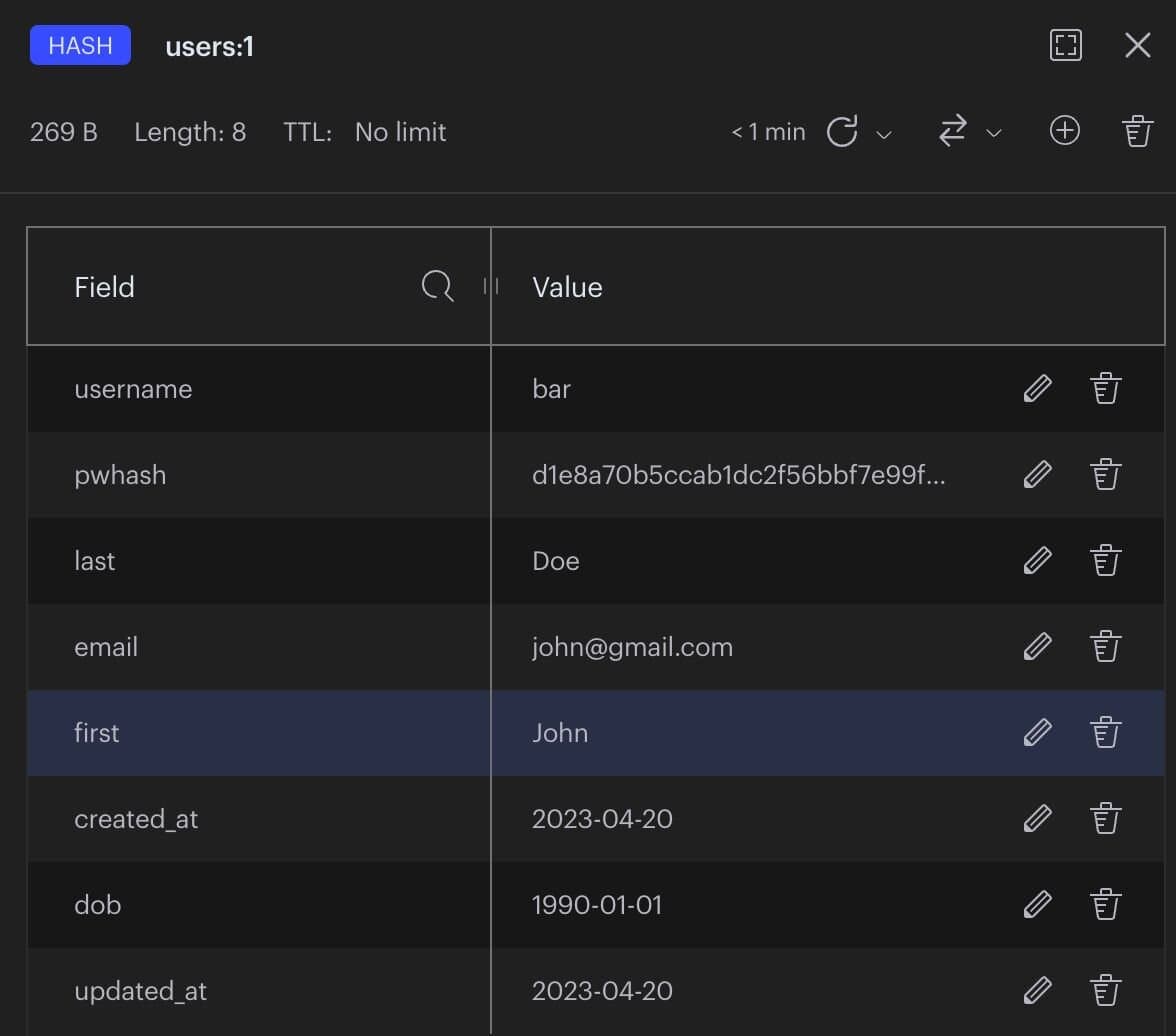

In RedisInsight, verify that the username field is updated.

Now go into Adminer and check the username field. You should see that it has been updated to bar.

Ready to use Redis for write-through caching?

You now know how to use Redis for write-through caching. You can adopt Redis incrementally, wherever it is needed, using different strategies and patterns. For more resources on caching, check out the links below:

Additional resources

- Caching with Redis

- Redis YouTube channel

- Clients like Node Redis and Redis OM Node help you use Redis in Node.js applications.

- RedisInsight: to view your Redis data or to play with raw Redis commands in the Workbench

- Try Redis Cloud for free